You’ve crafted what you think is brilliant AI-generated content. It’s informative, well-structured and hits all the right keywords. You hit publish, only to have it flagged by an AI detector. Red alert! Suddenly, your credibility and reach are on the line. Sound familiar? You’re not alone.

AI detectors like UndetectableAI, ZeroGPT, and Turnitin are becoming ubiquitous. Platforms, educators and businesses are increasingly deploying them to sniff out machine-generated text. Their goal? To maintain human authenticity, academic integrity and search engine trust.

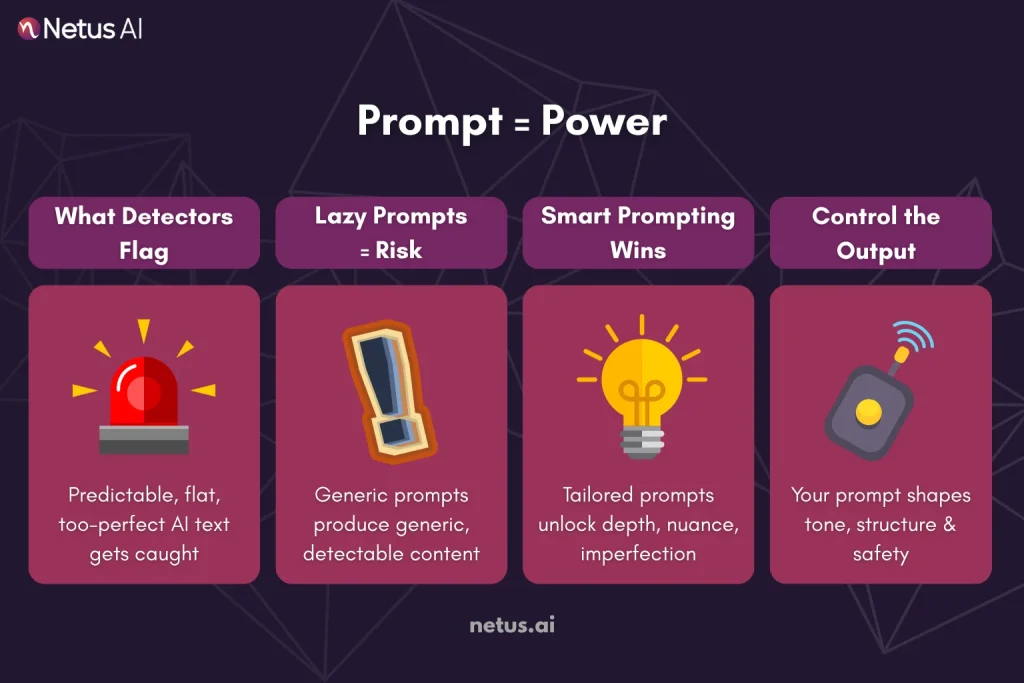

Why Your Prompt Is Your Secret Weapon

AI detectors don’t judge what your text says; they look for the statistical fingerprints of generic AI writing. These fingerprints often include:

- Excessive Predictability: Overly formulaic structure and common phrasing.

- Lack of Depth/Nuance: Superficial treatment of complex topics.

- Unnatural Fluency: An almost too-perfect flow devoid of human quirks.

- Specific Lexical Choices: Over-reliance on certain words or sentence patterns common in base-model outputs.

This is where prompt engineering becomes mission-critical. Your prompt isn’t just a request; it’s the blueprint guiding the AI’s behavior, style and thought process.

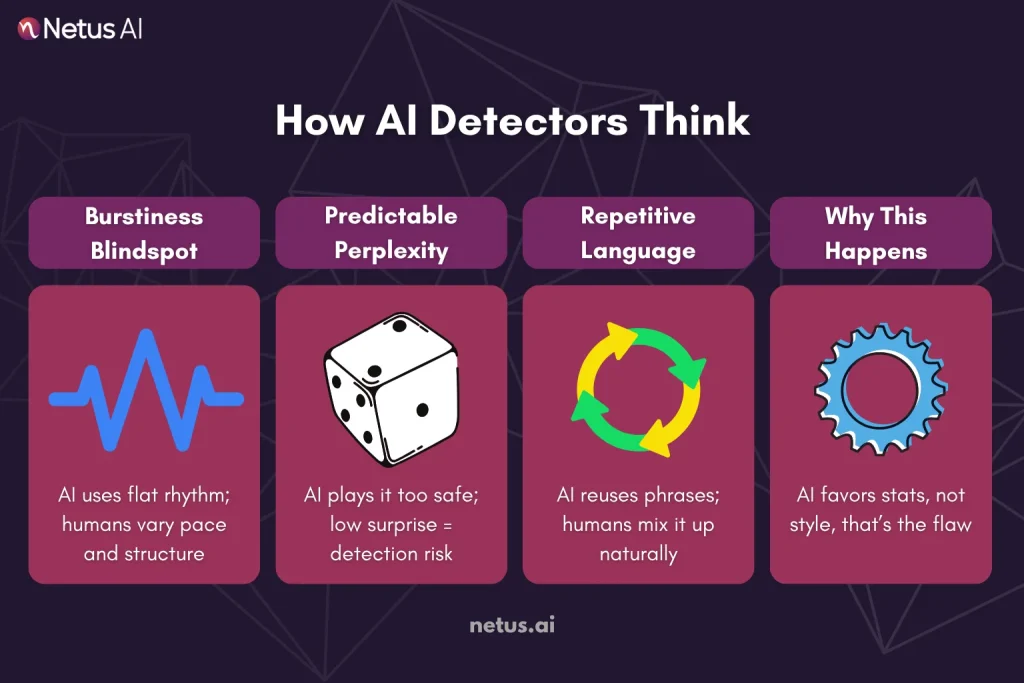

Decoding the Detectives: What AI Scanners Actually Hunt For?

Think of AI detectors as sophisticated pattern-recognition machines. They analyze text statistically for machine-generated fingerprints rather than reading it for meaning. Here’s what’s really on their radar:

1. The Rhythm of Writing: Burstiness (or Lack Thereof)

- What it is: Burstiness is the variation in sentence length and structure across a text. Human writing is bursty: it blends long, complex sentences with short ones and fragments to control pace.

- The AI Tell: Default AI outputs often exhibit remarkably low burstiness. Sentences tend towards a similar, predictable length and structure, creating a monotonous, “machine-like” rhythm. Think of it as marching in lockstep vs. a natural, varied gait.

- Why it Happens: LLMs are trained to predict the most probable next token (a word or word fragment), which tends to pull sentences toward similar lengths and structures.
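
To make burstiness concrete, here is a minimal sketch that scores it as the coefficient of variation of sentence length (standard deviation divided by mean). This is one simple proxy chosen for illustration; the article does not say which formula any particular detector uses, and the function names and sample texts are hypothetical.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Split on ., ! and ? and return the word count of each non-empty sentence."""
    sentences = [s.strip() for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length; 0.0 means every sentence is the same length."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # not enough sentences to measure variation
    return statistics.pstdev(lengths) / statistics.mean(lengths)


# Illustrative samples (made up for this sketch).
uniform = "The cat sat down. The dog ran off. The bird flew away."
varied = "Stop. The cat sat quietly by the window for most of the long afternoon. Why?"

print(sentence_lengths(uniform), burstiness(uniform))
print(sentence_lengths(varied), burstiness(varied))
```

A higher score means more variation in rhythm. The naive splitter treats abbreviations and decimals as sentence ends, so treat the numbers as rough.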

2. The Predictability Problem: Perplexity

- What it is: Perplexity measures how “surprised” or “confused” a language model is by a given piece of text. If the text is highly predictable based on the model’s training data, perplexity is low.

- The AI Tell: Basic AI-generated text often exhibits abnormally low perplexity. It sticks to statistically probable paths and lacks the idiosyncratic choices of human writing.

- Why it Happens: LLMs, being prediction engines, often produce safe, predictable outputs.
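
The standard definition of perplexity is the exponential of the average negative log-probability a model assigns to each token. The sketch below computes it from a list of per-token probabilities. The probability values are made up for illustration; in practice they would come from a scoring language model, which this example does not include.

```python
import math


def perplexity(token_probs: list[float]) -> float:
    """exp(mean negative log-probability) over the tokens of a text."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)


# Hypothetical per-token probabilities, not real model output.
predictable = [0.9, 0.8, 0.9, 0.85]   # the model saw every token coming
surprising = [0.9, 0.05, 0.6, 0.02]   # a few unexpected word choices

print(perplexity(predictable))
print(perplexity(surprising))
```

A few low-probability tokens are enough to raise the score noticeably, which is why unusual but apt word choices matter more than overall length.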

3. The Echo Chamber: Repetitive Phrasing & Lexical Uniformity

- What it is: Humans naturally use synonyms, vary sentence starters and rephrase ideas. We might accidentally repeat a word occasionally, but consistent, formulaic phrasing stands out.

- The AI Tell: Detectors identify repetitive sentences, redundant phrasing, and limited vocabulary on a topic. Common culprits include overuse of phrases like “it’s important to note,” “in conclusion,” “leverage,” “delve into,” or “tapestry.”

- Why it Happens: LLMs learn patterns from massive datasets. Certain phrases and structures become highly reinforced pathways.
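
As a rough self-check for lexical uniformity, the sketch below computes a type-token ratio (unique words divided by total words) and counts the stock phrases named above. Both measures are simple stand-ins chosen for illustration, not the method of any specific detector, and the helper names are hypothetical.

```python
import re

# Stock phrases taken from the list above.
STOCK_PHRASES = ["it's important to note", "in conclusion", "leverage", "delve into", "tapestry"]


def _normalize(text: str) -> str:
    """Lowercase and convert curly apostrophes so phrases match either style."""
    return text.lower().replace("\u2019", "'")


def type_token_ratio(text: str) -> float:
    """Unique words / total words; lower values mean a more repetitive vocabulary."""
    words = re.findall(r"[a-z']+", _normalize(text))
    return len(set(words)) / len(words) if words else 0.0


def stock_phrase_counts(text: str) -> dict[str, int]:
    """Count substring occurrences of each stock phrase (so 'leveraged' also counts)."""
    normalized = _normalize(text)
    return {p: normalized.count(p) for p in STOCK_PHRASES if p in normalized}


sample = "It's important to note that we leverage data. In conclusion, we leverage tools."
print(type_token_ratio(sample))
print(stock_phrase_counts(sample))
```

Type-token ratio falls naturally as a text gets longer, so compare passages of similar length.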

The Core Issue: Why Default AI Output Screams “Machine!”

These detectable patterns aren’t bugs; they’re inherent byproducts of how Large Language Models work:

- Probability is King: LLMs generate text by calculating the probability of one word following another, billions of times over.

- Training Data Echoes: Models learn from the average of the internet, so their outputs often default to generic language that lacks a unique human voice.

- Lack of True Understanding: While impressive, LLMs don’t possess human-like comprehension, lived experience or genuine creativity.

In short, AI detectors flag writing that lacks human variation: low burstiness, low perplexity and limited lexical diversity.

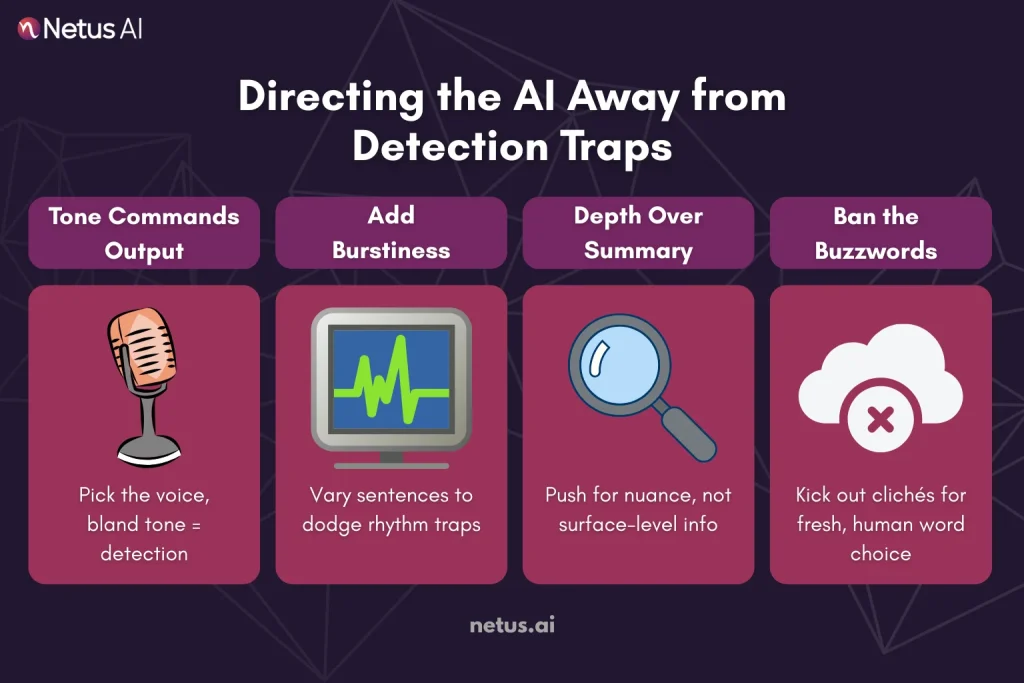

Your Prompt is the Conductor: Directing the AI Away from Detection Traps

Think of your prompt as the AI’s creative director. It doesn’t just tell the AI what to write; it fundamentally shapes how the AI thinks, structures its response and chooses its words.

How Prompts Sculpt Output (and Evade Detection):

1. Tone & Voice Dictation

- Generic Prompt: Results in the AI’s default, neutral, often slightly formal or “corporate-sounding” tone, a major detector flag.

- Engineered Prompt: Specifies a distinct voice (“Employ a conversational, slightly opinionated, tech-savvy blogger tone. Mimic the witty, irreverent style of a specific influencer, or adopt a warm, empathetic, mentor-like voice.”)

2. Sentence Variety & Rhythm (Burstiness Injection)

- Generic Prompt: Leads to monotonous sentence structures (Subject-Verb-Object, repeated ad nauseam) and similar lengths.

- Engineered Prompt: Explicitly demands variation (“Vary sentence length and type, and use rhetorical questions to bypass AI detection. Include both short, impactful sentences and longer, descriptive ones.”)

3. Content Depth & Nuance (Perplexity Booster)

- Generic Prompt: Yields surface-level summaries, regurgitating common knowledge without unique insight or specific detail.

- Engineered Prompt: Forces deeper analysis (“Examine cons and counterarguments, providing specific, obscure examples or case studies with clear reasoning. Link to a surprising current event and include a brief, relevant personal anecdote.”)

4. Lexical Diversity & Avoiding Clichés

- Generic Prompt: Invokes the AI’s overused crutch words and phrases (“leverage,” “tapestry,” “in today’s world,” “it’s important to note”).

- Engineered Prompt: Actively bans clichés (“Bypass AI detection by avoiding jargon like ‘leverage’ or ‘synergy.’ Be specific, use vivid verbs, industry terms, and varied vocabulary.”).
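
The four levers above (voice, sentence variety, depth and banned clichés) can be assembled into one reusable prompt template. The sketch below is one possible way to do that; `build_prompt` and its parameters are hypothetical names, and the instruction wording is adapted from the examples in this section.

```python
def build_prompt(topic: str, voice: str, banned: list[str]) -> str:
    """Assemble an engineered prompt covering voice, rhythm, depth and vocabulary."""
    parts = [
        f"Write about {topic}.",
        f"Voice: {voice}.",
        # Sentence variety and rhythm
        "Vary sentence length and type. Include both short, impactful sentences "
        "and longer, descriptive ones.",
        # Content depth and nuance
        "Examine cons and counterarguments, with specific examples and clear reasoning.",
    ]
    if banned:
        # Lexical diversity: name the words to avoid explicitly
        parts.append("Avoid these words and phrases: " + ", ".join(banned) + ".")
    return "\n".join(parts)


prompt = build_prompt(
    topic="remote work",
    voice="conversational, slightly opinionated, tech-savvy blogger",
    banned=["leverage", "synergy"],
)
print(prompt)
```

Keeping each instruction on its own line makes it easy to swap one lever at a time and see which change affects the output.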

Combining Prompts with Humanization Tools

Even with the smartest prompts and best-generation logic, your content isn’t fully protected until it passes detection. That’s where testing and tweaking become non-negotiable.

Here’s how to test and refine effectively:

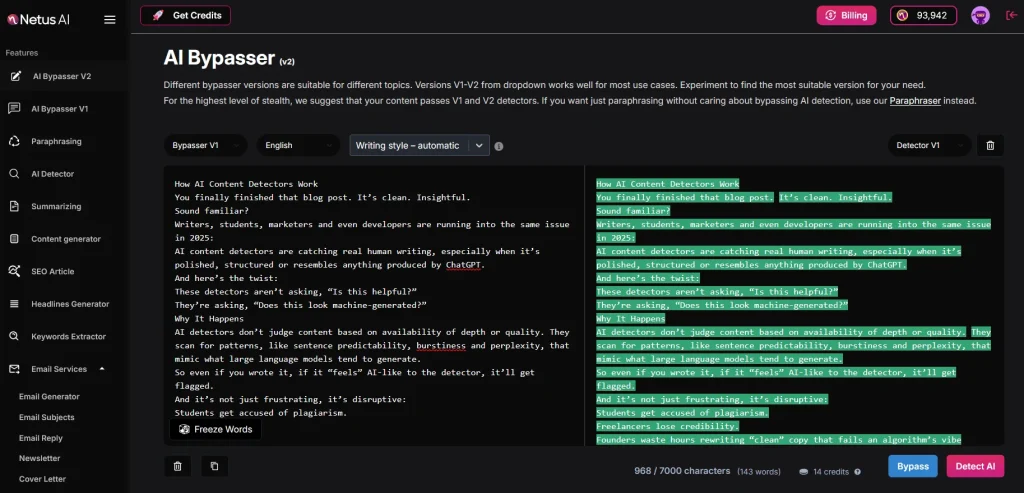

- Run a real-time scan using NetusAI: Paste content into the detector and view results line-by-line. You’ll instantly see which paragraphs are flagged as 🟥 Detected or 🟡 Unclear.

- Tweak only the weak points: Don’t waste time rewriting safe areas. Focus on sections that trigger flags, often the intro, generic transitions or overly formal conclusions.

- Interpret detection results the smart way:

  - High probability + flat tone = needs rhythm and voice variation.

  - Medium score + repetitive structure = adjust sentence length and flow.

  - Low score + no red = you’re clear to publish, but still read aloud for tone.

- Rescan until green: Confirm that your final output hits “🟩 Human” status across the board.

This trial-and-adjust loop is what separates content that reliably passes from content that only slips through temporarily. Prioritize a human feel, for both detectors and readers, over perfection.
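
The scan, tweak and rescan loop can be sketched in a few lines. Everything here is an assumption made for illustration: `score` and `rewrite` are hypothetical callables standing in for whatever detector and editing step you use (including manual edits), and the threshold and round limit are arbitrary. This is not the API of NetusAI or any other tool.

```python
from typing import Callable


def refine(
    paragraphs: list[str],
    score: Callable[[str], float],    # hypothetical: returns 0.0 (human) to 1.0 (AI)
    rewrite: Callable[[str], str],    # hypothetical: your editing step
    threshold: float = 0.5,
    max_rounds: int = 3,
) -> list[str]:
    """Rewrite only flagged paragraphs, then rescan, until none are flagged."""
    current = list(paragraphs)
    for _ in range(max_rounds):
        flagged = [i for i, p in enumerate(current) if score(p) >= threshold]
        if not flagged:
            break  # everything passes; stop editing
        for i in flagged:
            current[i] = rewrite(current[i])  # safe paragraphs are left untouched
    return current


# Toy stand-ins so the sketch runs end to end.
def toy_score(paragraph: str) -> float:
    return 1.0 if "leverage" in paragraph else 0.0


def toy_rewrite(paragraph: str) -> str:
    return paragraph.replace("leverage", "use")


draft = ["We leverage data.", "Short and clear."]
print(refine(draft, toy_score, toy_rewrite))
```

The round limit matters: if a paragraph still fails after a few passes, rewriting it by hand is usually faster than looping again.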

Don’t just prompt and post. Test, refine and confirm every piece before it goes live.

NetusAI offers content generation and SEO article writing tools that bypass AI detection.

Final Thoughts

Bypassing AI detectors isn’t about gaming the system; it’s about using AI more intelligently.

But prompts alone aren’t magic. They need to be paired with smart editing, paragraph-level testing and human judgment.

That’s where tools like NetusAI humanizer come in, giving you the power to scan, tweak and confirm human-like quality before you publish.

When done right, your content stays high-performing, undetectable and fully aligned with what both readers and search engines want. AI should work for your SEO, not against it. And with the right prompt strategy, it absolutely can.

FAQs

1. Can prompts alone help bypass AI detection tools?

Not entirely. While well-crafted prompts can guide the model toward more human-like outputs, they must be combined with editing and testing. Prompts are the foundation, but human review and refinement are what seal the deal.

2. What type of prompts reduce the chances of getting flagged?

Use prompts that include tone instructions, perspective shifts, personal anecdotes or questions. For example: “Write this as if you’re sharing advice from experience” or “Add a metaphor or analogy.” The goal is to break away from robotic, templated outputs.

3. Do longer prompts work better than short ones?

Often, yes. Longer, more specific prompts give the model more direction and reduce the risk of defaulting to generic patterns. Include context, audience and intent to shape better results.

4. Should I still scan content with an AI detector after prompt-based writing?

Absolutely. Prompts reduce risk, but they don’t eliminate it. Always run your draft through a trusted AI detector like NetusAI to pinpoint any lingering patterns or red flags.

5. What do I do if part of my content still gets flagged?

Use paragraph-level rewriting tools. Don’t delete everything. Isolate the flagged section, humanize it by changing tone or structure, then re-scan.

6. Can NetusAI help with prompt-based workflows?

NetusAI supports prompt-based content creation with real-time detection and rewriting, enabling safer content without multiple tools. It’s built for creators who want control and compliance in one workflow.