Changing a few words isn’t enough to fool AI detectors. You can take an AI-written paragraph, swap synonyms, shuffle a few lines and still get flagged.

Why? Because modern detectors don’t just evaluate what you say. They judge how you say it.

Humanized AI content is writing that mimics the tone, intent and rhythm of real people, not just the structure of language. It feels lived-in, unpredictable and emotionally grounded. That means:

- Voice: real opinions, personal tone, even a bit of humor.

- Intent: clear reasoning instead of generic filler.

- Emotional flow: changes in energy, pacing and mood.

- Unpredictability: sentence variety, unexpected transitions and natural imperfection.

If paraphrasing is a surface fix, humanization is a total rewrite of texture.

Google’s Helpful Content Signals & Detector Overlap

The scary part? You can follow all of Google’s SEO rules and still tank if your content sounds like it was written by AI.

Google’s helpful content system (HCS) rewards content demonstrating E-E-A-T: Experience, Expertise, Authoritativeness and Trustworthiness. The thing is, AI detectors like OriginalityAI or ZeroGPT flag AI content based on writing style. And their signals increasingly align with what Google likes (or dislikes).

Here’s where things get murky:

- Detectors flag AI-style patterns (low burstiness, flat tone)

- Google downranks unhelpful or templated content

- Readers bounce when they sense robotic writing

That means if your blog looks AI-written, it risks being downranked, even if the facts are solid.

That’s why humanizing your AI content does double duty:

- It dodges detectors that penalize mechanical writing.

- It aligns better with Google’s helpfulness criteria.

Evidence Round-Up

It’s not a theory anymore: AI-style writing really is getting penalized.

Even partial-AI content hasn’t been safe. Turnitin has acknowledged false positives, where human writing gets flagged as AI because it is too polished or pattern-based. In other words, if your sentences are too symmetrical or “perfect,” you’re guilty until proven human.

What does that mean for SEO?

- Robotic writing leads to higher bounce rates and lower dwell time

- Search engines downrank “template-sounding” articles

- Detectors mislabel hybrid content, damaging credibility

And it’s not just about ranking. Studies show readers are getting savvier: they can feel when something was written by AI. That gut-check is becoming a trust-breaker.

Humanization Techniques Ranked

So you’ve written a draft, maybe with ChatGPT, maybe half by hand, and now you need to pass detection. What are your options?

Most current AI content humanization strategies are no longer effective.

Manual Editing

Still the gold standard, in theory. Manual rewrites give you full control over tone and pacing. You can inject quirks, rhythm shifts and intent. But here’s the catch: it’s slow. Doing it well takes time, effort and practice. If you’re working on one essay, fine. But if you’re scaling blogs or rewriting 50 product pages? Forget it.

Word Swaps & Basic Paraphrasers

Tools that just switch out words or rewrite sentences don’t fix the core issue: AI patterns live in structure, not just vocabulary.

If you change “great” to “excellent,” but the rhythm stays the same, you’re still waving a red flag. Most free paraphrasers fall flat here. They’re fast, but they leave your work partly detectable, which is sometimes worse than fully detectable.

Style Disruptors (Sentence Shufflers, Randomizers)

Some tools try to boost “burstiness”, that variation in sentence length detectors scan for, by randomly mixing structure. You’ll pass some checks. But your content might feel choppy, jarring or worse, written by a confused intern. Readers notice. Trust drops.
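To make “burstiness” concrete, here is a minimal sketch that scores it as the spread of sentence lengths relative to their average. The function names, the naive sentence splitter and the choice of metric (standard deviation divided by mean) are assumptions made for illustration; real detectors do not publish their exact formulas and likely combine many more signals.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Return the word count of each sentence in the text."""
    # Naive split on ., ! or ? followed by whitespace; fine for a sketch.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Standard deviation of sentence length divided by the mean length."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # not enough sentences to measure variation
    mean = statistics.mean(lengths)
    return statistics.pstdev(lengths) / mean if mean else 0.0


# Three identical-length sentences versus a short/long/short mix.
flat = "The tool is fast. The tool is cheap. The tool is good."
varied = (
    "It works. But when you push it past fifty pages in one sitting, "
    "things start to wobble. Why?"
)

print("flat:", burstiness(flat))
print("varied:", burstiness(varied))
```

The flat sample scores zero because every sentence has the same length, while the varied sample scores much higher. A random sentence shuffler can raise this number without making the text read any better, which is the trade-off described above.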

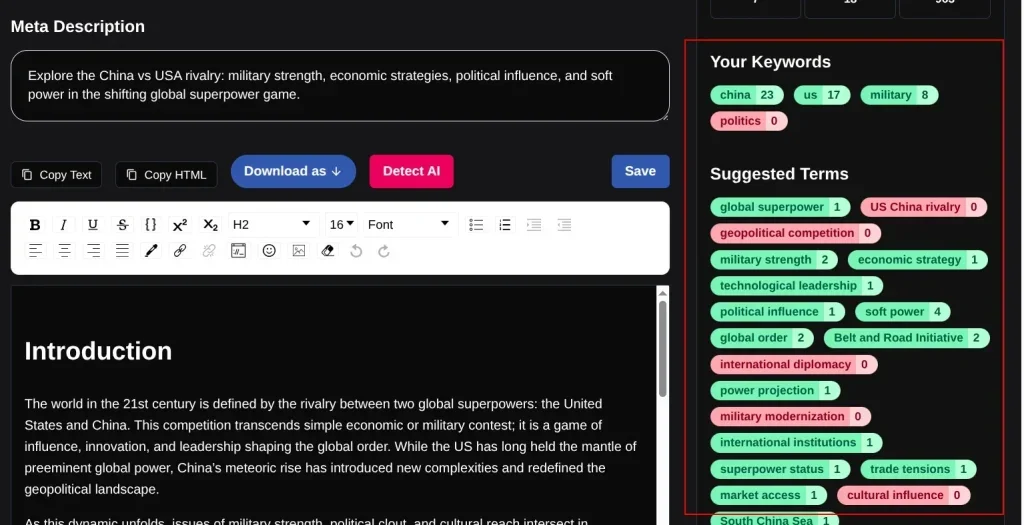

Content Starts at Generation: NetusAI’s SEO Article Writer and Content Generator

Avoiding stylometry and detection issues shouldn’t begin after content is written; it should start with the writing itself. That’s where NetusAI steps in.

Elevate your content with the NetusAI SEO Article Generator, designed to produce high-quality, human-like blog posts. NetusAI transforms content to achieve higher search rankings and improved reader engagement. Unlike generic tools, it goes beyond simple drafting. It:

- Lets you input headlines and targeted SEO keywords

- Supports long-form templates for full blogs

- Auto-generates a structure with Title → Outline → Content

- Works in multiple languages for global teams

The best part? It’s all built into the Netus AI Bypasser + Detector system. That means your content won’t just be easy to read; it’ll also be set up to fly under the radar of AI detectors.

You can generate, review and rewrite all in one interface without needing third-party tools to patch the gaps. It’s built for marketers, freelancers and bloggers who want their AI content to actually pass as human-written.

Future-Proofing Content in an AI-Regulated World

AI detection is evolving from “guesswork” to “proof.” If you’re only thinking about detectors today, you’re already behind.

Invisible Watermarks Are Coming

OpenAI, Anthropic and Meta have explored token-level watermarking, which embeds patterns in AI-generated text that readers can’t see but detection tools can.

These aren’t just guesses; they’re cryptographic tags. Once this becomes the norm, rewriting a few lines won’t save you. Only deep humanization will.
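For a feel of how a token-level watermark can be checked statistically, here is a toy sketch based on the published “green list” idea: a keyed hash of the previous token marks roughly half of all possible next tokens as “green,” a watermarking generator prefers green tokens, and a detector counts how many green tokens appear. Everything here is an assumption for illustration (the function names, the whitespace-level tokens, the SHA-256 hash, the 50% split); it is not how any company named above implements watermarking.

```python
import hashlib
import math


def is_green(prev_token: str, token: str, green_fraction: float = 0.5) -> bool:
    """Pseudo-randomly assign `token` to the green list, seeded by `prev_token`."""
    digest = hashlib.sha256(f"{prev_token}\x00{token}".encode("utf-8")).digest()
    value = int.from_bytes(digest[:8], "big") / 2**64  # uniform in [0, 1)
    return value < green_fraction


def watermark_z_score(tokens: list[str], green_fraction: float = 0.5) -> float:
    """How far the green-token count sits above what chance alone predicts."""
    pairs = list(zip(tokens, tokens[1:]))
    n = len(pairs)
    if n == 0:
        return 0.0
    green = sum(is_green(prev, tok, green_fraction) for prev, tok in pairs)
    expected = green_fraction * n
    std = math.sqrt(n * green_fraction * (1 - green_fraction))
    return (green - expected) / std


def pick_green(prev_token: str, candidates: list[str]) -> str:
    """Toy 'watermarking generator': take the first green candidate, if any."""
    for candidate in candidates:
        if is_green(prev_token, candidate):
            return candidate
    return candidates[0]  # fallback when no candidate is green


# Build a 50-token watermarked sequence from a made-up 20-word vocabulary.
vocab = [f"w{i}" for i in range(20)]
marked = ["start"]
for _ in range(49):
    marked.append(pick_green(marked[-1], vocab))

print("z-score of watermarked tokens:", watermark_z_score(marked))
```

Unwatermarked text should land near a z-score of zero, while the watermarked sequence scores far above it. Because the signal lives in the token choices themselves, swapping only a handful of words leaves most of the green tokens in place.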

Content Provenance Is Going Mainstream

Think of it like a “nutrition label” for your content. Platforms like Medium, Substack and LinkedIn are already experimenting with AI-content labels.

This means:

→ If your article was written or heavily edited by AI, platforms might automatically tag it, unless it passes as human.

Governments Are Stepping In

The EU AI Act imposes transparency and labeling obligations on AI-generated content and classifies certain AI systems, including some used in education, as “high-risk.” In the U.S., schools and companies are requiring authorship declarations, even for lightly AI-edited documents.

And more regulation is coming.

Expect mandates like:

- “AI-Free” content certifications

- Penalties for undisclosed AI usage

- Required use of detection software by publishers

What This Means for You

It’s no longer just about fooling AI detectors. It’s about transforming your content so that it:

- Passes watermark scans

- Avoids AI-labeling

- Meets human trust standards

- Holds up under legal scrutiny

And that’s exactly what NetusAI helps you do. It doesn’t just paraphrase; it reshapes the flow, unpredictability, tone and emotion of your writing. That’s what makes your content undetectable and future-proof.

Final Thoughts

It’s not enough to write well; your content has to feel human. For students, founders or marketers, overly “AI-sounding” content is a disadvantage. And rewriting it manually? That’s slow, inconsistent and mentally draining.

Instead of hoping your work passes, you can know it will, with the right workflow.

FAQs

1. Can Google detect AI-generated content?

Google’s algorithms prioritize helpful, expert and original content rather than AI detection as such. Generic AI content may still be downranked by the helpful content system for lacking human depth. Humanizing AI content, with tools like NetusAI, is essential for providing real value and avoiding downranking.

2. Why does my AI-written blog rank lower on Google?

AI content, particularly from basic rewriters, frequently falls short of Google’s valued E-E-A-T signals. This often results in “unhelpful” content. NetusAI, however, humanizes content by adjusting its structure, tone and rhythm, making it sound original and algorithm-safe.

3. Do AI detectors ever falsely flag human content?

Yes. Turnitin acknowledges that its detector can produce false positives, flagging fully human-written essays as AI. GPTZero, OriginalityAI and others rely on stylometry, perplexity and burstiness, which can mistake clear, polished human writing for AI.

4. Is paraphrasing enough to bypass detectors?

Not by itself. AI detectors analyze sentence predictability, rhythm and structural patterns, so simple synonym swaps or sentence reshuffles won’t fool tools like GPTZero or OriginalityAI. NetusAI bypasses detection by altering sentence flow, increasing burstiness and subtly shifting stylometry while preserving your message.

5. Will watermarking become mandatory for AI tools?

Possibly. OpenAI and Anthropic are testing watermarks, and the EU AI Act supports provenance tracking. LinkedIn and Medium are piloting AI-tagging. As detection technology improves, AI content may need humanization for SEO, and rewriting tools like NetusAI will be crucial.

6. What’s the difference between rewriting and humanizing content?

Rewriting changes words. Humanizing changes how it feels. Think tone shifts, emotional variance, rhythm, pacing, all the traits that make content sound “lived-in.” Most rewriters only paraphrase. NetusAI rewrites high-risk phrasing, adjusts sentence dynamics and adds voice to make AI-generated content feel human-authored.

7. How can I make my AI content undetectable for school or SEO?

Use a multi-step process:

Detect → Rewrite → Retest.

That’s the exact workflow built into NetusAI. Start with the built-in AI detector, rewrite flagged sections using the smart engines and instantly retest, all in one place.
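The Detect → Rewrite → Retest loop can be sketched in a few lines. This is a generic illustration, not the NetusAI API: `detect` and `rewrite` are hypothetical callables you would supply, the 0.5 threshold and the three-round cap are arbitrary assumptions, and the stub functions exist only so the example runs.

```python
from typing import Callable, Tuple


def detect_rewrite_retest(
    text: str,
    detect: Callable[[str], float],   # hypothetical: returns an "AI-likeness" score in [0, 1]
    rewrite: Callable[[str], str],    # hypothetical: returns a revised version of the text
    threshold: float = 0.5,
    max_rounds: int = 3,
) -> Tuple[str, float, int]:
    """Rewrite and retest until the score drops to the threshold or rounds run out."""
    score = detect(text)
    rounds = 0
    while score > threshold and rounds < max_rounds:
        text = rewrite(text)
        score = detect(text)  # retest after every rewrite
        rounds += 1
    return text, score, rounds


# Stand-in functions for demonstration only.
def stub_detect(text: str) -> float:
    return 1.0 if "delve into" in text else 0.0


def stub_rewrite(text: str) -> str:
    return text.replace("delve into", "dig into")


final_text, final_score, rounds = detect_rewrite_retest(
    "Let us delve into the data.", stub_detect, stub_rewrite
)
print(final_text, final_score, rounds)
```

Capping the number of rounds matters: if a rewrite step never lowers the score, the loop stops and returns the last score instead of spinning forever, which tells you the text needs a manual pass.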