You spend hours crafting a thoughtful, well-researched article. The result flows effortlessly, sounds polished and delivers real help. But the second you drop it into an AI detector like ZeroGPT, the screen lights up with a big red flag: “AI-generated.”

Sound familiar?

With platforms like Medium now flagging AI-generated content, brands face a real threat to reader trust. Simply prompting ChatGPT to “write more human” isn’t a guaranteed solution.

Why Does AI Content Get Flagged?

Here’s the frustrating part: Your content may sound great to a human reader but still fail an AI detection test.

Why?

Because AI detectors aren’t judging your work like an editor or professor would. They’re scanning for patterns, specifically the kinds of patterns large language models (LLMs) like ChatGPT, Gemini or Claude leave behind.

For example:

- Perplexity: This measures how predictable your word choices and sentence structures are to a language model. The more “expected” each next word feels to the model, the lower the perplexity and the more likely the text is to trigger a flag.

- Burstiness: This refers to sentence variety. Humans naturally mix short, punchy lines with longer, more detailed ones. AI models often fall into rhythm traps, keeping sentence length and structure oddly consistent.

- Stylometric Fingerprints: Detectors also analyze your use of passive voice, repetitive phrases and syntactic patterns. If your content follows the same grammatical structure over and over (as AI output typically does), it raises suspicion.

That’s why fixing AI-detected content isn’t just about making it “better.” It’s about making it feel messy enough, in a human way.
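To make the perplexity idea concrete, here is a minimal sketch. It assumes a toy unigram model with add-one smoothing, built from a tiny reference text. Real detectors score tokens with a large language model instead, and the function names here are illustrative rather than taken from any detector.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def unigram_perplexity(text: str, reference: str) -> float:
    """Perplexity of `text` under a unigram model fit on `reference`.

    Toy assumption: word probabilities come from add-one-smoothed counts.
    Lower values mean the words were more predictable to the model.
    """
    counts = Counter(tokenize(reference))
    vocab_size = len(counts) + 1  # one extra slot shared by all unseen words
    total = sum(counts.values())
    tokens = tokenize(text)
    if not tokens:
        raise ValueError("text contains no words")
    log_prob = sum(
        math.log((counts[tok] + 1) / (total + vocab_size)) for tok in tokens
    )
    return math.exp(-log_prob / len(tokens))


reference = "the cat sat on the mat and the dog sat on the rug"
predictable = "the cat sat on the mat"
surprising = "quantum marmalade orbits sideways"

print(unigram_perplexity(predictable, reference))
print(unigram_perplexity(surprising, reference))
```

The sentence built from words the model has already seen scores lower than the one made of unseen words. That is the same direction a detector reads it: lower perplexity looks more machine-like.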

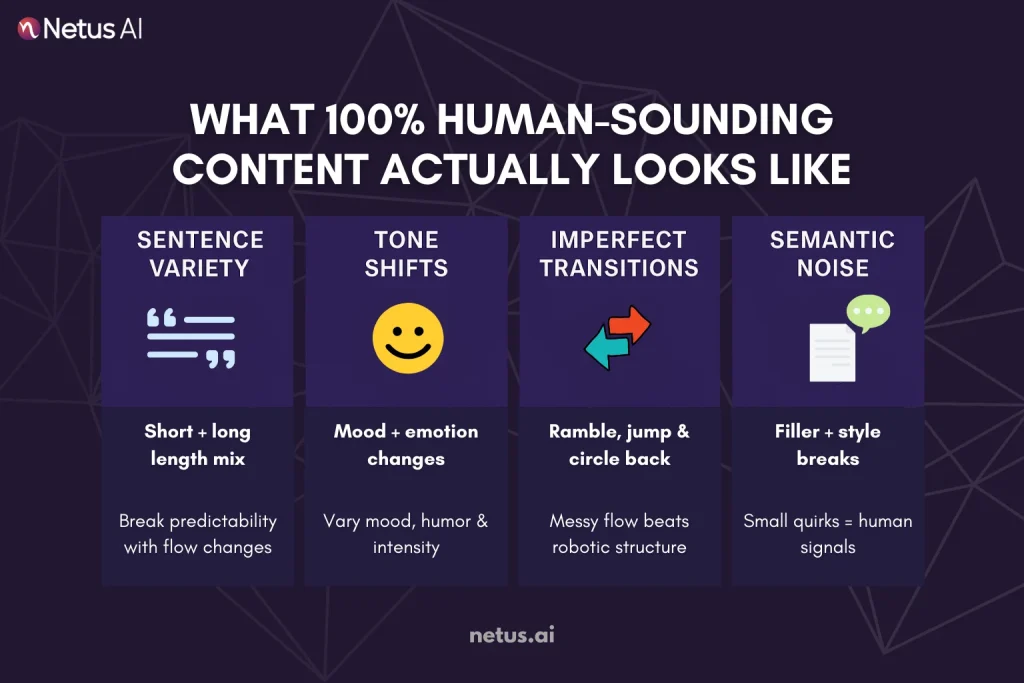

What Does 100% Human-Sounding Content Actually Look Like?

So, what does “human enough” really mean?

- It’s not about perfect grammar.

- It’s not about complex vocabulary.

And it’s definitely not about writing like Shakespeare.

AI detectors look for randomness, inconsistency and emotional tone, the subtle traits that give human writing its life.

Here’s what that looks like in practice:

1. Varied Sentence Lengths:

Humans jump between short bursts and longer thoughts. One sentence might be five words. The next could stretch for three lines. That natural imbalance makes your writing less predictable.

2. Tone Shifts:

Real people don’t sound flat. They change mood, drop humor, throw in rhetorical questions or suddenly get dramatic for effect. Detectors pick up on this emotional inconsistency as a sign of human authorship.

3. Imperfect Transitions:

AI loves smooth, templated flow: “In conclusion,” “On the other hand,” “It is important to note.”

Humans? We often jump topics, forget to transition neatly or ramble a little before circling back. That’s a good thing (at least for beating detectors).

4. Semantic Noise (In a Good Way):

We use filler phrases. We start sentences with “So” or “But honestly.” We break grammar rules intentionally for style.

These micro-messy moments tell detectors: “A human was here.”

Best Practices for Making AI Content Look Human

Humanizing AI content demands more than rewrites or casual phrasing. It requires a multilayered strategy to mirror authentic human voice, rhythm and nuance. Here’s what that really means:

1. Mix Up Your Sentence Length and Structure

AI drafts tend to keep sentence length and structure oddly consistent. Humans don’t write like that. We mix long sentences with short ones. We add fragments for effect. We sometimes break grammar rules for tone or emphasis.

Before publishing, go through your draft and vary the sentence flow. Add a rhetorical question. Use an occasional one-liner. Break up dense paragraphs.
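One rough way to check sentence variety before publishing is to measure it. The sketch below assumes burstiness can be approximated as the standard deviation of sentence length divided by the mean. This is an illustrative proxy, not the formula any particular detector uses, and the sentence splitter is deliberately naive.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Split on ., ! or ? and return the word count of each sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Standard deviation of sentence length divided by the mean.

    Assumed proxy: 0.0 means every sentence has the same length;
    higher values mean more variation.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


flat = "The tool scans the text. The tool checks the tone. The tool flags the draft."
varied = (
    "It failed. I had spent three evenings polishing that draft "
    "before the detector rejected it. Why?"
)

print(sentence_lengths(flat), round(burstiness(flat), 2))
print(sentence_lengths(varied), round(burstiness(varied), 2))
```

A score near zero is a hint that a passage needs a one-liner, a question or a longer sentence to break the rhythm.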

2. Add Personal Voice and Point of View

Break AI’s robotic vibe with deliberate subjectivity. Try openers such as “From my desk” or “Let’s be blunt.” This isn’t decoration; it’s a psychological handshake that tells the reader a real person with a point of view is behind the text.

3. Use Real-World Examples and Stories

AI-generated text tends to stay generic. Human writers add specifics: examples, mini-stories or real data points.

Let’s say you’re writing about productivity tips. Drop in a quick anecdote about how you manage your own workflow. Even one relatable sentence can make a huge difference in authenticity.

4. Break AI Writing Patterns

AI tends to favor predictable phrases like:

- “In conclusion”

- “It is important to note”

- “One possible reason is”

Swap stiff phrases like “In conclusion” for natural alternatives like “So, what’s the takeaway?” This shifts the tone from academic lecture to coffee-shop conversation, which instantly feels more human.
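Spotting these phrases by eye gets tedious in long drafts, so a small script can do the first pass. This sketch assumes a hand-maintained phrase list. The first replacement comes from the example above, while the other two are placeholders to adapt to your own voice.

```python
import re

# Stock phrases from the list above, mapped to looser alternatives.
# Only the first replacement comes from the article; the other two
# are illustrative assumptions.
STOCK_PHRASES = {
    "in conclusion": "So, what's the takeaway?",
    "it is important to note": "Worth knowing:",
    "one possible reason is": "Maybe it's because",
}


def find_stock_phrases(text: str) -> list[tuple[int, str, str]]:
    """Return (character offset, matched phrase, suggestion), sorted by offset."""
    hits = []
    for phrase, suggestion in STOCK_PHRASES.items():
        pattern = r"\b" + re.escape(phrase) + r"\b"
        for match in re.finditer(pattern, text, flags=re.IGNORECASE):
            hits.append((match.start(), match.group(0), suggestion))
    return sorted(hits)


draft = "It is important to note that detectors vary. In conclusion, test your draft."
for offset, phrase, suggestion in find_stock_phrases(draft):
    print(f"{offset}: '{phrase}' -> try: {suggestion}")
```

The script only points at candidates. Deciding whether a swap fits the sentence is still a human call.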

5. Run Your Draft Through a Detector, Then Tweak

Before publishing, use an AI detector (such as HumanizeAI or ZeroGPT) to check your content for any flags. If the detector flags anything, go back and rework those sections. Focus especially on areas that look too uniform or formulaic.

6. Don’t Just Paraphrase, Reshape

A big mistake people make is just paraphrasing the AI output. They swap words but leave the structure and tone untouched. The problem? Detectors look at writing patterns, not just vocabulary. So change the sentence structure, order and rhythm, not only the words.
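Here is a quick illustration of why word swaps fall short. The sketch below reduces each text to a crude structural signature, assumed here to be just the word count of each sentence. Real detectors use far richer features; the point is only that a synonym swap leaves this kind of signature untouched while a structural rewrite changes it.

```python
import re


def structure_signature(text: str) -> tuple[int, ...]:
    """Word count per sentence: a crude stand-in for a 'writing pattern'."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return tuple(len(s.split()) for s in sentences)


original = "AI tools are useful for writers. They can produce drafts very quickly."
paraphrased = "AI apps are helpful for authors. They can generate drafts very rapidly."
reshaped = (
    "Need a draft fast? AI tools deliver, and that speed is exactly "
    "why writers lean on them."
)

print(structure_signature(original))
print(structure_signature(paraphrased))
print(structure_signature(reshaped))
```

The paraphrased version shares its signature with the original even though half the words changed. Only the reshaped version looks different at the structural level.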

Tools That Help Humanize AI Content

Skill alone can’t humanize AI content. You need precision tooling. Based on your workflow and objectives, leverage these three tool types to engineer genuine human resonance.

AI Detectors (For Diagnosing the Problem)

Before fixing anything, you need to know if there’s even an issue. AI detectors flag text that appears too AI-generated, based on factors like perplexity, burstiness and stylometry.

Popular examples:

- Quillbot – Used by many academic institutions and SEO teams.

- ZeroGPT – Quick and free but limited in accuracy.

- Turnitin’s AI Detection Tool – Widely used in schools.

When to use:

Before submitting an essay, publishing a blog or delivering client work, just to be safe.

Rewriters / Humanizers (For Fixing AI Patterns)

Basic tools offer surface-level fixes; true humanizers fundamentally reshape sentences, vary tone and add controlled chaos to bypass detection.

Key features to look for:

- Structural rewriting (not just word swaps)

- Tone variation (casual, academic, etc.)

- Preservation of original meaning

When to use:

After a detector flags your draft. Isolate the flagged segments, then rebuild phrasing and rhythm around your key points, never compromising substance for evasion.

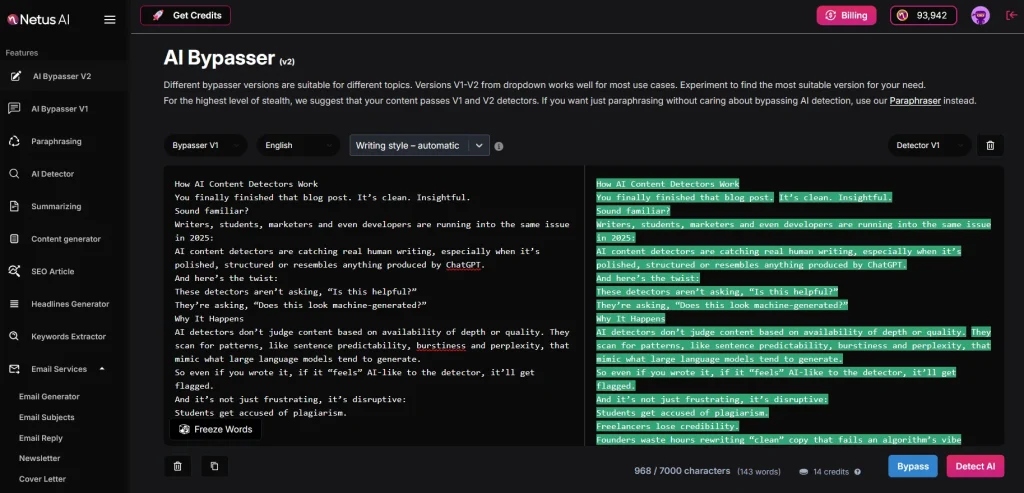

Hybrid Platforms (For All-in-One Workflows)

Combined detection-rewriting dashboards eliminate toggle fatigue. One scan → one edit interface → faster, more consistent results (deadline saviors).

Why it matters:

Hybrid tools let you scan, rewrite and instantly retest your content, all in one go. This feedback loop saves time and reduces guesswork.

Notable example:

- NetusAI bypasser (AI Bypasser V2) – Offers both detection and humanization inside the same interface.

Quick Tip: Don’t Guess. Test and Rewrite.

Whether you’re a student, marketer or blogger, the safest workflow is simple:

Detect → Rewrite → Retest → Repeat (if needed).

That way, you’re not relying on guesswork and your final content feels, reads and tests like it came straight from a human.
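In script form, the loop might look like the sketch below. Both `detect` and `rewrite` are hypothetical callables that you would wire up to whatever detector and rewriting step you use. No real tool's API is assumed, and the 0.5 threshold and three-round cap are arbitrary.

```python
from typing import Callable


def humanize_loop(
    draft: str,
    detect: Callable[[str], float],  # hypothetical: AI-likelihood score in [0, 1]
    rewrite: Callable[[str], str],   # hypothetical: returns a revised draft
    threshold: float = 0.5,
    max_rounds: int = 3,
) -> tuple[str, float, int]:
    """Detect -> rewrite -> retest until the score drops below the threshold.

    Returns the final draft, its score and the number of rewrites used.
    """
    score = detect(draft)
    rounds = 0
    while score >= threshold and rounds < max_rounds:
        draft = rewrite(draft)
        score = detect(draft)  # retest after every rewrite
        rounds += 1
    return draft, score, rounds


# Stand-in functions so the sketch runs on its own.
def fake_detect(text: str) -> float:
    return 0.9 if "In conclusion" in text else 0.2


def fake_rewrite(text: str) -> str:
    return text.replace("In conclusion,", "So, what's the takeaway?")


final, score, rounds = humanize_loop(
    "In conclusion, test everything.", fake_detect, fake_rewrite
)
print(final, score, rounds)
```

Capping the number of rounds keeps the loop from running forever when a draft never clears the threshold. At that point a manual edit is usually the better move.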

Final Thoughts:

Human-sounding AI content is vital for audience trust (readers, clients, professors, search engines).

“AI-like” content not only risks detection by AI tools but also jeopardizes your credibility. But don’t sweat it! NetusAI humanizer and smart rewriting help satisfy algorithms and readers.

FAQs

1. Why does my AI-generated content keep getting flagged as “AI”?

Because detectors don’t care about your topic; they care about patterns. AI-written content with low perplexity, flat burstiness or LLM-matching stylometric signals will be flagged, regardless of accuracy.

2. Is paraphrasing enough to fool AI detectors?

Not anymore. Basic paraphrasers just swap words. Detectors now analyze structure, rhythm and tone. If your sentences still follow AI-like pacing and syntax, you’ll still fail the check.

3. What’s the fastest way to humanize AI content?

Platforms like NetusAI merge detection and humanization into a closed loop. This eliminates context-switching hell, letting you scan, rewrite and rescan iteratively until authenticity sticks.

4. Does changing tone help beat detectors?

Yes. A conversational, varied or emotionally resonant tone can reduce AI detection scores. Detectors often flag content that sounds flat, overly structured or generic.

5. How important is sentence variety for avoiding AI flags?

Very important. Human writers naturally mix short and long sentences. AI tends to write in uniform blocks. Adding burstiness (sentence length variation) is one of the quickest ways to humanize your content.

6. Can Grammarly or other grammar tools help?

Not really, and they can backfire. Tools like Grammarly enforce robotic uniformity: flattened tone, predictable syntax and error-free monotony, all triggers for false positives.

7. Do AI detectors look at factual accuracy?

No. AI detectors don’t check facts. They only analyze writing style, structure and statistical patterns that match known LLM outputs.

8. How many times should I rewrite before testing again?

Ideally, retest after every significant rewrite. The safest workflow is:

Write → Detect → Rewrite → Retest → Repeat until clean.

9. Can non-native English speakers get falsely flagged?

Yes. When non-native writers use rigid structures or excessive formality, they inadvertently mimic AI “tells.” Tools specializing in organic rhythm and tonal variance become critical for bridging this gap.