You perfected your essay overnight, yet an AI detector still flagged it as “Likely Written by AI.” What a letdown! The same thing happens to marketers tweaking web copy, bloggers crafting listicles and even novelists posting excerpts online.

What’s going on? AI detectors like ZeroGPT aren’t mind readers; they don’t care who wrote the text. Instead, they judge how it’s written.

Predictable, uniform or “machine-clean” sentences lead algorithms to assume AI authorship, regardless of human effort.

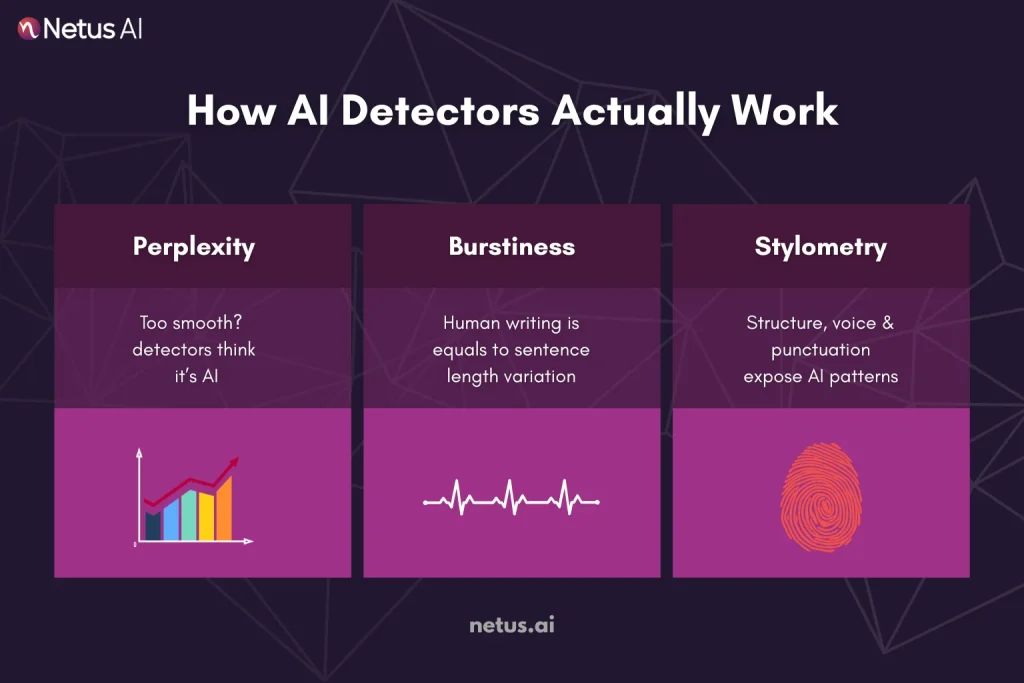

How Do AI Detectors Actually Work?

Think of detectors as forensic linguists, not watermark scanners. They statistically profile your writing against LLM fingerprints. Three metrics dominate the assessment:

Perplexity

Perplexity, a “surprise meter,” gauges how predictable each next word is to a language model. LLM prose tends to be highly predictable, so it scores low on perplexity, and detectors treat low scores as a sign of AI. Humans can raise perplexity by adding colloquialisms, rhetorical questions or abrupt tonal shifts to their writing.
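To make the “surprise meter” concrete, here is a minimal sketch of perplexity under a toy unigram model with add-one smoothing. Real detectors score text with a large language model's token probabilities; the toy model, the `unigram_perplexity` name and the tiny reference corpus are assumptions made purely for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def unigram_perplexity(text, reference):
    """Perplexity of `text` under a unigram model fit on `reference`.

    Uses add-one (Laplace) smoothing so unseen words get a small,
    non-zero probability. Lower values mean more predictable text.
    """
    ref_counts = Counter(tokenize(reference))
    vocab_size = len(ref_counts) + 1  # +1 bucket shared by all unseen words
    total = sum(ref_counts.values())

    tokens = tokenize(text)
    if not tokens:
        raise ValueError("text contains no word tokens")

    log_prob = 0.0
    for tok in tokens:
        p = (ref_counts[tok] + 1) / (total + vocab_size)
        log_prob += math.log(p)

    # Perplexity = exp of the average negative log-probability per token.
    return math.exp(-log_prob / len(tokens))


# Toy reference corpus (an assumption; a real model is trained on far more text).
reference = "the cat sat on the mat and the dog sat on the rug"
predictable = "the cat sat on the mat"
surprising = "quantum marmalade sat on the volcano"

print(unigram_perplexity(predictable, reference))
print(unigram_perplexity(surprising, reference))
```

The second sentence scores higher because several of its words never appear in the reference text. That is the same effect an unexpected idiom or tonal shift has on a real detector's score, only at a much smaller scale.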

Burstiness

Humans use varied sentence lengths (burstiness), creating a natural rhythm. AI-generated text often has uniform sentence lengths, which detectors flag as machine-made.
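Burstiness has no single agreed formula, so treat the following as a rough sketch: it measures variation in sentence length as the standard deviation divided by the mean. The function names and the naive sentence splitter are assumptions, not the method of any particular detector.

```python
import re
import statistics


def sentence_lengths(text):
    """Split on ., ! or ? and return the word count of each sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text):
    """Coefficient of variation of sentence length (std dev / mean).

    0.0 means every sentence has the same length; higher means more varied.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


uniform = "The report is due today. The team is ready now. The plan is set here."
varied = "Wait. The report that we promised the client last month is finally due today. Ready?"

print(sentence_lengths(uniform), burstiness(uniform))
print(sentence_lengths(varied), burstiness(varied))
```

The uniform sample has three five-word sentences and scores 0.0, while the varied sample mixes one-word fragments with a thirteen-word sentence and scores above 1.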

Stylometry

Stylometry profiles how you write: word choice, punctuation habits and sentence structure. If your writing is consistently clear and formal, the way big AI models tend to write, it can get flagged as AI-generated by accident.

Top Reasons Human Writing Gets Flagged

AI detectors don’t single out plagiarists; they flag patterns. Unfortunately, many perfectly legitimate writing habits overlap with those patterns. Here’s where real authors accidentally look like bots.

1. Over-Polished Grammar

Leaning too heavily on grammar checkers like Grammarly can polish away the small quirks that mark human writing. The result sounds a bit too perfect, which can trick AI detectors into thinking a machine wrote it, not a human!

2. Rigid Essay or Blog Structures

AI detectors flag symmetrical writing with standard transitions, a pattern common in large language model output. Human writing, even formal writing, tends to be less rigidly structured.

3. Sentence-Length Uniformity

Keeping every sentence the same length (say, 18-20 words) reduces “burstiness,” a human characteristic. Humans vary sentence length, mixing fragments with longer sentences. This variety signals human authorship, while uniformity raises suspicion.

4. Template-Driven SEO and Copy Tools

AI content tools create tidy drafts but inject repetitive phrasing and predictable keyword placement, which detectors quickly identify.

5. Non-Native Writers Playing It Safe

Many ESL authors adopt “safe” grammar and vocabulary to avoid mistakes. Ironically, that restraint can mimic AI’s controlled output. Mixing in idioms or personal voice helps re-balance the signal.

6. Heavy Reuse of Stock Phrases

“In today’s fast-paced world” or “It is important to note that” appear all over LLM training data. Sprinkle them too liberally and detectors assume bot origin.
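A quick self-check for stock phrases is easy to script. This is a minimal sketch: the `STOCK_PHRASES` watch list and the function names are hypothetical, and you would extend the list with whatever fillers you tend to overuse.

```python
import re

# Hypothetical watch list; extend it with phrases you tend to overuse.
STOCK_PHRASES = [
    "in today's fast-paced world",
    "it is important to note that",
    "in conclusion",
]


def normalize(text):
    """Lowercase, straighten curly apostrophes and collapse whitespace."""
    text = text.lower().replace("\u2019", "'")
    return re.sub(r"\s+", " ", text)


def count_stock_phrases(text, phrases=STOCK_PHRASES):
    """Return {phrase: count} for every listed phrase found in `text`."""
    clean = normalize(text)
    counts = {p: clean.count(p) for p in phrases}
    return {p: n for p, n in counts.items() if n > 0}


draft = (
    "In today\u2019s fast-paced world, writers juggle deadlines. "
    "It is important to note that editing takes time. "
    "It is important to note that tools can help."
)
print(count_stock_phrases(draft))
```

Any phrase that shows up more than once in a short draft is a good candidate for a rewrite in your own words.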

False Positives: How Often Does Human Writing Get Tagged as AI?

False positives from AI detection are an increasing problem for schools, publishers and corporate communications.

Turnitin’s Own Numbers

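Turnitin has put the sentence-level false positive rate of its AI detector at roughly 4%, with a document-level rate under 1%. Even at those rates, human-written work gets flagged at scale, which leads to real grading disputes.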
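A false positive rate of around 4% sounds small until you multiply it out. The sketch below is plain arithmetic, not Turnitin’s method: the function names, the 1,000-item workload and the assumption that each item is flagged independently are all illustrative.

```python
def expected_false_flags(n_items, false_positive_rate):
    """Expected number of human-written items wrongly flagged as AI."""
    if not 0.0 <= false_positive_rate <= 1.0:
        raise ValueError("false_positive_rate must be between 0 and 1")
    return n_items * false_positive_rate


def chance_of_any_false_flag(n_items, false_positive_rate):
    """Probability that at least one of n independent items is wrongly flagged."""
    return 1.0 - (1.0 - false_positive_rate) ** n_items


# Hypothetical workload: 1,000 human-written items at a 4% false positive rate.
print(expected_false_flags(1000, 0.04))

# Hypothetical case: 25 human-written items checked at the same rate.
print(round(chance_of_any_false_flag(25, 0.04), 2))
```

At that rate, about 40 of every 1,000 human-written items would be flagged, and a batch of 25 items is more likely than not to contain at least one false flag.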

Reddit & Student Forum Stories

Online communities are flooded with examples:

- A graduate student’s meticulously cited thesis flagged at 92% “AI.”

- A freelance blogger’s SEO article rejected by a client because ZeroGPT called it bot-generated.

- Multiple ESL writers shared that their “safest” English gets flagged more often than their casual drafts.

Why the Trend Is Rising

- Detectors are tightening. As more LLM-generated text floods the web, algorithms raise their sensitivity to stay ahead.

- Writing tools push uniformity. Grammarly, SEO optimizers and outline generators herd writers toward the same “perfect” structures.

- Stylometric drift. Over time, even human writing mimics AI outputs because we read so much AI-generated content online.

How to Avoid Getting Flagged

Detectors judge patterns, not intentions, so the antidote is to introduce healthy unpredictability without sacrificing clarity. Below are field-tested tweaks that raise the “human” signal while keeping your ideas front and center.

1. Draft in Layers, Not in One Pass

Start with a skeletal outline → expand into raw paragraphs → revise for voice and rhythm. This layered method forces you to recompose phrasing and break predictable patterns, avoiding the robotic first-draft trap.

2. Vary Sentence Rhythm on Purpose

Follow a long, winding sentence with a crisp, five-word punch. Mix simple statements with compound or rhetorical questions. This up-and-down cadence boosts burstiness, the metric detectors expect from real people.
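If you want to find the flat stretches before you start rewriting, a short script can point at them. This is a rough sketch under stated assumptions: the `monotone_runs` name, the two-word tolerance and the three-sentence minimum are arbitrary illustrative choices, and the sentence splitter is deliberately naive.

```python
import re


def split_sentences(text):
    """Naive splitter: break after ., ! or ? followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def monotone_runs(text, tolerance=2, min_run=3):
    """Find runs of consecutive sentences with near-identical word counts.

    Returns (start_index, end_index) pairs, inclusive, for each run of at
    least `min_run` sentences in which each sentence's length is within
    `tolerance` words of the one before it.
    """
    lengths = [len(s.split()) for s in split_sentences(text)]
    runs, start = [], 0
    for i in range(1, len(lengths) + 1):
        run_ends = i == len(lengths) or abs(lengths[i] - lengths[i - 1]) > tolerance
        if run_ends:
            if i - start >= min_run:
                runs.append((start, i - 1))
            start = i
    return runs


draft = (
    "We met the team on Monday morning. "
    "We reviewed the plan for next quarter. "
    "We agreed on the budget that day. "
    "Done. "
    "Then everything changed overnight when the client called with a "
    "completely different idea for the launch."
)
print(monotone_runs(draft))
```

Here the first three sentences are seven words each, so they are reported as one run (sentences 0 through 2). The one-word “Done.” and the long closing sentence break the pattern, which is exactly the kind of variation this tip asks for.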

3. Swap Template Transitions for Real Voice

Replace “In conclusion,” with something authentic to your style: “Big picture?” or “Bottom line?” Even a small switch disrupts the template patterns baked into large language models.

4. Sprinkle in Low-Stakes Imperfections

Subtle human elements like contractions, parenthetical asides and informal phrasing increase perplexity, signaling human authorship.

5. Check Tone Alignment

Tailor your writing style: use formal diction for academic papers but loosen structure; for blogs, embrace conversational energy. Mismatched tone and rigid structure often resemble AI-generated content.

6. Run a Pre-Submission Scan, Then Targeted Rewrite

Before you hit submit, run your text through a detector to catch any flagged bits and rewrite them. Tools like NetusAI let you detect, rewrite and retest on the fly until your writing sounds totally human.

7. Keep Your Own “Voice Bank”

Maintain a personal list of favorite phrases, metaphors or storytelling habits. Sprinkling them into drafts instantly sets your writing apart from generic AI cadence.

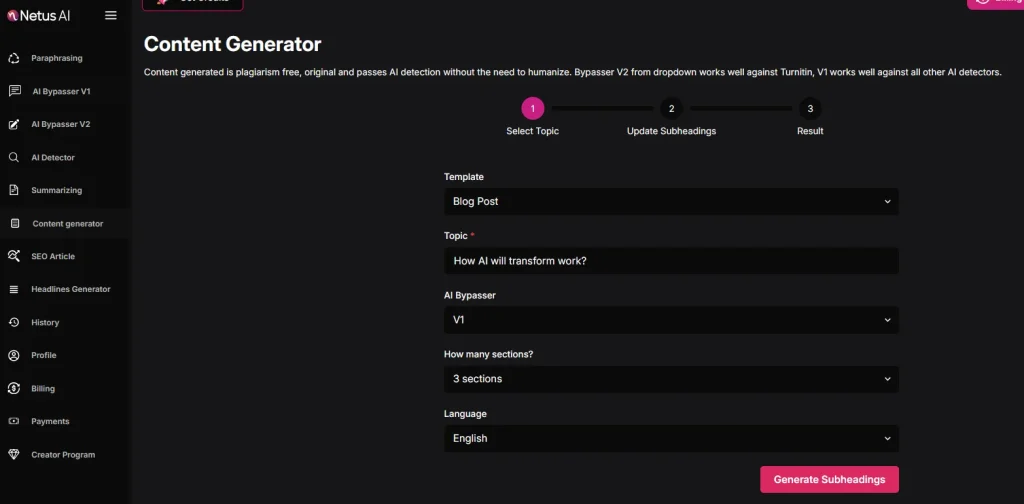

Plagiarism-Free Content Generator

NetusAI’s Content Generator is a tool designed to produce original, plagiarism-free content. It provides users with a straightforward way to create written material.

You can access, download and copy your generated content immediately after creation, which makes it easy to pop it right into your projects. NetusAI also keeps a detailed history of everything you’ve made.

That way, you can always go back and see what you’ve done, track your progress or grab an old version if you need it. Basically, you’re in full control of your content, from getting it now to looking back at it later.

Final Thoughts

AI detectors, though improving, still use algorithms that count sentence lengths, punctuation and word prediction. This means human writers risk being flagged as AI unless they add distinct human elements to their writing.

The goal isn’t sloppier prose; it’s authentic cadence. When clarity meets personality, detectors back off and real readers lean in. Write with NetusAI and your work stays yours, recognized as human by both people and the algorithms watching in the background.

FAQs

1. Why does my completely original essay still get marked as AI?

Because detectors rely on pattern recognition, not authorship. If your essay is predictable, uniform in rhythm or very polished, it can match the statistical profile of AI text even though you wrote every word.

2. Does using Grammarly make my writing more likely to be flagged?

It can. Excessive grammar correction and over-polishing strip out natural writing quirks, which lowers perplexity, a key metric for AI detection.

3. Will adding random typos fool the system?

Not really. AI detectors assess statistics, sentence flow, passive voice and style rather than spelling, so random typos do little to change the result.

4. How can non-native English speakers avoid false positives?

Non-native English speakers can reduce false positives by varying sentence structure to improve “burstiness” and by mixing in idioms or personal voice.

5. Is paraphrasing enough to beat AI detectors?

Usually not. Swapping words leaves the underlying patterns intact. Vary sentence structure and rhythm, and inject your personal voice instead.

6. Are all detectors equally strict?

No, AI detectors vary. A draft that passes one may fail another, so testing across multiple tools is wise for important writing.

7. Does passive voice trigger flags?

It can. AI outputs often lean on passive voice, which reduces variety. A human-like style balances active and passive constructions for clarity.

8. Can ChatGPT pass detection if I keep tweaking the prompt?

Prompt engineering helps a little, but the model’s predictable structures often still need manual or tool-assisted humanization after generation.

9. What’s an easy DIY way to raise “burstiness”?

Read your draft aloud. Vary pacing with questions, echoes or longer sentences to improve burstiness.

10. Is it cheating to use AI humanizer tools?

AI humanizers are ethical tools, like grammar checkers, for refining tone and rhythm. Concealing AI involvement is unethical only when human originality is required.