You’ve spent hours crafting a thoughtful, well-researched article. It flows effortlessly, sounds polished and genuinely helps readers. But the second you drop it into an AI detector like ZeroGPT, the screen lights up with a big red flag: “AI-generated.”

Sound familiar?

AI detectors look for predictable patterns and writing that resembles machine output, not creativity or research. That means they can flag your writing even when there’s nothing wrong with it.

When human writing gets flagged as AI content, writers pay the price: SEO drops, unfair plagiarism accusations and eroded reader trust on platforms like Medium. And prompting an AI to sound “human-like” isn’t a foolproof fix either.

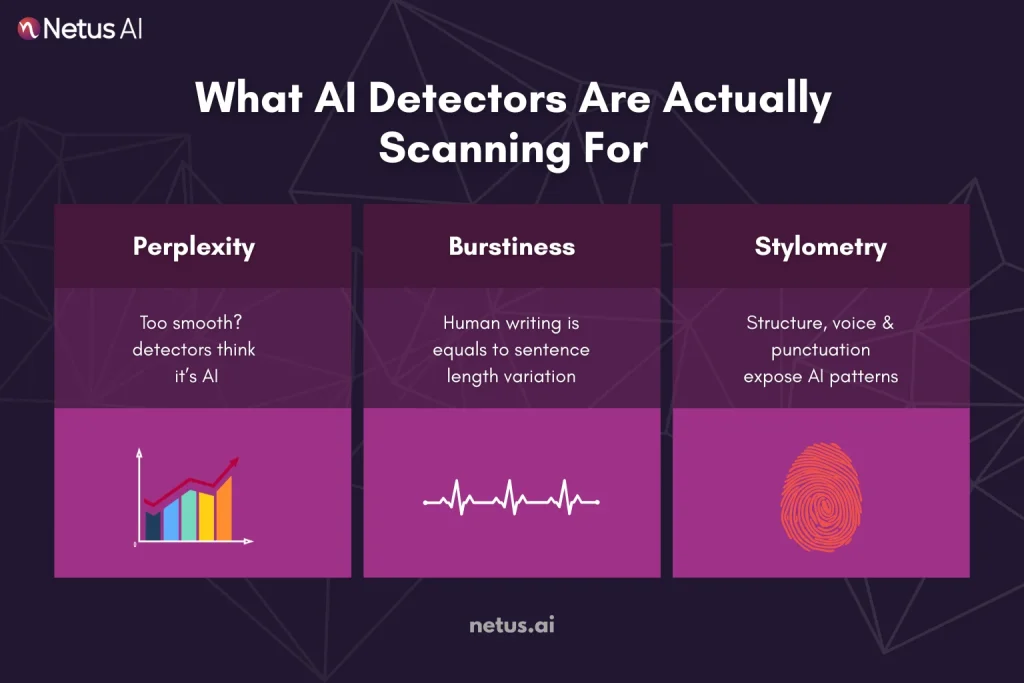

What Are AI Detectors Actually Scanning For?

AI-detection tools claim to identify machine-generated text instantly. They don’t look at who wrote it or at metadata like login history. Instead, they analyze the text itself using three main algorithmic signals.

Perplexity: Measuring Predictability

At its core, perplexity is a measurement of how surprising each word in your sentence is, based on what came before it. If a language model expects the next word with high confidence, perplexity is low. If it’s genuinely surprised by the next word, perplexity is high.

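AI-generated text tends to score low on perplexity: it’s smooth and predictable, without the small surprises of human writing. That’s also why polished human writing can be flagged. The more conventional and “perfect” it is, the more predictable it looks to the model.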
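To make the definition concrete: for a text of N tokens, perplexity is usually computed as exp(−(1/N) · Σ log p(wᵢ | w₁…wᵢ₋₁)), the exponential of the average negative log-probability the model assigned to each word that actually appeared. The minimal sketch below assumes you already have those per-token probabilities (real detectors get them from their own language models, which aren’t shown here). The probability lists are made-up illustrations, not output from ZeroGPT or any other tool.

```python
import math

def perplexity(token_probs):
    """Perplexity from the probability a model gave each token that actually appeared.

    token_probs[i] stands for p(w_i | w_1 ... w_{i-1}) and must be in (0, 1].
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    # Average negative log-likelihood per token (natural log): the mean "surprise".
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    # Exponentiate to get back to probability scale (an effective branching factor).
    return math.exp(avg_nll)

# Made-up probabilities for illustration, not output from any real detector.
predictable = [0.9, 0.8, 0.95, 0.85]  # the model was rarely surprised
surprising = [0.2, 0.05, 0.4, 0.1]    # the model was often surprised

print(round(perplexity(predictable), 2))  # 1.15
print(round(perplexity(surprising), 2))   # 7.07
```

A perplexity near 1 means the model was almost never surprised; higher values mean more surprise. That is the sense in which low-perplexity text reads as “predictable.”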

Burstiness: Sentence Variety

Burstiness measures variation in sentence length and structure.

- Humans write with natural rhythm. We ramble. We pause. We mix short sentences with long ones.

- AI, by default, tends to write with a steady, uniform rhythm unless prompted otherwise.

Detectors like ZeroGPT and HumanizeAI use burstiness scores to judge how closely your sentence flow resembles human writing.

Too uniform = suspicious.

Plenty of variation = probably human.
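Detectors don’t publish their exact burstiness formulas, so here is a minimal sketch using one common proxy: the coefficient of variation of sentence length (standard deviation divided by mean). The naive sentence splitter and both sample texts are assumptions for illustration; ZeroGPT and HumanizeAI likely use something more sophisticated.

```python
import re
import statistics

def sentence_lengths(text):
    """Word count per sentence, splitting naively after '.', '!' or '?'."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]

def burstiness(text):
    """Coefficient of variation of sentence length (population stdev / mean).

    0.0 means every sentence has the same length; larger values mean more variety.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # a single sentence has no variation to measure
    return statistics.pstdev(lengths) / statistics.mean(lengths)

uniform = "The tool scans text. The model finds patterns. The score goes up."
varied = ("I wrote this at midnight. Honestly? It took three drafts, two coffees, "
          "and one long argument with my editor before it finally worked.")

print(sentence_lengths(uniform), round(burstiness(uniform), 2))  # [4, 4, 4] 0.0
print(sentence_lengths(varied), round(burstiness(varied), 2))    # [5, 1, 17] 0.89
```

Dividing by the mean keeps the score comparable between writers who favor long sentences and writers who favor short ones; only the variation matters.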

Stylometric Patterns: Your Writing’s DNA

Beyond raw scores, detectors also tap into stylometry, the analysis of your writing “style” based on:

- Word frequency

- Punctuation habits

- Average sentence length

- Passive voice usage

- Syntax trees

Stylometric models are reported to identify LLM-generated content with up to 90% accuracy through this kind of statistical fingerprinting. The catch: even good human writing can get flagged when its fingerprint happens to resemble a model’s.
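A few of the features listed above are easy to compute yourself. The hypothetical `stylometric_profile` function below measures average sentence length, punctuation habits and word frequency using only Python’s standard library. Passive voice and syntax trees need a real syntactic parser, so they are left out. It’s a sketch of the kind of fingerprint stylometry builds, not how any particular detector works.

```python
import re
from collections import Counter

PUNCTUATION = ",;:!?()"  # marks to track; periods are skipped because every sentence has one

def stylometric_profile(text, top_n=3):
    """Return a small stylometric fingerprint of `text` (illustrative only)."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    words = re.findall(r"[a-z']+", text.lower())
    n_words = max(len(words), 1)  # guard against division by zero on empty input

    # Punctuation habits, normalized per 100 words so texts of any length compare fairly.
    marks = Counter(ch for ch in text if ch in PUNCTUATION)
    punctuation_per_100 = {ch: round(count * 100 / n_words, 1) for ch, count in marks.items()}

    return {
        "avg_sentence_length": len(words) / max(len(sentences), 1),
        "punctuation_per_100_words": punctuation_per_100,
        # Most frequent words; ties keep first-seen order.
        "top_words": Counter(words).most_common(top_n),
    }

sample = "Honestly, I didn't expect this. The draft was fine; the detector wasn't. Why? Nobody knows."
profile = stylometric_profile(sample)
print(profile["avg_sentence_length"])        # 3.75
print(profile["punctuation_per_100_words"])  # {',': 6.7, ';': 6.7, '?': 6.7}
print(profile["top_words"])                  # [('the', 2), ('honestly', 1), ('i', 1)]
```

Comparing profiles like this across many texts is what lets a model spot a “house style,” whether it belongs to a person or to an LLM.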

How Do Even Honest Writers Get Caught?

One of the biggest frustrations with AI detection in 2025 is seeing well-written, carefully researched articles flagged as AI-generated anyway.

This happens not because your work lacks a human touch, but because AI detectors aren’t measuring creativity. They’re measuring patterns.

The False Positive Problem

AI detectors aren’t perfect. And while their false-positive rates have improved, they still flag plenty of human-written content, especially when it’s:

- Highly structured

- Too consistent

- Clean and grammar-optimized

- Written by non-native English speakers

According to HumanizeAI, even its latest model can misclassify up to 1 in 100 human-written texts. That might sound low until you consider how many essays, blogs and emails are scanned every day.
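The arithmetic behind “that might sound low” is worth spelling out. Taking the worst-case 1-in-100 figure at face value, the sketch below estimates how many human texts get wrongly flagged at a given volume, and how likely one writer is to be flagged at least once across several submissions. The 100,000-text volume and the 20-submission count are hypothetical, and the second calculation assumes each check is independent.

```python
def expected_false_flags(n_texts, false_positive_rate):
    """Average number of human-written texts wrongly flagged."""
    return n_texts * false_positive_rate

def chance_flagged_at_least_once(n_submissions, false_positive_rate):
    """Probability that at least one of a writer's submissions is wrongly flagged.

    Assumes every check is independent, which real-world checks may not be.
    """
    return 1 - (1 - false_positive_rate) ** n_submissions

FPR = 0.01  # "up to 1 in 100", the worst case quoted above

print(expected_false_flags(100_000, FPR))               # 1000.0
print(round(chance_flagged_at_least_once(20, FPR), 3))  # 0.182
```

In other words, a 1% error rate still means about 1,000 false accusations per 100,000 human-written texts, and roughly an 18% chance that a writer with 20 submissions gets wrongly flagged at least once.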

Turnitin has acknowledged this as well, stating:

“AI writing indicators should not be used alone to make accusations of misconduct.”

Non-Native Writers Get Hit Hardest

One of the most common sources of false flags?

Non-native English writers.

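Non-native writers often rely on common vocabulary and textbook sentence structures. That makes their text more predictable (lower perplexity), so even well-written work can look statistically similar to LLM output and get flagged as AI-generated. This poses a significant problem for students and freelancers.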

The Hybrid Content Dilemma

Hybrid content, where a human builds on or edits AI-assisted drafts, is even trickier. Human-written text can be flagged if around 20-30% of it resembles large language model output. And Turnitin’s vague probability scores don’t help much here, unlike Quillbot’s more detailed breakdown.

When “Good” = “Too Clean”

AI detectors may flag highly coherent, logical, and rhythmic writing as AI-generated. So you’re stuck: write perfectly and risk getting flagged, or write a bit messier and look unprofessional.

How Do You Humanize Without Compromising Quality?

So, what does “human enough” really mean?

- It’s not about perfect grammar.

- It’s not about complex vocabulary.

And it’s definitely not about writing like Shakespeare.

AI detectors tend to read randomness, inconsistency, and emotional tone as signs of human writing.

Here’s what that looks like in practice:

1. Varied Sentence Lengths:

Natural writing varies in sentence length, making it less predictable.

2. Tone Shifts:

Human writing varies in mood, humor, and dramatic effect, which detectors recognize as a sign of authenticity.

3. Imperfect Transitions:

Let your transitions wander a little, the way people actually think, instead of leaning on the templated connectors AI favors, such as “Moreover,” “Furthermore” and “In conclusion.”

4. Semantic Noise (In a Good Way):

Because detectors can flag even clean writing, a little personality helps. Asides, filler phrases and the occasional deliberate grammar break signal human authorship better than flawless prose does.

Content Types That Are Most Vulnerable

Some content types are easy for AI to mimic, which makes them more likely to be flagged.

Here’s what to watch out for:

Essays

Academic essays, with their predictable intro-argument-evidence-conclusion structure, are easy for AI to imitate, so detectors readily label them AI-written. Ironically, even human-written essays can be flagged for being “too clean.”

Product Reviews

Automated detectors quickly flag generic praise like “great quality” or “works as expected,” especially when many reviews repeat the same patterns.

Testimonials

Like product reviews, testimonials often lack specific detail. If your testimonial sounds like a bot would have written it, it’ll likely be flagged like one.

Long-form Blog Posts

AI is pretty good at mimicking blog tone, especially when blogs are structured around SEO headers and keyword density. Clean structure + low personality = high detection risk.

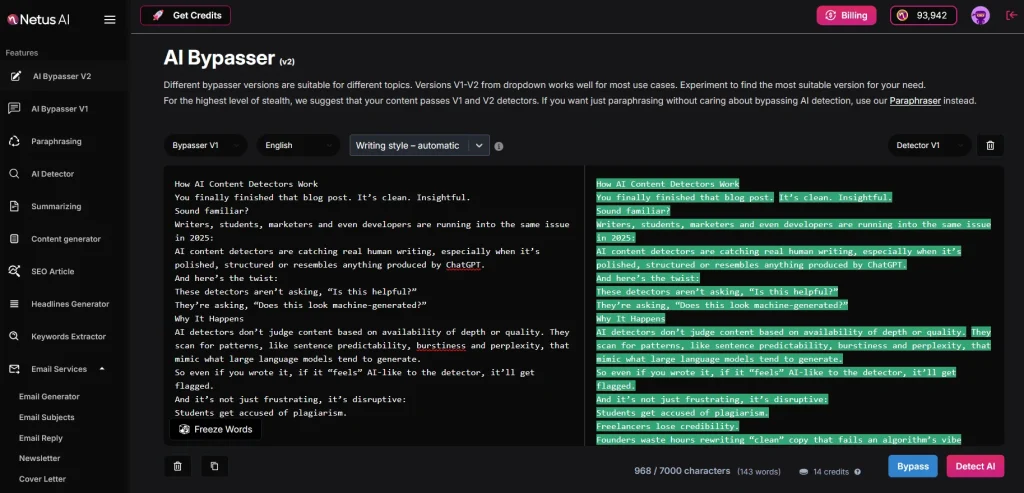

The Solution: Write → Test → Rewrite

This is where smart creators now work with a feedback system.

With a tool like the NetusAI bypasser, you can:

- Scan your content in real-time

- See which parts are triggering red flags

- Rewrite just those blocks using AI bypass engines

- Rescan until you hit “Human”

Choose from two rewriting engines: one for fixing sensitive paragraphs and one for polishing full sections.

Instead of guessing, you’re testing, which spares you much of the pain of false positives.

Ethics: Is Humanizing AI Writing Cheating?

Let’s clear the air: using AI doesn’t automatically mean you’re cutting corners. But how you use it does matter.

AI is a Tool, Not a Ghostwriter

The line between assistance and deception lies in intent. If you’re feeding ideas into AI, editing its suggestions and shaping it with your voice, you’re still the author. Just faster.

Bypassing ≠ Cheating (When Done Right)

Running your text through a humanizer like NetusAI isn’t cheating if:

- You wrote the ideas

- You edited the flow

- You ensured the content feels real

That’s not deception. That’s refinement.

The Final Product Is Still Yours

Using AI tools like Grammarly to improve your writing isn’t unethical. Humanizing tools simply help you avoid false flags while keeping your message intact. If AI helps you express your own thoughts, you’re adapting, not cheating.

Final Thoughts

AI detectors assess writing by analyzing patterns, not ideas. This can lead to original content being wrongly flagged as AI-generated due to surface-level similarities.

So how do smart writers stay ahead?

They don’t just write. They revise. They self-check. And they use tools like NetusAI to push their writing through a smarter loop, one that enhances authenticity rather than hiding it.

Detection-aware tools help you write clear, confident, high-quality, undetectable content that reflects your unique voice.

FAQs

1. Why was my writing flagged even though I wrote it myself?

AI detectors don’t detect authorship; they detect patterns. Even original human writing can get flagged if it’s too predictable, too formal or lacks personal nuance.

2. Do tools like Grammarly trigger detection?

They can. Heavily polished or robotic-sounding text can look AI-generated, whichever tool produced it. This is especially true when many people use the same tool and end up with similar phrasing.

3. What’s the difference between paraphrasing and humanizing?

- Paraphrasing = swaps words, keeps structure

- Humanizing = changes tone, rhythm, sentence variety and intent

Tools like NetusAI go beyond paraphrasing to actually rework your text like a real editor would.

4. Can someone tell if content has been bypassed?

It’s difficult. NetusAI rephrases AI-generated content into human-like text, adds a human touch and lets you fine-tune the result, all of which makes detection hard.

5. Does NetusAI guarantee full protection from all detectors?

No tool can promise that for every detector. What NetusAI provides is instant detector feedback, so you can keep refining content until it earns a “Green Signal” (human-sounding). You stay in control of the process; NetusAI helps your work get past detection.

6. Why do long-form articles get flagged more often?

Longer pieces give detectors more text to analyze, so patterns add up. Repetitive phrasing, recurring sentence structures or a lack of personal insight can get even clean writing flagged.

7. How do I know if a sentence “sounds too AI”?

Ask yourself:

- Would I say this out loud?

- Does this sentence feel overly robotic or too polished?

If it lacks rhythm, emotional beats or natural imperfections, it’s probably too AI-like. Reading aloud is your best test.

8. Can I use AI writing tools safely without getting flagged?

Use AI for structure and drafts, not as a ghostwriter. Revise with your unique voice and experiences. NetusAI helps refine AI-like phrasing.

9. Will bypassed content still pass plagiarism checks?

Yes, if it’s properly rewritten with a tool like NetusAI that regenerates structure, tone, and flow to reduce similarity. Still, always run a plagiarism scan to double-check.

10. Are AI detectors always accurate?

No. Detectors make statistical guesses rather than judgments based on comprehension, so they can flag even human writing. The key is to beat the pattern, not the detector.