Stylometry goes far beyond surface-level word scanning: it reverse-engineers your unique writing DNA.

These statistical signatures form an identifiable profile, one that AI detectors mine to label content “machine-made.”

When writing is deemed synthetic, credibility, organic traffic and authority can erode quickly as audiences question its authenticity.

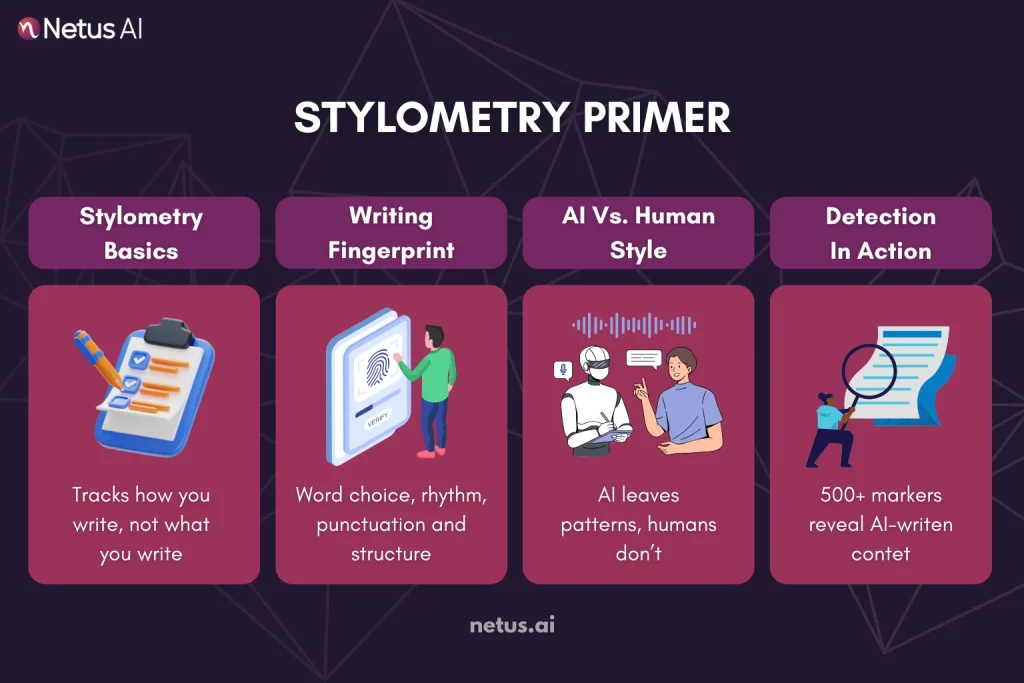

What Is Stylometry? A 90-Second Primer

Think of stylometry as forensic linguistics for text. It’s the science of quantifying how you write, not what you write.

Style + Measurement = Stylometry

The Core Principle:

Your writing possesses a distinct “fingerprint” defined by word choices, sentence rhythm, punctuation and structural habits.

Stylometry analyzes 500+ subtle markers like these, building a statistical profile of your style. AI models (like GPT-4) have their own “fingerprints”: patterns humans rarely replicate. Stylometry spots these.

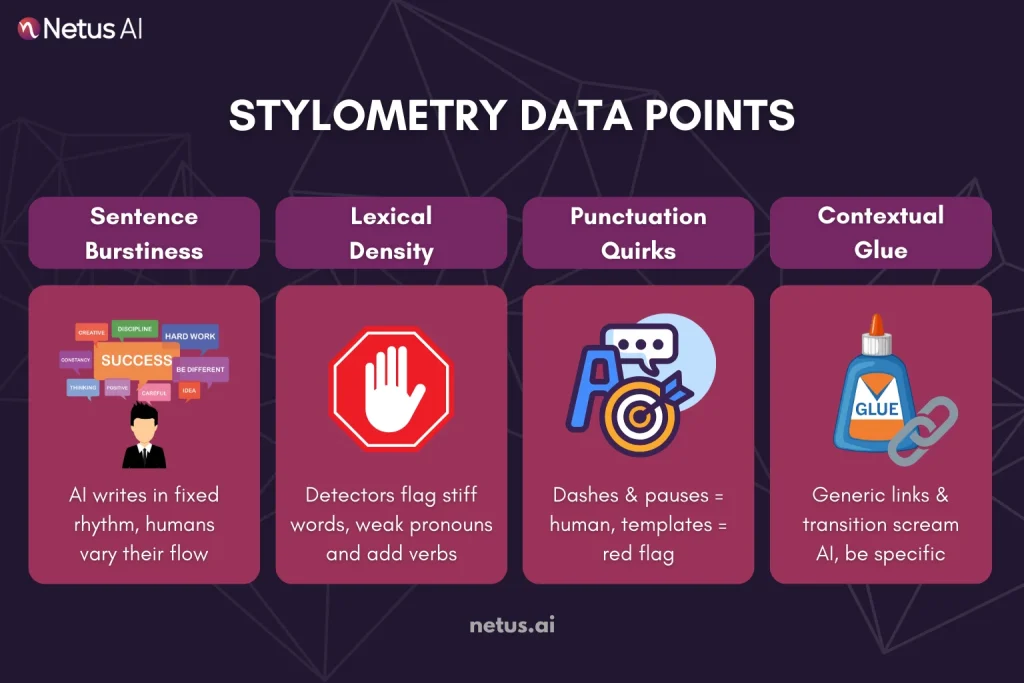

The Data Points Detectors Actually Measure

Stylometry doesn’t “read” meaning; it crunches numbers. Here’s what AI detectors scan to fingerprint your writing:

A. Sentence Burstiness (The Rhythm Trap)

- What it is: Variance in sentence length and structure.

- Human: Mixes short punchy lines (“See?”) with complex, clause-heavy sentences.

- AI red flag: Uniform 12–18 word sentences → low burstiness.

Example:

Robotic: “The algorithm processes data. It generates output. Results are analyzed.”

Human: “Boom, the algorithm crunches data. Then? It generates output, but here’s the twist: results need human analysis.”
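
To make the rhythm idea concrete, here is a minimal Python sketch that scores burstiness as the coefficient of variation of sentence length (standard deviation divided by mean). That metric and the `burstiness` helper are assumptions for illustration; commercial detectors do not publish their exact formulas.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Split on ., ! and ? and return the word count of each sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (assumed metric)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # variation is undefined for a single sentence
    return statistics.pstdev(lengths) / statistics.mean(lengths)


robotic = "The algorithm processes data. It generates output. Results are analyzed."
human = ("Boom, the algorithm crunches data. Then? It generates output, "
         "but here's the twist: results need human analysis.")

print(round(burstiness(robotic), 2), round(burstiness(human), 2))
```

On the two samples above, the human version scores noticeably higher than the robotic one, because its sentence lengths swing from one word to eleven.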

B. Lexical Density (Word Choice Grids)

- What it measures: Frequency of:

  - Filler words (however, furthermore)

  - Rare vs. common vocabulary (utilize vs. use)

  - Pronouns (I, we) vs. passive voice

- AI red flag: Overly formal diction + missing colloquial spikes.
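
Word-choice markers like these reduce to simple counts. The sketch below computes three rates in Python; the word lists and the `lexical_profile` function are illustrative assumptions, not the feature set of any particular detector.

```python
import re

# Illustrative word lists (assumptions); real tools track far larger sets.
TRANSITIONS = {"however", "furthermore", "therefore", "additionally", "moreover"}
FIRST_PERSON = {"i", "we", "me", "us", "my", "our"}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and keep alphabetic words (with apostrophes)."""
    return re.findall(r"[a-z']+", text.lower())


def lexical_profile(text: str) -> dict[str, float]:
    """Return vocabulary variety plus transition and pronoun rates."""
    words = tokenize(text)
    total = len(words) or 1  # avoid division by zero on empty input
    return {
        "type_token_ratio": len(set(words)) / total,
        "transition_rate": sum(w in TRANSITIONS for w in words) / total,
        "first_person_rate": sum(w in FIRST_PERSON for w in words) / total,
    }


formal = "However, the system is utilized. Furthermore, results are obtained."
casual = "We use it, and I like the results."
print(lexical_profile(formal))
print(lexical_profile(casual))
```

The formal sample shows a high transition rate and no first-person pronouns; the casual one shows the reverse.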

C. Punctuation & Structural Quirks

- Tracked:

  - Comma/dash/semicolon ratios

  - Paragraph opener patterns (e.g., always starting with a transition)

  - Bullet/list uniformity (identical formatting = machine-made)

- Human advantage: Unpredictable pauses (…), dashes or fragments.
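
Punctuation ratios are equally easy to approximate. This hedged sketch counts a few marks per 100 words; the choice of marks and the per-100-words normalization are assumptions made for the example.

```python
# Marks to track, written as escapes where the character is easy to misread.
MARKS = {
    "comma": ",",
    "semicolon": ";",
    "em_dash": "\u2014",
    "ellipsis": "\u2026",
}


def punctuation_profile(text: str) -> dict[str, float]:
    """Return each mark's frequency per 100 words."""
    words = len(text.split()) or 1  # avoid division by zero on empty input
    normalized = text.replace("...", "\u2026")  # count three dots as one ellipsis
    return {name: 100 * normalized.count(mark) / words
            for name, mark in MARKS.items()}


sample = "Wait... really? Yes, really; it works, mostly."
print(punctuation_profile(sample))
```

Comparing these rates across paragraphs, rather than reading one number in isolation, is what exposes uniform punctuation habits.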

D. Contextual “Glue”

- Transitional phrases: AI overuses “Therefore,” “Additionally,” and “In conclusion.”

How Modern AI Detectors Turn Stylometry Into Scores

Tools like BypassAI and ZeroGPT don’t just “guess” if text is AI-written. They run a hybrid analysis:

Step 1: Perplexity Check (The Predictability Test)

What it measures: How “surprised” an AI model is by your word choices.

- Human text: Unpredictable word patterns → high perplexity.

- AI text: Uses statistically “safe” words → low perplexity.

Example:

Human: “Stylometry? It’s like a linguistic lie detector, wildly complex, yet brutally simple.”

→ High perplexity (uncommon phrasing).

AI: “Stylometry is a method for detecting writing patterns through statistical analysis.”

→ Low perplexity (predictable word chain).
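
Perplexity has a standard definition: the exponential of the average negative log-probability a language model assigns to each token. The sketch below applies it with a toy unigram model and add-one smoothing, an assumption made to keep the example self-contained. Real detectors score text with large neural language models, and the `UnigramModel` class here is hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z']+", text.lower())


class UnigramModel:
    """Toy language model: word probabilities from raw counts."""

    def __init__(self, corpus: str):
        self.counts = Counter(tokenize(corpus))
        self.total = sum(self.counts.values())
        self.vocab = len(self.counts) + 1  # one extra slot for unseen words

    def prob(self, word: str) -> float:
        # Add-one smoothing so unseen words get a small non-zero probability.
        return (self.counts[word] + 1) / (self.total + self.vocab)

    def perplexity(self, text: str) -> float:
        words = tokenize(text)
        if not words:
            raise ValueError("text contains no words")
        log_sum = sum(math.log(self.prob(w)) for w in words)
        return math.exp(-log_sum / len(words))


model = UnigramModel("the cat sat on the mat the cat ran")
print(model.perplexity("the cat sat"))        # familiar words: lower score
print(model.perplexity("quantum zebra sat"))  # unseen words: higher score
```

The same logic scales up: text built from words the model expects scores low, and text full of surprises scores high.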

Step 2: Stylometry Layer (The Fingerprint Scan)

Detectors cross-reference perplexity with stylometric red flags:

➔ Low burstiness (sentence rhythm)

➔ Transition over-reliance (“However,” “Therefore”)

➔ Perfect grammar + zero voice

➔ Structural repetition (bullet/formula patterns)

The verdict formula:

Low Perplexity + Low Stylometric Variance = AI-Generated
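
As a rough illustration, the verdict formula can be written as a two-signal rule. Everything specific in this sketch is an assumption: the `Signals` container, both cutoff values and the "unclear" middle tier for cases where only one signal is low. Production detectors use trained classifiers rather than fixed thresholds.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    perplexity: float  # lower means more predictable word choices
    burstiness: float  # lower means more uniform sentence rhythm


def verdict(signals: Signals,
            perplexity_cutoff: float = 40.0,   # hypothetical threshold
            burstiness_cutoff: float = 0.3) -> str:  # hypothetical threshold
    """Combine both signals into a coarse label."""
    low_perplexity = signals.perplexity < perplexity_cutoff
    low_variance = signals.burstiness < burstiness_cutoff
    if low_perplexity and low_variance:
        return "likely AI"
    if low_perplexity or low_variance:
        return "unclear"
    return "likely human"


print(verdict(Signals(perplexity=20.0, burstiness=0.1)))
print(verdict(Signals(perplexity=20.0, burstiness=0.8)))
print(verdict(Signals(perplexity=90.0, burstiness=0.8)))
```

Requiring both signals to be low before flagging is what keeps predictable but rhythmically varied writing out of the "likely AI" bucket.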

Why Hybrid Models Win

Perplexity alone fails with:

- Human-like AI (e.g., Claude 3 Opus)

- Technical/academic writing (inherently low-perplexity)

Stylometry adds the human-quirk signal that detectors need.

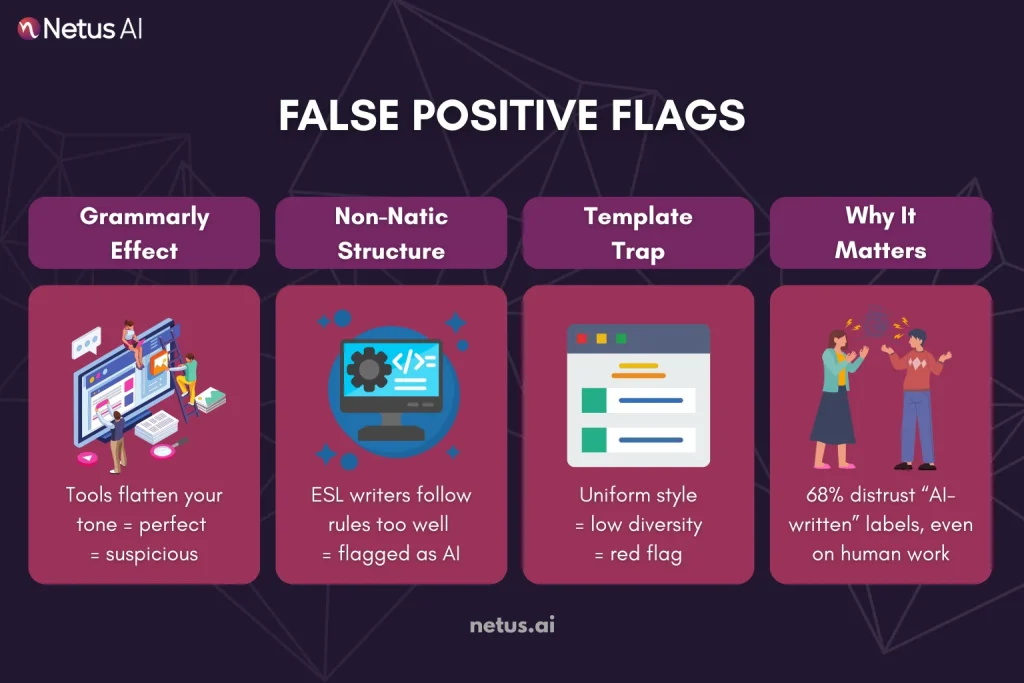

When Human Writers Get Mis-Tagged

Stylometry isn’t foolproof. Human writing often shares “AI-like” patterns, triggering false positives. Here’s why you might get unfairly flagged:

A. The “Grammarly Effect”: Over-Edited Prose

What happens:

Heavy grammar tool use → erases contractions, idioms and rhythm quirks → text becomes too perfect.

Detector verdict:

“Low burstiness + low perplexity = AI”

Example:

Original: “We can’t ignore stylometry, it’s getting scary good.”

Grammarly-ized: “One cannot disregard stylometry; its efficacy is formidable.” → 87% AI score on ZeroGPT.

B. Non-Native English Structure

What happens:

Formulaic syntax + avoidance of slang/idioms → mimics AI’s “safe” patterns.

Why it hurts:

Tools often misread ESL precision as synthetic text.

Key insight: Detectors train on native English patterns; yours may fall outside their “human” baseline.

C. Template-Driven Content (Marketing, Academia)

What happens:

Strict style guides enforce:

- Uniform transitions (“Furthermore, studies indicate”)

- Identical section structures

- Passive voice dominance

Detector verdict:

“Structural repetition + low lexical diversity → AI”

False positives aren’t just annoying; they damage credibility.

Humanizing Without Losing Your Voice

Beating detectors isn’t about “tricking” algorithms; it’s about reclaiming natural rhythm. Use these stylometry-aware fixes to humanize AI text without sounding like a different writer:

Targeted Anti-Stylometry Tactics

| Stylometry Red Flag | Humanizing Fix |
| --- | --- |
| Low burstiness | Alternate short and long sentences. Example: “Boom. Then, analyze the unpredictable cadence of human thought.” |
| Transition overuse | Swap 30% of transitions: replace “However” with “Here’s the twist,” or “Therefore” with “So what?” |
| Perfect grammar | Inject controlled “flaws”: use contractions (can’t), fragments (“See?”), ellipses (…) and em dashes. |
| Generic examples | Add hyper-specificity: “Backlinko’s 2024 CTR study (n=12,000 sites)” not “research shows.” |
| Template structures | Break formatting: mix bullets (▪), arrows (→), bold headers (Like this) and one-line paragraphs. |

The Psychology Behind Fixes

These tweaks don’t just fool detectors; they trigger unconscious trust in readers:

- Burstiness → feels conversational

- Specificity → signals expertise

- Format variety → implies original thinking

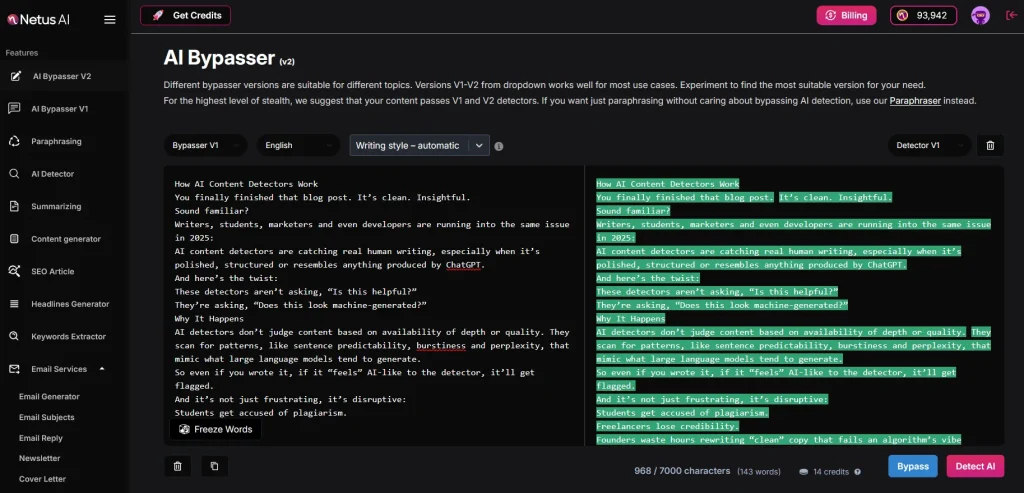

Use an AI bypasser tool like NetusAI for diagnostics, not just rewriting. It scans for:

🔴 Stylometry risk zones (e.g., “78% sentences same length”)

🟢 Voice retention score (“Your idiom density: 4% → ideal 8-12%”)

then suggests minimal edits to preserve your tone.

Smart Workflow: Detect → Rewrite → Retest with NetusAI

Publishing means writing for two audiences at once: humans and algorithms. The most reliable way to satisfy both is to build a tight feedback loop into your process:

1. Drop-in Detection

Paste a paragraph or an entire draft into NetusAI’s detector. In under a second you’ll see a color-coded verdict: 🟥 Detected, 🟡 Unclear or 🟢 Human. The tool highlights stylometric “red flags” sentence by sentence, so you can target your rewrites and freeze the words you want left unchanged.

2. Targeted Rewriting with the AI Bypasser

Click any flagged block and launch the AI Bypasser. It restructures cadence, sentence length and transition patterns (the stylometric signals) while preserving meaning. Think of it as a surgical edit, not a thesaurus swap.

3. Instant Retest

The moment the rewrite lands in your editor, hit Rescan. If the block flips to 🟢 Human, move on. If it’s 🟡, tweak a phrase or add a personal aside and rescan. The cycle takes seconds and prevents you from over-editing clean prose.
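
The three steps above form a simple loop, sketched here in Python. The `detect` and `rewrite` callables are hypothetical stand-ins that you would supply yourself; nothing in this sketch reflects NetusAI’s actual interface, and the label strings are assumptions.

```python
from typing import Callable


def detect_rewrite_retest(
    text: str,
    detect: Callable[[str], str],   # returns a label such as "human" or "detected"
    rewrite: Callable[[str], str],  # returns a revised version of the text
    max_rounds: int = 3,
) -> tuple[str, list[str]]:
    """Rewrite and retest until the text reads as human or rounds run out."""
    label = detect(text)
    history = [label]
    rounds = 0
    while label != "human" and rounds < max_rounds:
        text = rewrite(text)
        label = detect(text)  # always retest after a rewrite
        history.append(label)
        rounds += 1
    return text, history


# Toy stand-ins so the sketch runs end to end.
def toy_detect(text: str) -> str:
    return "human" if "?" in text else "detected"


def toy_rewrite(text: str) -> str:
    return text + " See?"


final, history = detect_rewrite_retest("Results are analyzed.", toy_detect, toy_rewrite)
print(final, history)
```

Capping the number of rounds mirrors the advice above: stop once a block passes, so you do not over-edit clean prose.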

Treat NetusAI as a stylometric stress test on demand. Detect the patterns, rewrite with intention and retest until you’re confidently human.

Final Thoughts

Stylometry, the statistical engine behind AI-detection tools, analyzes your writing’s rhythm, cadence and punctuation, not ideas or accuracy.

Traditional “good writing” (better grammar, cleaner structure) can sometimes increase the likelihood of a false positive.

Detectors target patterns, not prose. Human variation is your best defense. A rapid detect-rewrite-retest loop is now essential.

Tools like NetusAI fit into that loop precisely because they respect the difference between style and substance. They help you retain expertise, data and brand voice while adding organic unpredictability to bypass stylometric detectors.

FAQs

1. What exactly is stylometry in the context of AI detection?

Stylometry statistically analyzes writing style. Detectors analyze word frequency, sentence length, punctuation and syntax to create a “fingerprint.”

2. Can stylometry really identify who wrote something?

Academic models can identify authors within small candidate groups, while commercial AI detectors estimate the probability that a text is human or AI-generated rather than identifying a specific author.

3. Why does “clean” writing trigger false positives?

LLMs are trained for clarity, symmetry and grammatical consistency, traits we traditionally equate with good prose. Uniform sentences make your writing style look more like an AI’s.

4. Do grammar tools like Grammarly increase the odds of being flagged?

They can. Heavy, automated cleanup flattens burstiness (sentence-length variation) and removes idiosyncratic phrasing, both key human signals.

5. Is paraphrasing enough to beat stylometric detectors?

No. Simple synonym swaps leave deeper patterns (rhythm, clause order, passive-voice frequency) largely intact.

6. How does NetusAI help with stylometry issues?

NetusAI detects predictable passages, then its AI Bypasser rewrites them for better rhythm and varied syntax while preserving meaning. You can test, tweak and retest in real time until the stylometric metrics land in the “Human” zone.

7. Will increasing temperature in ChatGPT solve the problem?

Higher temperature boosts randomness but also risks incoherence. It rarely fixes stylometry fully, because core sentence scaffolding remains LLM-like.

8. What kinds of content are most vulnerable to stylometric flags?

Highly structured formats (academic essays, listicles, product descriptions) tend to follow rigid templates that mimic AI output. Long-form guides polished by grammar tools are next in line.