Whether you’re drafting a blog post, product page or school essay with AI, detectors like Turnitin and ZeroGPT will analyze its grammar, structure, tone, rhythm and sentence fingerprints. It’s not about what you say but how you say it.

The problem? Even human-written content can get flagged for sounding too “AI.”

Too polished. Too structured. Too predictable. Writing well isn’t enough.

Your content needs to sound human: unpredictable, emotionally aware, a little messy. That’s what builds trust with readers and passes detection.

This guide breaks down:

- What detectors look for

- How to shape prompts that dodge detection

- And how smart rewriting tools can help content stay authentic

Whether you’re using AI to brainstorm or draft full pieces, understanding how to control tone and flow is now a core writing skill.

What Does Human-Like Writing Actually Mean?

You’ve probably heard this advice a hundred times: “Make your AI content sound more human.” But here’s the problem: most people take that way too literally.

They toss in a few contractions, maybe an idiom or two, or pad sentences with filler words. Unfortunately, that’s not enough.

So let’s break it down:

1. Unpredictability Is Human

Humans write with flow, not formulas. One sentence might be short. The next rambles a bit. Sometimes we start with a bold claim; other times we meander before reaching the point. This natural variation in sentence length and structure, called burstiness, is often missing from AI drafts.

Real writers mix tempo. AI doesn’t, unless guided.
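There’s no single official formula for burstiness, and detectors like Turnitin and ZeroGPT don’t publish theirs. As a rough illustration only, the Python sketch below assumes one common proxy: the coefficient of variation of sentence length (standard deviation divided by mean). The function names are hypothetical, and the sentence splitter is deliberately naive.

```python
import re
import statistics

def sentence_lengths(text: str) -> list[int]:
    """Naive splitter (an assumption, not any detector's method):
    break after '.', '!' or '?' followed by whitespace, then count words."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]

def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (stdev / mean).
    0.0 means every sentence is the same length; higher means more varied tempo."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # not enough sentences to measure variation
    return statistics.pstdev(lengths) / statistics.mean(lengths)

uniform = "The tool is fast. The tool is cheap. The tool is good."
varied = "It works. Honestly, I did not expect the first draft to come back this clean. Wow."

print(burstiness(uniform))            # → 0.0
print(round(burstiness(varied), 2))   # three sentences of 2, 13 and 1 words
```

The uniform sample scores zero because all three sentences have four words; the varied sample scores well above 1. Real detectors combine many signals, so treat this as a way to see the idea, not to predict a verdict.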

2. Emotional Subtext Feeds Trust

Think of a personal blog post, a teacher’s email or a product review. Even when it’s factual, it carries intent: curiosity, frustration, excitement. AI writing? Often flat. It lacks implied tone unless explicitly prompted.

Even technical content benefits from subtle emotion.

3. Voice Means Subjectivity, Not Just First-Person

People don’t just present information. We editorialize. We joke. We raise doubts. We contradict ourselves mid-thought. AI models often produce content that’s factually fine but feels detached, because it lacks personal judgment. Voice = perspective, not just pronouns.

4. Imperfections Build Credibility

It sounds ironic, but imperfection is human. A quick aside. A missing “that.” A playful exaggeration. These are cues that a real person wrote something. AI’s default goal is to sound correct, which can make it sound robotic.

Strategic messiness ≠ poor writing. It’s authenticity.

So What’s the Real Definition?

Human-like writing isn’t about typos or tone alone. It’s about writing with:

- Variable structure (burstiness)

- Subtle emotion

- A visible point of view

- Micro-imperfections that feel real

This is where tools like NetusAI humanizer outperform basic paraphrasers. NetusAI doesn’t just swap words; it reshapes the entire signal of your content. That includes:

- Mixing sentence cadences

- Adjusting tone per section

- Rewriting in a way that feels owned

The result? You’re not just sounding human. You’re avoiding detection at the rhythm level.

Workflow Blueprint: Detect → Rewrite → Retest

Most people stop after writing a decent draft. To survive AI detection and engage real readers, build real-time feedback and refinement into your workflow.

Here’s the 4-part blueprint:

Draft With Human Intent

Start with the goal of sounding real, not perfect. That means:

- Use your natural voice

- Vary sentence lengths and tone

- Tell mini-stories or use personal phrasing

The first draft isn’t about avoiding detection; it’s about expressing thought clearly, like a real person would.

Run a Detection Scan

Before polishing, run your draft through tools like:

- ZeroGPT

- Turnitin’s AI detection

You’re not just looking for a red flag; you’re looking for why it’s flagged. Is the tone too regular? Is the vocabulary too safe?

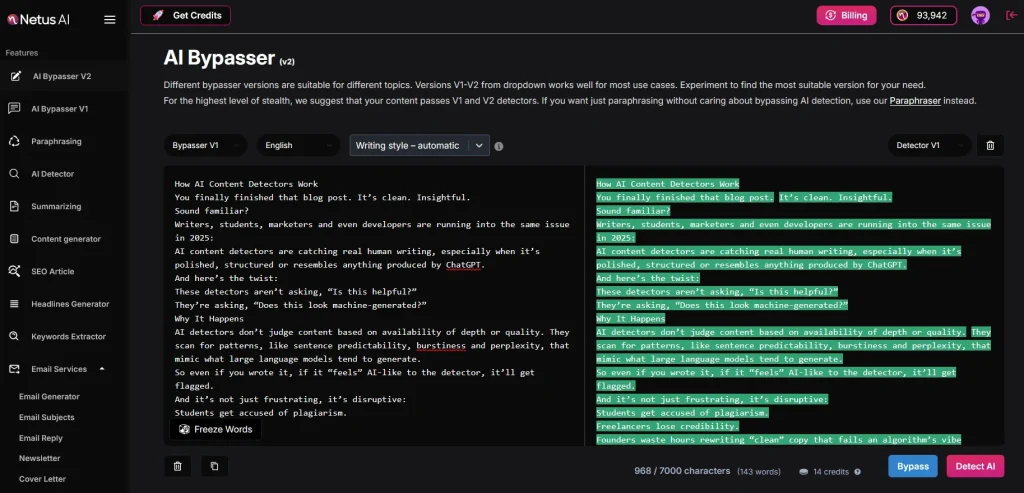

Rewrite Using NetusAI’s Detection Loop

This is where most writers guess; NetusAI humanizer lets you test.

- Paste your flagged content into NetusAI

- Select a bypass engine (V1 or V2) based on sensitivity

- Instantly see detection verdicts (🟢 Human / 🟡 Unclear / 🔴 Detected)

- Adjust and retest until you land a “🟢 Human” score

NetusAI stands out because of its feedback-first loop. You can edit, retest and improve your work right on the page until it passes detection, without leaving the site.

Final Scan + Publish With Confidence

After rewriting, do a final scan using the same detector you started with. Most users see a full shift from “AI-detected” to “Human” when using the advanced rewriting engine.

Now your content doesn’t just look human; it tests human.

Bonus: It Works Across Languages

Whether you’re writing in English, French or Japanese, NetusAI works. Every rewrite respects the tone and structure of your input language, while still restoring variation, burstiness and unpredictability.

Future Trends: Watermarked Outputs, Content Provenance & Regulating AI Tone

AI detection isn’t staying static. It’s evolving fast, and future-proofing your content now could save you from flags later. Here’s what’s coming next:

Token-Level Watermarking (OpenAI, Anthropic)

OpenAI and Anthropic are investigating watermarks for AI-generated text: statistical signals that are invisible to readers but detectable by algorithms. OpenAI reportedly held off on deploying its text watermark in 2024, but research is ongoing. One notable study is the 2023 paper “A Watermark for Large Language Models” by Kirchenbauer et al.

Future detectors won’t guess. They’ll know.
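To make “they’ll know” concrete: in the scheme described by Kirchenbauer et al., generation is nudged toward a pseudorandom “green list” of tokens, and a detector later counts how many green tokens appear and runs a one-proportion z-test. The Python sketch below shows only that detection arithmetic. Its green-list check is a simplified, hypothetical stand-in (a hash of each token pair) rather than the paper’s exact vocabulary partition, and gamma (the green-list fraction) is an illustrative value.

```python
import hashlib
import math

def is_green(prev_token: str, token: str, gamma: float) -> bool:
    """Hypothetical, simplified green-list check: hash the (previous, current)
    token pair to a number in [0, 1) and call the token green if it is below gamma.
    The real scheme seeds an RNG from the previous token to partition the vocabulary."""
    digest = hashlib.sha256(f"{prev_token}\x00{token}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") / 2**64 < gamma

def count_green(tokens: list[str], gamma: float) -> int:
    """Count green tokens, skipping the first token (it has no predecessor)."""
    return sum(is_green(p, t, gamma) for p, t in zip(tokens, tokens[1:]))

def watermark_z_score(green: int, total: int, gamma: float) -> float:
    """One-proportion z-test: z = (green - gamma*T) / sqrt(T*gamma*(1-gamma)).
    For unwatermarked text, green ~ Binomial(T, gamma), so z stays near 0."""
    if total <= 0 or not 0.0 < gamma < 1.0:
        raise ValueError("need total > 0 and 0 < gamma < 1")
    expected = gamma * total
    std = math.sqrt(total * gamma * (1.0 - gamma))
    return (green - expected) / std

tokens = "the quick brown fox jumps over the lazy dog".split()
gamma = 0.25
g = count_green(tokens, gamma)
print(g, round(watermark_z_score(g, len(tokens) - 1, gamma), 2))

# 120 green tokens out of 200 at gamma = 0.25 is far above the 50 expected by chance.
print(round(watermark_z_score(120, 200, gamma), 2))  # → 11.43
```

Ordinary text should land near z = 0; the paper flags text when z exceeds a threshold (it uses 4 in its experiments), and long watermarked passages clear that bar easily.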

Content Provenance & Metadata Chains

Adobe, Microsoft and The New York Times are among the backers of content provenance efforts such as the Content Authenticity Initiative. They advocate embedding metadata that records:

- Who created the content

- Whether AI was used

- What changes were made

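Think of it as a tamper-evident paper trail for content. The C2PA standard, an open technical specification, defines how this provenance data is cryptographically signed and attached to files so anyone can inspect it.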

Legal & Institutional Enforcement

Governments aren’t waiting around:

- The EU AI Act, in force since 2024 with obligations phasing in from 2025, classifies AI systems used in areas like education and election influence as “high-risk” and requires disclosure of certain AI-generated content.

- In the U.S., some universities and companies have begun requiring authorship attestations and proof of originality for submissions.

Even hybrid drafts (LLM + human edits) may fall under scrutiny if you can’t prove which part was yours.

Why NetusAI Matters in This Future

This isn’t just about beating a detector anymore; it’s about authorship integrity.

NetusAI rewrites your content from the ground up, adjusting its tone, cadence and rhythm. This approach helps you avoid detection today while ensuring your work is original tomorrow.

Final Thoughts

AI writing is here to stay, but so is AI detection. As tools improve, writing must have rhythm, cadence and emotional flow to effectively mimic human expression.

Paraphrasing alone isn’t enough; both what you say and how you say it are crucial for engaging your audience. Human-like writing fosters trust, leading to clicks, grades or conversions.

Run Your Draft Through the NetusAI Loop

Use the detect → rewrite → retest loop with NetusAI humanizer to enhance your AI-assisted content. It’s built not just to humanize your text but to help you own your tone.


FAQs

1. Can AI-generated text pass detection without edits?

Rarely with any reliability. Even output from advanced models like GPT-4 shows detectable patterns, which leaves unedited content vulnerable to detection tools. NetusAI can help by reshaping structure and tone for natural variation.

2. What’s the best way to humanize AI content without losing meaning?

Humanizing doesn’t mean dumbing down; it means reshaping tone, rhythm and sentence variety. Start by rewriting rigid structures into conversational ones. Vary sentence lengths. Add rhetorical questions or analogies.

3. Does using slang or typos help bypass detectors?

Not reliably. AI detectors evaluate deeper patterns such as grammar and syntax, so surface tweaks like slang or typos rarely change the verdict. To create more human-like content, make structural changes, adjust tone and vary style, as NetusAI does.

4. How does sentence length variation impact detection?

Human writers vary sentence lengths, while AI often produces uniformly medium-length sentences, a pattern detection tools can flag as synthetic. NetusAI addresses this by automatically varying sentence structure during rewrites.

5. Can stylometry detect AI even after paraphrasing?

Yes. Stylometry analyzes elements like sentence rhythm and punctuation, which simple paraphrasers often leave unchanged. NetusAI rewrites content with stylometric awareness, adjusting cadence and voice to better resemble human authorship.

6. Is it legal to rewrite AI content to avoid detection?

Yes, as long as the content is original, ethical and used responsibly. Misrepresenting AI-generated content as human without disclosure may breach integrity policies. NetusAI offers a detection-safe rewrite to help creators publish confidently.