You used AI for your blog, expecting high-quality, fast content and massive SEO scaling. You published, but traffic is non-existent. Your AI SEO blog is invisible and underperforming. Was using AI a mistake?

Using AI is not the real problem. The problem is taking raw AI output and treating it as finished SEO content. Your blog stays low in the rankings because it lacks real depth, a human feel and the kind of careful optimization that Google looks for and readers want.

Still, do not drop AI from your process. Learn how to shape that raw material into something that can hold its own and come out on top.

The harsh reality: Why is your AI content not magically ranking?

The core issue is the false assumption that “AI-generated content” equals “SEO-optimized, rank-worthy content.” Fluency isn’t expertise and coherence isn’t value. Without human intervention, raw AI content will keep failing to rank.

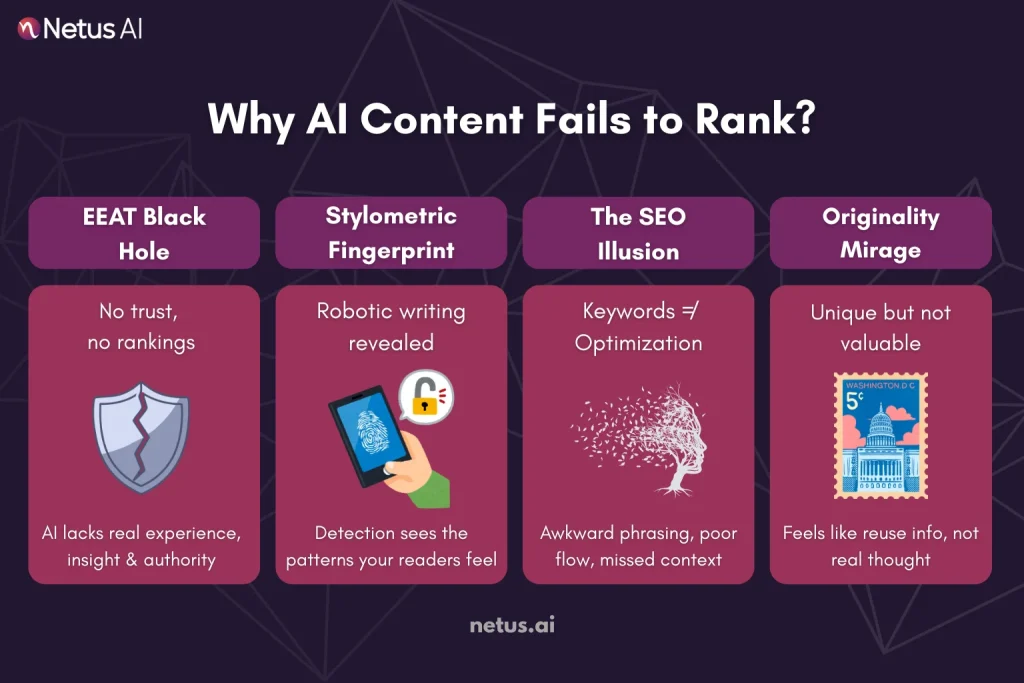

- The EEAT black hole: Raw AI content fails Google’s E-E-A-T standards (Experience, Expertise, Authoritativeness, Trustworthiness) because it lacks lived experience and knowledgeable authorship, hurting trust and rankings.

- The stylometric stain: Beyond EEAT, AI writing has a detectable stylistic fingerprint (its stylometry). It often exhibits:

  - Predictable patterns: Repetitive structures, monotonous rhythm, overused transition words.

  - Emotional flatline: A generic, anonymous tone that lacks human passion and perspective.

- Surface-skimming syndrome: AI is great at breadth but often fails at depth. It lists points without connecting them meaningfully, explores topics superficially and avoids the complex nuances or counter-arguments that demonstrate true understanding. This screams “thin content” to algorithms.

- The optimization mirage: You prompted the AI with keywords. It used them. So why isn’t it optimized? Because the SEO features built into AI writers often handle keywords mechanically. They might:

  - Stuff unnaturally: Forcing keywords in creates awkward, robotic phrasing that harms readability and user experience.

  - Miss semantic nuance: Ignoring related entities, latent topics and the contextual meaning around keywords results in content that lacks topical depth and relevance.

  - Botch structure: Heading hierarchies look right but lack logical flow or clear signaling of content importance. The underlying architecture is weak.

- The originality illusion: Raw AI content often repackages common information, lacking the unique angle, original data or compelling narrative needed to rank.
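Two of the patterns in the list above, monotonous sentence rhythm and mechanical keyword use, can be roughly measured before you publish. The Python sketch below is only an illustration: the function names are hypothetical, the sentence splitter is deliberately naive, and neither number is a metric that Google publishes or is known to use. Treat the results as a prompt for a human edit, not as a verdict.

```python
import re
from statistics import mean, pstdev


def split_sentences(text: str) -> list[str]:
    # Naive splitter (an assumption): break after ., ! or ? followed by whitespace.
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def sentence_length_spread(text: str) -> float:
    """Coefficient of variation of sentence lengths, counted in words.

    A value near 0 means every sentence is about the same length,
    which reads as a monotonous rhythm.
    """
    lengths = [len(s.split()) for s in split_sentences(text)]
    if len(lengths) < 2:
        return 0.0
    return pstdev(lengths) / mean(lengths)


def keyword_density(text: str, keyword: str) -> float:
    """Share of all words that belong to occurrences of `keyword`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    kw = re.findall(r"[a-z0-9']+", keyword.lower())
    if not words or not kw:
        return 0.0
    n = len(kw)
    # Count every position where the keyword phrase starts.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == kw)
    return hits * n / len(words)


if __name__ == "__main__":
    draft = "AI SEO is great. AI SEO is fast. AI SEO is cheap."
    print("rhythm spread:", sentence_length_spread(draft))
    print("keyword density:", keyword_density(draft, "AI SEO"))
```

A draft with a spread close to zero and a keyword taking up a large share of the words is a reasonable candidate for a rewrite that varies sentence length and uses related terms instead of repeating the same phrase.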

Decoding Google: It’s not who wrote it but how it serves searchers

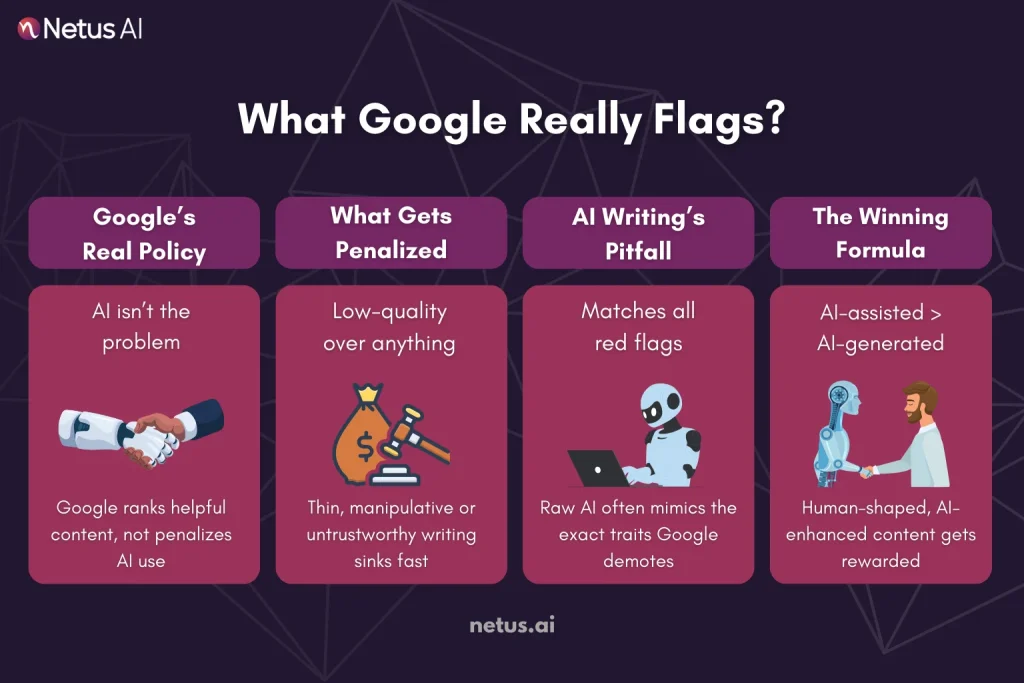

The panic often starts with a misconception: “Google penalizes AI content!” Let’s clarify Google’s actual stance.

- The official policy (refreshed for 2025): Google doesn’t penalize AI content; it prioritizes “helpful, reliable, people-first content” based on the Helpful Content Update and EEAT, regardless of creation method.

- What Google actually penalizes (the root cause): Google demotes content that is:

  - Low-quality: Thin, unoriginal, unhelpful.

  - Manipulative: Primarily designed to rank, not to inform (keyword stuffing, cloaking, sneaky redirects).

  - Lacking EEAT: Failing to demonstrate real experience, expertise, authoritativeness or trustworthiness.

  - Misleading or harmful: Factually inaccurate, promoting scams or spreading misinformation.

- Why AI content often gets caught in this net: Raw, unedited AI content fails to rank because it exhibits characteristics Google penalizes: low quality, poor EEAT and potential manipulation/hallucinations. It’s a quality issue, not an AI issue.

- The nuance: AI-assisted content (AI as a tool, guided by human expertise and fact-checked) can rank well. AI-generated content (copied from AI with minimal human editing) tends to be treated as low-quality, risking poor rankings because of its identifiable patterns and lack of EEAT. What matters is whether the content is detected as low quality, not who wrote it.

Red alert: Signs Google has flagged your AI blog

How do you know your AI content is failing to rank because of quality/EEAT flags and not just general competition? Watch for these telltale signs, often visible in Google Search Console (GSC):

- The organic traffic cliff dive: Your AI-written post is indexed, sees a brief flicker of traffic, then experiences a precipitous drop (near-vertical) in organic traffic. This rapid demotion signals Google’s algorithm has quickly reassessed the page, found it lacks quality and removed it from relevant SERPs.

- Indexing purgatory (the “crawled, not indexed” curse): Check the Page indexing report in GSC (formerly the Coverage report). Is your shiny new AI blog post stuck in “Crawled - currently not indexed” or “Discovered - currently not indexed” status for an extended period? This is a major red flag: Google knows about the page but has so far chosen not to include it in its index.

- The phantom page (indexed but invisible): Sometimes the page is indexed (GSC reports “Submitted and indexed”), yet it gets zero impressions for any relevant queries, even long-tail ones you know it should appear for. This suggests Google has indexed the page but assigns it such weak quality/EEAT signals that it is buried many pages deep. It exists in the index but has no meaningful visibility.

- Vanishing act (de-indexing): In more severe cases, especially if multiple pages are flagged or a manual action is applied (less common but possible for egregious, spammy AI use), previously indexed pages can be removed from Google’s index entirely. You’ll see clicks and impressions drop sharply to zero, and the affected URLs will no longer show as indexed in GSC. If a manual action was applied, it is listed in GSC’s Manual actions report.

- User engagement implosion: Look beyond GSC to your analytics. For your AI-powered pages:

  - Does the Bounce Rate skyrocket (e.g., 80%+)?

  - Is the Average Session Duration painfully low (e.g., under 30 seconds)?

  - Is Scroll Depth minimal (users barely get past the intro)?

  - Are Comments non-existent or purely spam?

These metrics signal user dissatisfaction. Visitors arrive, quickly perceive the content as unhelpful, generic or “off,” and leave.

Sustained rejection like this suggests that your content fails user intent, and pages that fail user intent tend to lose rankings. It is often the clearest sign that the AI content has set off user-experience alarms.
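The warning signs above can be turned into a quick triage script. The following Python sketch assumes you have already exported per-page numbers from your analytics tool and from GSC. The `PageStats` fields, the function names and the `window` and `drop_ratio` defaults are all hypothetical choices made for illustration; only the 80% bounce rate and 30-second session figures come from the examples in the text.

```python
from dataclasses import dataclass

# Thresholds taken from the examples in the text; tune them for your own site.
MAX_BOUNCE_RATE = 0.80
MIN_AVG_SESSION_SECONDS = 30


@dataclass
class PageStats:
    url: str
    bounce_rate: float           # fraction between 0.0 and 1.0
    avg_session_seconds: float
    daily_clicks: list[int]      # organic clicks per day, oldest first


def engagement_flags(page: PageStats) -> list[str]:
    """Return the engagement warning signs that apply to a page."""
    flags = []
    if page.bounce_rate >= MAX_BOUNCE_RATE:
        flags.append("high bounce rate")
    if page.avg_session_seconds < MIN_AVG_SESSION_SECONDS:
        flags.append("short sessions")
    return flags


def has_traffic_cliff(daily_clicks: list[int], window: int = 7,
                      drop_ratio: float = 0.2) -> bool:
    """True if the latest window averages at most `drop_ratio` of the best
    earlier window. Both defaults are illustrative assumptions."""
    if len(daily_clicks) < 2 * window:
        return False  # not enough history to compare two windows
    latest = sum(daily_clicks[-window:]) / window
    earlier = daily_clicks[:-window]
    peak = max(
        sum(earlier[i:i + window]) / window
        for i in range(len(earlier) - window + 1)
    )
    return peak > 0 and latest <= peak * drop_ratio


if __name__ == "__main__":
    page = PageStats("/ai-post", 0.86, 18.0, [50] * 7 + [2] * 7)
    print(page.url, engagement_flags(page), has_traffic_cliff(page.daily_clicks))
```

Pages that trip both checks are the strongest candidates for a human rewrite. A page that trips neither is more likely losing to ordinary competition.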

Content starts at generation: NetusAI’s SEO article writer and content generator

Avoiding stylometry and detection issues shouldn’t begin after content is written. It should start with the writing itself. That’s where NetusAI steps in.

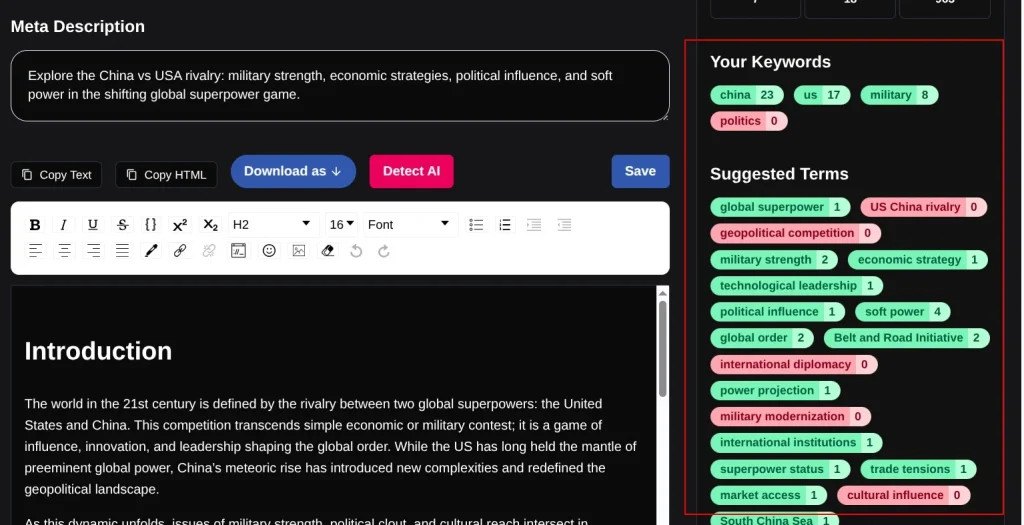

Unlike generic AI tools that churn out robotic, easily flagged paragraphs, the NetusAI SEO Article Generator is designed to help you create full-length blog posts that are already optimized for clarity, tone and search intent. It goes beyond simple drafting. It:

- Lets you input headlines and targeted SEO keywords

- Supports long-form templates for full blogs

- Auto-generates a structure with Title → Outline → Content

- Works in multiple languages for global teams

And most importantly, it ties directly into the Netus AI Bypasser + Detector system, so your output isn’t just readable; it’s already tuned to avoid detection.

You can generate, review and rewrite all in one interface without needing third-party tools to patch the gaps. It’s built for marketers, freelancers and bloggers who want their AI content to actually pass as human-written.

Final thoughts

AI blogs fail because they lack human refinement. Google prioritizes original, clear, well-structured, human-feeling content.

Use hybrid creation and NetusAI (SEO Article Writer, Content Generator, AI Bypasser) to fix it.

FAQs

1. Why isn’t my AI-written blog ranking on Google?

Because AI content often lacks structure, EEAT signals and originality. Worse, it can trigger AI detectors and get suppressed by search algorithms.

2. Does Google penalize all AI content?

No. Google penalizes low-quality, thin or clearly auto-generated content. If AI helps you write helpful, human-like blogs, you’re safe, but only if it passes detection.

3. How do I fix low-performing AI blog posts?

Start by reworking them with a humanizing tool like Netus.AI. Adjust structure, improve clarity and verify it passes AI detection before republishing.

4. Can I keep using ChatGPT to write SEO blogs?

Yes, but never publish ChatGPT outputs as-is. Run them through a detection-safe rewriter, like Netus.AI’s Bypasser, and optimize the structure for SEO.

5. What tools help AI blogs rank better?

You’ll want a stack: keyword tools (like Ahrefs), humanizers (like Netus.AI), plagiarism checkers (like Copyscape) and SEO tools (like SurferSEO or Clearscope).

6. How does NetusAI improve blog performance?

It rewrites flagged content, restructures paragraphs for SEO and gives you real-time verdicts on whether your blog looks human or robotic to detection tools.

7. Should I delete my AI blogs that didn’t rank?

Not necessarily. You can repurpose or rewrite them using NetusAI and re-optimize for updated keywords. There is no need to waste indexed pages.

8. What’s the best way to test if my blog sounds human?

Paste it into Netus.AI’s AI Detector. If it flags as “Detected,” rework it with the Bypasser engine until it gets a “Human” verdict.

9. Will paraphrasing help AI blogs rank?

Only if it’s deep paraphrasing, not word swaps. Netus.AI’s paraphraser restructures sentence flow and tone, which helps avoid both detection and stylometry tracing.