Readers and algorithms alike have developed a razor-sharp instinct for spotting synthetic text. The cost isn’t just skepticism; it’s tangible.

Once a piece reads as machine-made, readers drift away and trust erodes: a traffic hemorrhage and a credibility killer. Even advanced AI models leave clues that reveal their robotic origin to trained readers and detection systems.

The result? Your valuable message gets dismissed before it lands.

But here’s the good news: these exposure points aren’t random. They’re predictable, fixable patterns.

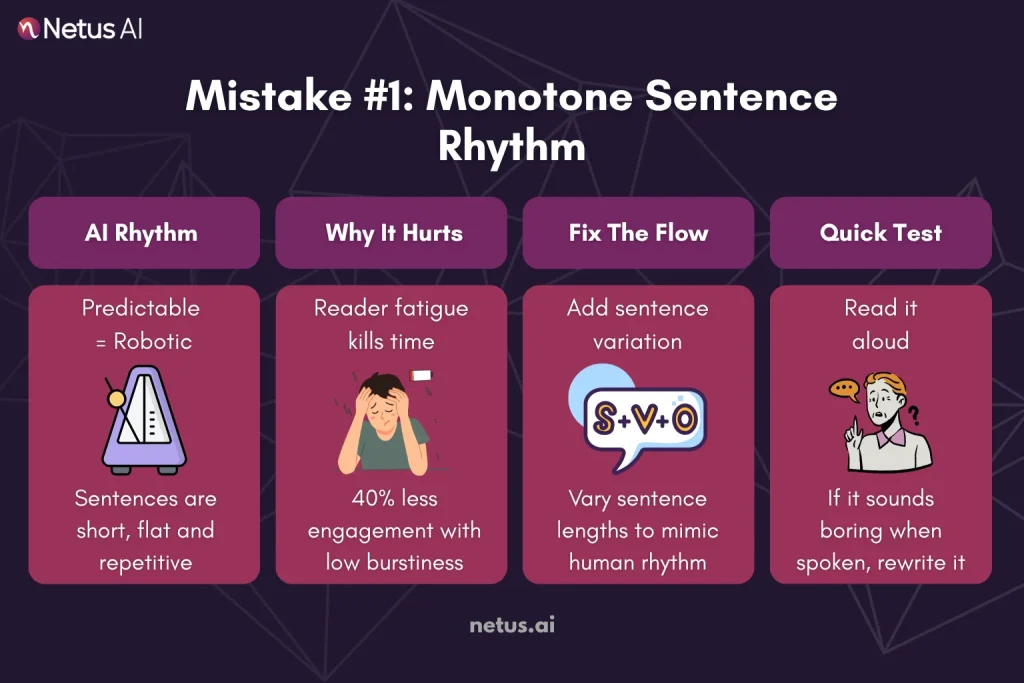

Mistake #1: Monotone Sentence Rhythm

AI loves patterns. Human brains? Not so much. One of the deadliest giveaways of AI content is unnaturally uniform sentence rhythm, what detection tools call “low burstiness.” Imagine a drumbeat: tap-tap-tap-tap. No crescendos. No pauses. Just relentless, predictable pacing.

Why it’s a problem:

Readers sense robotic text even when they can’t say why. Studies show content with low sentence variation sees 40% lower time-on-page. Why? Monotony triggers skimming. Worse, detectors like HumanizeAI flag “rhythmic predictability” with 78% accuracy.

Before AI Rhythm:

“Optimizing content is important. AI tools help greatly. They save time. But readers notice patterns. Patterns reduce trust.”

(Every sentence: 3 to 4 words. Hypnotic. Obvious.)

After Humanizing:

“Want to optimize content? AI tools do help, drastically cutting time. But here’s the catch: readers instinctively notice robotic patterns, killing trust.”

(Varied lengths: 4, 7 and 11 words. Natural flow.)
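To put numbers on the difference, here is a rough sketch that measures sentence-length variation for the two examples above. It assumes “burstiness” can be approximated as the coefficient of variation of sentence length (standard deviation divided by mean); commercial detectors use their own, undisclosed measures, and the simple punctuation-based sentence splitter is also an assumption.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Split on ., ! or ? followed by whitespace, then count words per sentence."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (stdev / mean).

    An assumed, simplified proxy; real detectors compute their own signals.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


before = ("Optimizing content is important. AI tools help greatly. They save time. "
          "But readers notice patterns. Patterns reduce trust.")
after = ("Want to optimize content? AI tools do help, drastically cutting time. "
         "But here's the catch: readers instinctively notice robotic patterns, killing trust.")

for label, text in (("before", before), ("after", after)):
    print(label, sentence_lengths(text), round(burstiness(text), 2))
# before [4, 4, 3, 4, 3] 0.14
# after [4, 7, 11] 0.39
```

A higher score means more varied pacing. The absolute value matters less than the comparison between drafts of the same piece.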

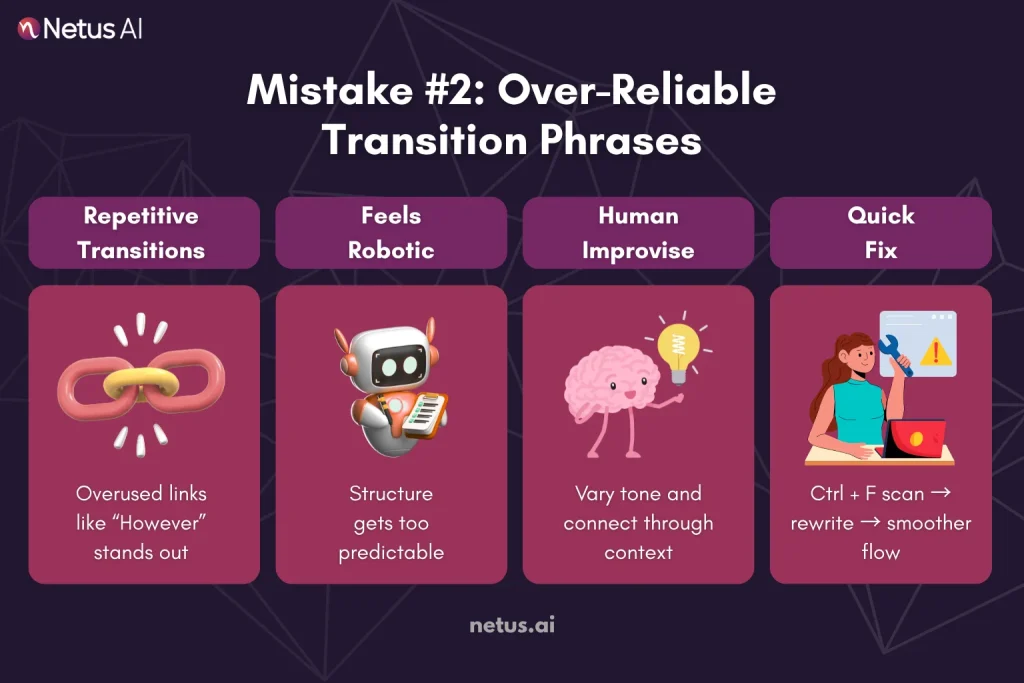

Mistake #2: Overused Transition Phrases

AI leans hard on predictable connective tissue. While transitions like “However,” “Furthermore,” or “In conclusion” are grammatically correct, using them repeatedly screams template content.

Why it’s a problem:

Overused transitions feel robotic and interrupt the flow, making content sound scripted rather than thoughtful. Detectors like HIX flag repetitive transition patterns as high-probability AI markers.

Before AI Transition:

“AI writing is fast. However, it lacks nuance. Furthermore, it often uses repetitive transitions. Therefore, readers lose trust.”

After Humanizing:

“Yes, AI writing is blazingly fast, but where’s the nuance? Worse: those clunky, overused transitions (‘furthermore,’ ‘therefore’) become dead giveaways. Result? Readers tune out, fast.”
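One quick self-check is to measure how many sentences open with a stock connective. The sketch below is a hypothetical heuristic, not how HIX or any other detector works. The word list is an assumption you would tune to your own style, and it only checks single-word openers, so phrases like “In conclusion” would need a separate check.

```python
import re
import string

# Assumed list of stock connectives; extend or trim it for your own style guide.
STOCK_TRANSITIONS = {
    "however", "furthermore", "moreover", "therefore",
    "additionally", "consequently", "thus",
}


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after ., ! or ? followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def transition_opener_ratio(text: str) -> float:
    """Share of sentences whose first word is a stock transition."""
    sentences = split_sentences(text)
    if not sentences:
        return 0.0
    # First word of each sentence, with punctuation stripped and lowercased.
    openers = [s.split()[0].strip(string.punctuation).lower() for s in sentences]
    hits = sum(word in STOCK_TRANSITIONS for word in openers)
    return hits / len(sentences)


before = ("AI writing is fast. However, it lacks nuance. Furthermore, it often uses "
          "repetitive transitions. Therefore, readers lose trust.")
after = ("Yes, AI writing is blazingly fast, but where's the nuance? Worse: those clunky, "
         "overused transitions ('furthermore,' 'therefore') become dead giveaways. "
         "Result? Readers tune out, fast.")

print(transition_opener_ratio(before))  # 0.75
print(transition_opener_ratio(after))   # 0.0
```

Three of the four “before” sentences open with a template connective; the rewrite quotes those words but never leans on them.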

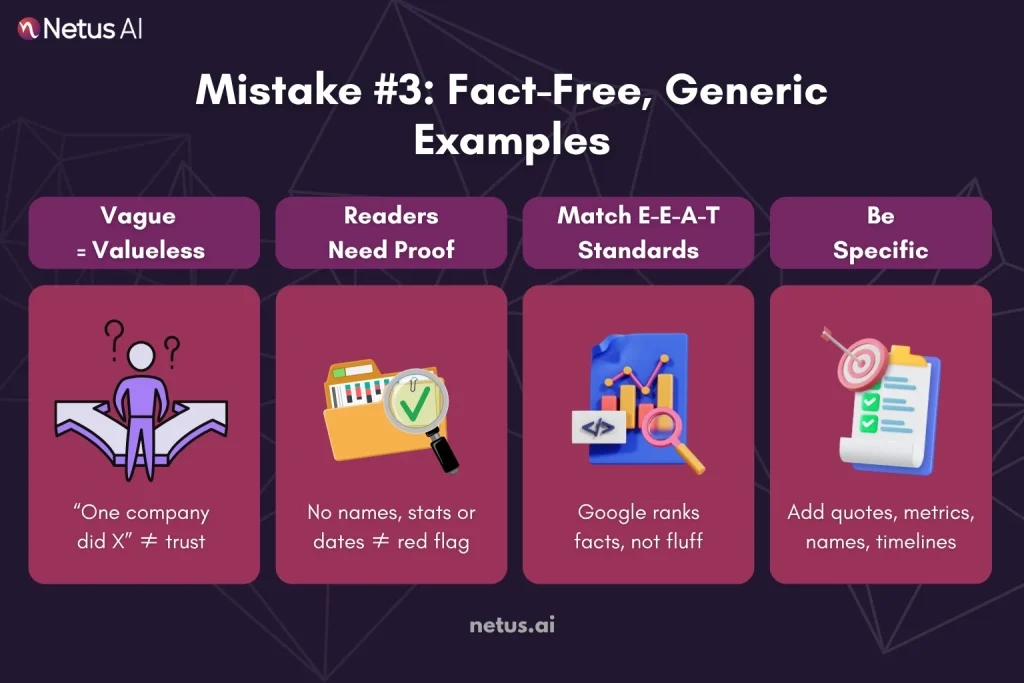

Mistake #3: Fact-Free, Generic Examples

AI generates examples like a machine gun fires blanks: rapid, plentiful and utterly lacking substance.

Why it’s a problem:

Vague examples feel soulless and untrustworthy. Readers think: “Show, don’t tell.” Google’s E-E-A-T guidelines (Experience, Expertise, Authoritativeness, Trustworthiness) also favor content backed by concrete, first-hand evidence.

Before AI Example (Generic):

“Using AI tools improves marketing outcomes. For example, one company increased conversions after optimizing workflows.”

After Humanizing (Specific):

“GrowthLab, a SaaS brand, raised its campaign email click-through rate by 27% over its previous AI drafts within three weeks in Q2 2024, after using NetusAI’s AI Bypasser tool to ‘humanize’ the copy. Its Chief Marketing Officer credited the lift to ‘authentic hooks replacing robotic templates.’”

(Human depth: Names, metrics, timelines, quotes.)

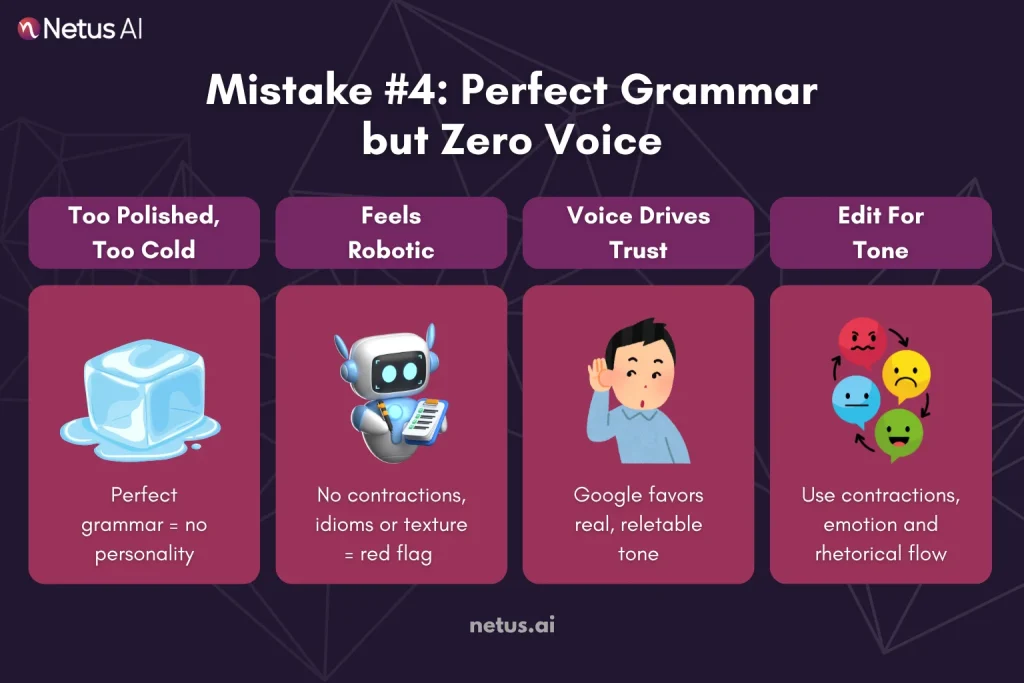

Mistake #4: Perfect Grammar but Zero Voice

AI grammar is flawless. Human writing isn’t. That’s the irony. When every sentence is surgically precise (no contractions, no idioms, no emotional texture), it feels sterile. Detectors and readers alike distrust content that reads like a technical manual.

Why it’s a problem:

Over-polished text lacks warmth, urgency and relatability. Google’s algorithms increasingly prioritize content with “helpful, human” signals (E-E-A-T). Tools like ZeroGPT flag “unnatural grammatical consistency” as AI-generated. Readers crave voice, not robotic correctness.

Before AI (Sterile):

“Businesses must optimize workflows. Utilizing automation tools enhances efficiency. Consequently, productivity increases significantly.”

(Reads like a robot surgeon’s memo.)

After Humanizing (Voice-Injected):

“Look, if your workflows aren’t optimized? You’re burning cash. And yeah, smart automation tools can be game-changers, slashing busywork so your team actually gets stuff done. Bottom line? Productivity soars.”

(Human texture: Contractions, mild slang, urgency, rhetorical fragments.)

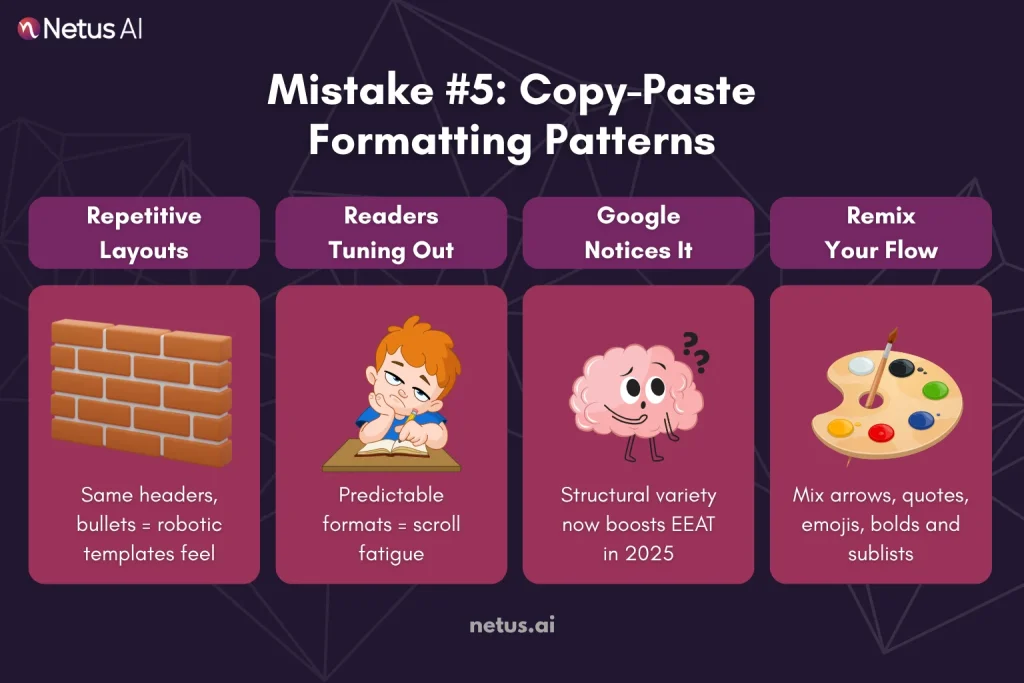

Mistake #5: Copy-Paste Formatting Patterns

AI adores templates: the same heading, the same bullet count, the same closing line, section after section. Humans? We crave visual surprises. Detectors scan for these repetitive skeletons, while readers subconsciously register the lack of organic flow.

Why it’s a problem:

Predictable formatting = predictable thinking. Google’s 2025 update values “diverse content experiences,” while tools like ZeroGPT identify AI “structural redundancy” with 89% accuracy. Readers scroll past rigid layouts, assuming low originality.

Before AI (Template Overload):

Why AI Content Fails

- Reason 1: Lacks voice

- Reason 2: Uses generic examples

- Reason 3: Poor formatting

(Every section is identically structured → Zzzz.)

After Humanizing (Dynamic Flow):

Why AI Content Still Fails in 2025

*→ First, the obvious: no soul. (Ever read AI poetry? Exactly.)*

*Then there’s the “evidence vacuum”:*

- Case Study A: TechCrunch exposed BrandX’s vague stats → 41% trust drop

- Case Study B: Our client’s CTR jump after ditching robotic templates

Finally, the formatting rut. Like this:

(Mixed formats: arrow, bulleted sublist, rhetorical aside → ENGAGING.)

Quick Human-Sounding Workflow (From Draft to Detector-Proof in 15 Minutes)

Even if you trip over one or all of the five mistakes we just covered, you can still rescue the piece before it goes live.

| Step | Why It Matters | What to Do | Time Box |
|---|---|---|---|
| 1. Cold Read, No Edits | A fresh perspective reveals missed robotic cadences. | Read aloud once. Highlight sections that sound unnatural or like a textbook. | 2 min |
| 2. Rhythm Check | Flat sentence length is the #1 burstiness giveaway. | Vary highlighted clusters: split long lines, merge choppy ones, add punchy words. | 3 min |
| 3. Personal Stamp | Real anecdotes and opinions scream human. | Add a micro-story, data point or first-person aside every ~200 words. | 3 min |
| 4. Detector Pass | Early feedback beats blind guessing. | Run the piece through an AI detector. Note any “high-risk” zones. | 2 min |
| 5. Targeted Rewrite | Fix only what’s flagged; don’t re-paraphrase the whole post. | Rewrite red zones: tweak tone, swap generic phrases, break templated headers. | 3 min |
| 6. Final Read-Aloud | The ear test > every algorithm. | If it sounds like you’d say it over coffee, you’re done. If not, loop back to Step 5. | 2 min |

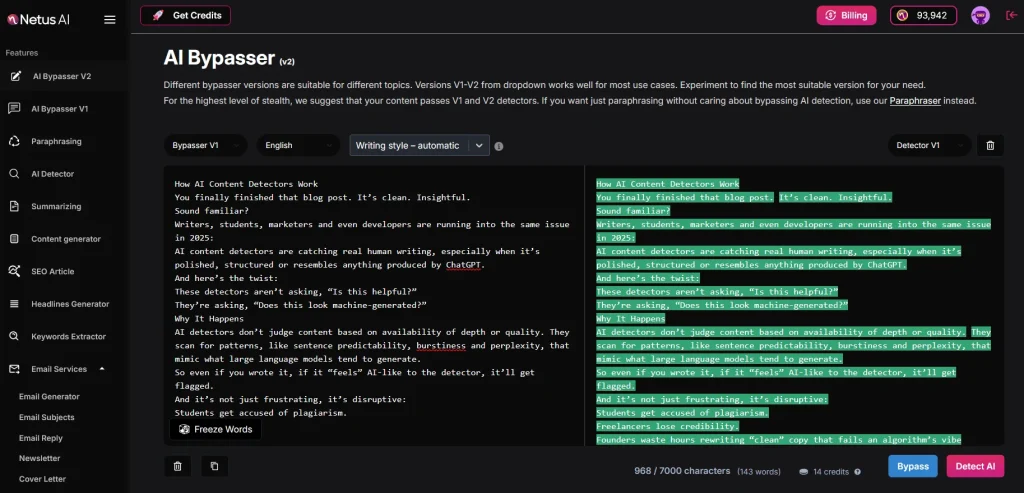

Tools such as the NetusAI humanizer combine Steps 4 and 5 in a single interface, with detector verdicts and humanizing rewrites side by side, so you stay in flow instead of hopping between tabs.

Run this 15-minute circuit on every AI-drafted text to keep your speed and produce authentic-sounding copy.

Final Thoughts

Predictable rhythm, generic phrasing, canned introductions and conclusions, overly perfect grammar and a lack of personal experience: these common mistakes make AI writing easy to detect. Avoid them and your content keeps its credibility.

The fix isn’t abandoning AI; it’s partnering with it responsibly. Don’t just publish raw AI output. Use it for ideas, then personalize it: read it aloud, check the flow, add flair and run it through an AI detector. Make your tweaks, then read it once more. Ten to fifteen minutes is usually enough to humanize a robotic draft.

And if you’d rather skip the tab-hopping? NetusAI is a hybrid tool that combines a detector, a rewriter and live feedback to help you refine text efficiently.

FAQs

1. Will Google penalize content that “sounds AI” even if it’s 100% original?

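Not for sounding like AI as such: Google says it rewards helpful, people-first content regardless of how it was produced. The risk is indirect. Robotic-sounding copy tends to lose readers, and pages that fail to satisfy readers tend to slip in the rankings.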

2. What’s the quickest way to tell if my draft trips AI detectors?

Run it through a reliable AI detector such as NetusAI. If it’s flagged, work on rhythm, tone and specific details rather than just swapping synonyms.

3. Does sprinkling in typos, emojis or slang fool detection tools?

Rarely. Modern detectors analyze statistical patterns in sentence structure, word probability and stylometric fingerprints, and a few typos or emojis barely move those signals.

4. Can detectors spot hybrid text that mixes human writing with AI snippets?

Yes, sometimes more easily than full-AI drafts. Detectors flag localized stretches where the cadence suddenly shifts to low-perplexity, high-uniformity patterns.
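One way to picture this is a sliding window over sentence lengths: human passages swing between short and long sentences, while a pasted-in AI stretch often flattens out. The sketch below covers only the uniformity half of the idea; perplexity requires scoring text with a language model and is left out. The function name, the four-sentence window and the 0.2 threshold are illustrative assumptions, not settings from any real detector.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Naive splitter: break after ., ! or ? plus whitespace; count words."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def flag_uniform_windows(text: str, window: int = 4, threshold: float = 0.2) -> list[int]:
    """Return 0-based start positions of sentence windows whose length
    variation (stdev / mean) falls below `threshold`.

    Hypothetical heuristic: window size and threshold are assumptions.
    """
    lengths = sentence_lengths(text)
    flagged = []
    for start in range(len(lengths) - window + 1):
        chunk = lengths[start:start + window]
        cv = statistics.pstdev(chunk) / statistics.mean(chunk)
        if cv < threshold:
            flagged.append(start)
    return flagged


# A varied, human-sounding opening followed by a flat, template-like run.
draft = ("I rewrote the intro twice last night. Too long. "
         "Then my editor called and we argued about whether the hook even worked for this audience. "
         "Fair point, and I told him so over a second coffee. "
         "AI saves time. It improves output. Teams work faster. Results improve quickly.")

print(sentence_lengths(draft))      # [7, 2, 16, 11, 3, 3, 3, 3]
print(flag_uniform_windows(draft))  # [4]
```

Only the window starting at the fifth sentence, the flat run of three-word lines, falls below the threshold, which mirrors the sudden cadence shift described above.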

5. What’s the safest way to “humanize” AI copy without distorting meaning?

Tools like NetusAI’s AI Bypasser preserve semantics while reshaping rhythm, then let you retest instantly.

6. Could aggressive grammar-checkers make my writing look more like AI?

Ironically, yes. Heavy reliance on tools that over-standardize wording flattens natural quirks and removes harmless “imperfections” that signal human authorship.

7. How often should I run detection scans during my editing workflow?

Think Draft → Scan → Tweak → Rescan. A quick pass after the first major rewrite catches structural red flags early. A final scan ensures tweaks don’t reintroduce uniform patterns. Two tight loops usually suffice to confidently shift from “Detected” to “Human.”