You probably know that AI can help people write engaging, clever content fast.

But AI isn’t always right: it makes mistakes and sometimes copies other people’s work. That’s where AI content detectors come in. These tools estimate whether a piece of text was written by AI.

*Update Aug 2024: OpenAI Won’t Watermark ChatGPT Text*

OpenAI tried to build a tool that could reliably spot ChatGPT’s writing, but it fell short. Instead, the company worked on a different approach: watermarking. The idea is to leave a hidden signature in the text through subtle patterns in word choice, so the writing can be identified as ChatGPT’s later.
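OpenAI hasn’t published how its watermark works. As a rough illustration only, here is a toy version of a “green list” scheme that has been described in academic research, simplified to whole words: a hash of each word pair decides whether a word is “green,” the generator prefers green words, and the detector checks whether green words appear far more often than chance would allow. Every name and parameter here (`is_green`, `pick_word`, `watermark_z_score`, the 50% green fraction, the tiny vocabulary) is our own hypothetical choice, not OpenAI’s method.

```python
import hashlib
import math

GREEN_FRACTION = 0.5  # hypothetical: share of words that count as "green" at each step


def is_green(prev_word: str, word: str) -> bool:
    # Hash the previous word together with the candidate; the candidate is
    # "green" if the hash lands in the lowest GREEN_FRACTION of the range.
    digest = hashlib.sha256(f"{prev_word}|{word}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") / 2**64 < GREEN_FRACTION


def pick_word(prev_word: str, candidates: list[str]) -> str:
    # Watermarking side: among equally acceptable words, prefer a green one.
    for word in candidates:
        if is_green(prev_word, word):
            return word
    return candidates[0]  # no green option, so fall back to the first candidate


def watermark_z_score(text: str) -> float:
    # Detection side: count green words and compare with the number expected by chance.
    words = text.lower().split()
    pairs = list(zip(words, words[1:]))
    n = len(pairs)
    if n == 0:
        return 0.0
    green = sum(is_green(prev, word) for prev, word in pairs)
    expected = GREEN_FRACTION * n
    std = math.sqrt(n * GREEN_FRACTION * (1 - GREEN_FRACTION))
    return (green - expected) / std


# Hypothetical usage: "generate" a 51-word passage that always picks green words
vocab = ["data", "model", "text", "word", "idea", "tool", "note", "fact", "plan", "test", "case", "line"]
words = ["the"]
for _ in range(50):
    words.append(pick_word(words[-1], vocab))
print(round(watermark_z_score(" ".join(words)), 2))
```

A passage built with `pick_word` scores far above zero, while ordinary text should land near zero. Rewriting the passage with a different model replaces most word pairs, which pulls the score back toward zero; that is exactly the weakness OpenAI worried about.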

Even so, OpenAI hasn’t released the watermark to the public. Here’s why:

- The watermark would make AI writing much easier to spot, but it wouldn’t catch every case.

- Anyone who knows their ChatGPT text carries a watermark could simply rewrite it with another AI model, such as Claude or Llama, which would erase the mark.

- Some people who write with AI don’t want their text to be flagged as AI-generated.

*Update July 25, 2023: OpenAI Shuts Down Their Text Classifier*

Here’s an update on OpenAI’s Text Classifier.

OpenAI has shut down its text classifier because it wasn’t accurate enough, TechCrunch reports. You can see how it performed in our post on AI detection accuracy: https://originality.ai/blog/ai-content-detection-accuracy.

AI detection is a hard problem, and we sympathize with OpenAI’s team. As the makers of ChatGPT, they were under enormous pressure to get it right.

From the outside, it’s clear that OpenAI, as a leading AI company and the one many credit with starting the AI content boom, had to be extra careful. For the company that built ChatGPT, falsely flagging human writing as AI-generated would have been especially damaging.

Building a tool that reliably spots ChatGPT-generated text is hard. There are many open challenges, and no detector will be right 100% of the time. Balancing a high detection rate against the harm of false accusations is tricky for anyone, and especially for OpenAI.

We believe that both transparency and accountability are crucial in this industry, and we commend OpenAI for making the tough call to shut it down when its performance fell short of the desired standard.

Because AI output can be low quality or borrow too heavily from others’ work, AI content detectors are useful. They can flag machine-written text even when it isn’t copied from anywhere, which matters because Google discourages websites from publishing text that reads as inauthentic or machine-generated.

Marketers, businesses, and educators all want to know whether a text was written by AI, and they use these tools to check.

Today we’re looking at the OpenAI Text Classifier, a detector from the company behind large AI models like ChatGPT and GPT-3, and seeing how it compares with other tools.

Features

OpenAI Text Classifier is a free tool that estimates how likely it is that a piece of text was written by AI. It’s simple to use. Here’s what it offers:

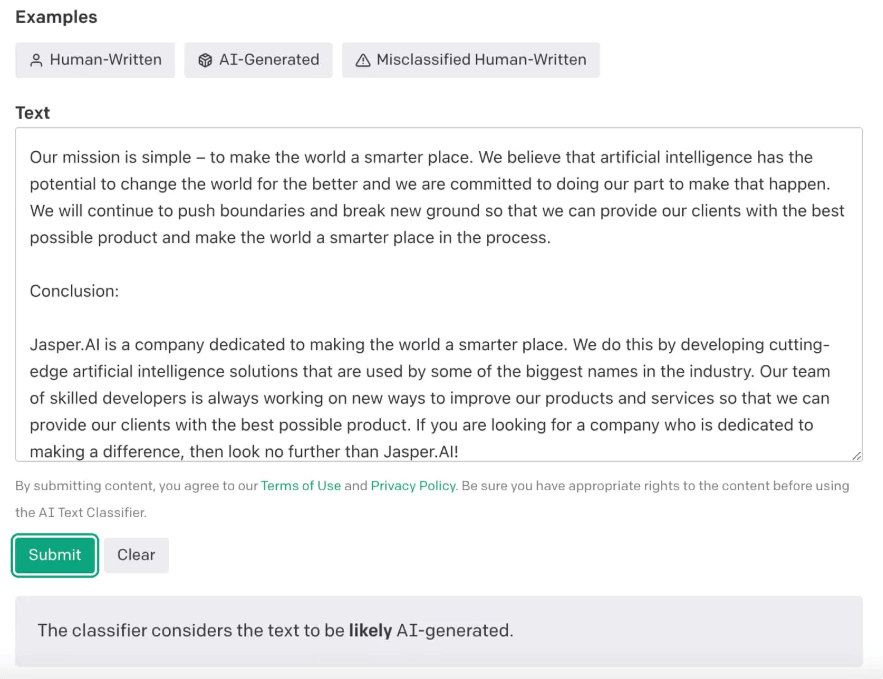

- Easy interface: Just paste your text into the box and hit “submit” to see results right away.

- Trained well: OpenAI’s Text Classifier was trained on text from 34 different language models, including OpenAI’s own. It also learned from human-written text such as Wikipedia articles, web pages linked from Reddit, and examples from an earlier OpenAI project.

- No cost: OpenAI made the Text Classifier free to use, aiming to put the technology in as many hands as possible.

Keep in mind that the OpenAI Text Classifier doesn’t catch everything, and it needs at least 1,000 characters of input, which is roughly 150-250 words.

Unfortunately, it also can’t detect plagiarism.

Here are the good and bad points about it:

Good Points:

- It’s easy to understand and use.

- It gives you answers fast.

- You can scan as much as you want without paying.

Bad Points:

- By OpenAI’s own figures, it correctly flags only 26% of AI-written text.

- Right now, it can’t check if something is copied from somewhere else.

- It’s not good for texts that are not in English.

- It’s missing some extras.

Checking How Well OpenAI’s Text Classifier Works

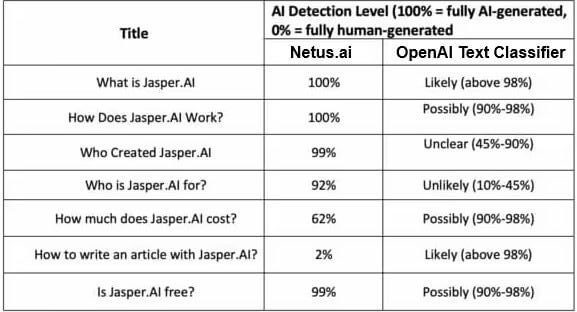

Let’s dive in. We’ll compare how well OpenAI’s Text Classifier and Originality.ai spot AI-generated text, using samples created with Jasper, a popular GPT-3-powered writing tool.

Originality.ai is built to detect content written by GPT-2, GPT-3, GPT-3.5, and ChatGPT, so it will be interesting to see which of the two does better on fully AI-generated content.

The OpenAI Text Classifier uses a five-level scale to judge whether text was written by AI, based on the estimated probability that it was: “very unlikely” (under 10%), “unlikely” (10%-45%), “unclear if it is” (45%-90%), “possibly” (90%-98%), and “likely” (over 98%).
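The probability bands above can be expressed as a small lookup function. This is a sketch under assumptions: the function name `openai_label` and the raw probability input `p_ai` are hypothetical, and because the published ranges share their endpoints, we assume each boundary value (10%, 45%, 90%) belongs to the higher band, while exactly 98% stays in “possibly” since “likely” requires more than 98%.

```python
def openai_label(p_ai: float) -> str:
    """Map an estimated probability that text is AI-written to the classifier's five bands."""
    if not 0.0 <= p_ai <= 1.0:
        raise ValueError("p_ai must be between 0 and 1")
    if p_ai < 0.10:
        return "very unlikely"
    if p_ai < 0.45:
        return "unlikely"
    if p_ai < 0.90:
        return "unclear if it is"
    if p_ai <= 0.98:
        return "possibly"
    return "likely"  # more than 98%


for p in (0.05, 0.30, 0.60, 0.95, 0.99):
    print(p, openai_label(p))
```

Note how narrow the top band is: only scores above 98% earn a “likely” verdict, which is one reason the tool flags so little AI text with confidence.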

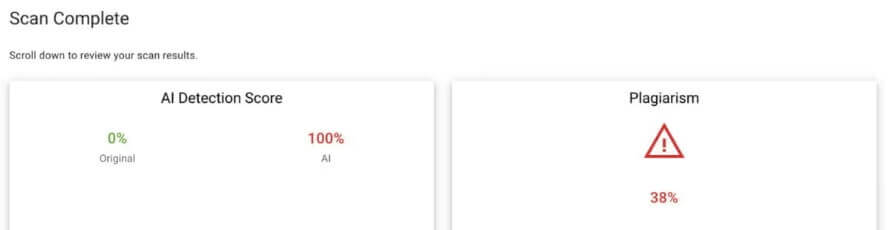

Originality.ai takes a different approach: it reports scores showing how much of the text appears AI-generated and whether any of it is plagiarized.

Let’s compare the OpenAI Text Classifier and Originality.ai side by side.

Right from the start, the OpenAI Text Classifier seems to miss the mark on spotting that our examples were written by AI.

OpenAI has openly acknowledged its detector’s limits: in its own tests, it labeled only 26% of AI-written text as “likely AI-written,” and it wrongly labeled human-written text as AI-written 9% of the time. In our own check of seven samples, the tool got it wrong twice.

Originality.ai, on the other hand, correctly identified AI content in five of the seven samples, with one miss and one uncertain result.

Keep in mind that the two tools measure accuracy differently. OpenAI’s 26% figure counts only the cases where its classifier is highly confident that text is AI-written, while Originality.ai counts every case where it correctly identifies AI-generated content. That’s part of why Originality.ai reports such high accuracy.

So even though the tools look similar, OpenAI’s classifier may seem weaker. That isn’t necessarily because it’s less capable, but because it’s deliberately cautious.

OpenAI has said its Text Classifier should not be the only basis for a decision. It should be used alongside other methods of determining who wrote a text.
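A detection rate and an overall accuracy figure are different measures, and a small worked calculation shows how far apart they can be. This sketch treats OpenAI’s published rates (26% of AI-written text labeled “likely AI-written,” 9% of human-written text mislabeled as AI) as the tool’s true-positive and false-positive rates, and assumes a hypothetical test set split evenly between AI and human texts. The function name and the 50/50 split are our assumptions, not OpenAI’s.

```python
def accuracy_from_rates(true_positive_rate: float,
                        false_positive_rate: float,
                        ai_share: float = 0.5) -> float:
    """Overall accuracy on a hypothetical test set in which `ai_share` of texts are AI-written.

    AI-written texts are classified correctly at the true-positive rate;
    human-written texts are classified correctly at 1 - false-positive rate.
    """
    true_negative_rate = 1.0 - false_positive_rate
    return ai_share * true_positive_rate + (1.0 - ai_share) * true_negative_rate


# OpenAI's published rates, assuming (hypothetically) a 50/50 mix of AI and human texts
print(round(accuracy_from_rates(0.26, 0.09), 3))  # 0.585
```

Under those assumptions, overall accuracy comes out near 58.5% even though only 26% of AI text is caught, because most human text is correctly left unflagged. A different mix of AI and human samples would give a different number.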

Based on what we found, Originality.ai stands out as the best option for those who need a reliable way to tell if AI made the content.

A Simple Look at the OpenAI Text Classifier vs. Originality.ai Test

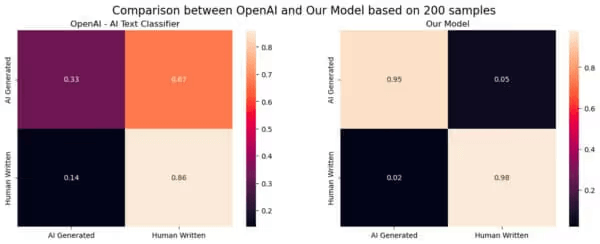

We took a closer look at how OpenAI’s Text Classifier compares with Originality.ai in spotting AI-written text.

Introduction

Here we compare our AI detector (Originality.ai) with OpenAI’s AI Text Classifier (https://platform.openai.com/ai-text-classifier).

Data Breakdown:

- We looked at 200 pieces of writing.

- Out of these, 100 were written by people.

- Then, 20 pieces came from GPT-3.

- Another 20 were made by GPT-J.

- We also had 20 from GPT-Neo.

- 20 more were from GPT-2.

- And 20 were reworded (paraphrased) versions of the original texts.

Evaluation

“OpenAI labels each document with terms like very unlikely, unlikely, unclear if it is, possibly, or likely AI-generated.”

We sort results into two categories, AI and Human, while OpenAI uses five. So we count “unclear if it is,” “possibly,” and “likely” as AI, and “very unlikely” and “unlikely” as Human.
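The five-to-two mapping described above is simple enough to write down directly. A minimal sketch, assuming the label strings match the wording quoted from OpenAI; the function name `to_binary` is our own.

```python
AI_LABELS = {"unclear if it is", "possibly", "likely"}
HUMAN_LABELS = {"very unlikely", "unlikely"}


def to_binary(openai_label: str) -> str:
    """Collapse one of OpenAI's five labels into our two classes, AI or Human."""
    label = openai_label.strip().lower()
    if label in AI_LABELS:
        return "AI"
    if label in HUMAN_LABELS:
        return "Human"
    raise ValueError(f"unknown label: {openai_label!r}")


print([to_binary(l) for l in ["likely", "unclear if it is", "unlikely", "very unlikely"]])
```

Counting “unclear if it is” as AI is a deliberate choice: it gives OpenAI’s tool credit for any hint of AI authorship, which is more generous than OpenAI’s own “likely”-only detection rate.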

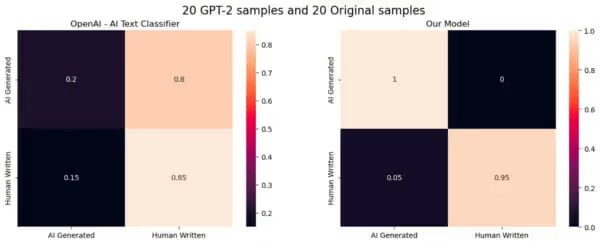

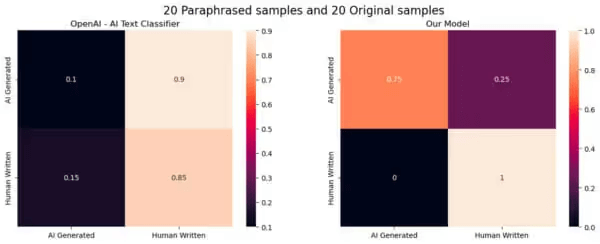

In the charts below, the Y-axis shows the actual label and the X-axis shows the predicted label.

- Every example

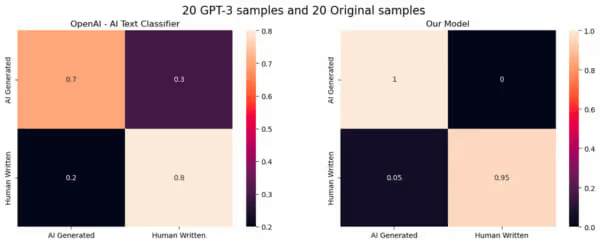

- 20 examples made by a computer program GPT-3 and 20 made by people.

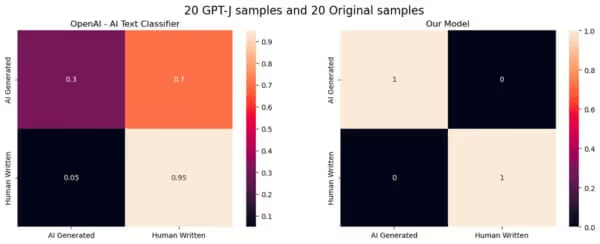

- 20 examples made by another computer program GPT-J and 20 made by people.

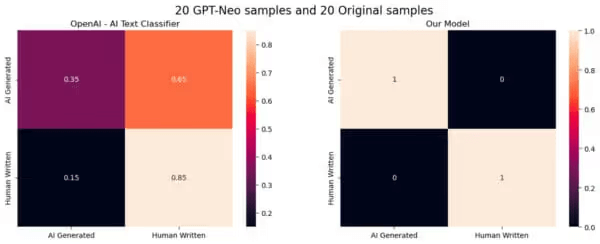

- 20 examples made by a third computer program GPT-Neo, and 20 made by people.

- 20 examples made by yet another computer program GPT-2, and 20 made by people.

- 20 examples changed from the first ones (people changed them) and 20 made directly by people.
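Each of these charts is a two-class confusion matrix. Here is a minimal sketch of how one could be tallied from per-sample labels, with the actual class as rows (Y-axis) and the prediction as columns (X-axis). The function names are our own, and the five sample labels are made up for illustration, not taken from our test.

```python
from collections import Counter

CLASSES = ("AI", "Human")  # order of rows (actual, Y-axis) and columns (predicted, X-axis)


def confusion_matrix(actual: list[str], predicted: list[str]) -> list[list[int]]:
    """Count (actual, predicted) pairs into a 2x2 grid."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    counts = Counter(zip(actual, predicted))
    return [[counts[(a, p)] for p in CLASSES] for a in CLASSES]


def accuracy(matrix: list[list[int]]) -> float:
    """Share of samples on the diagonal, i.e. classified correctly."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


# Made-up example: three AI-written and two human-written samples
actual = ["AI", "AI", "AI", "Human", "Human"]
predicted = ["AI", "Human", "AI", "Human", "AI"]
matrix = confusion_matrix(actual, predicted)
print(matrix)            # [[2, 1], [1, 1]]
print(accuracy(matrix))  # 0.6
```

The top-right cell counts AI-written text the detector missed, and the bottom-left cell counts human-written text it wrongly flagged as AI.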

Let’s look at how the OpenAI Text Classifier stacks up against other tools like Originality.ai.

We see some key differences in how well they work:

- One big difference is that OpenAI has no plagiarism checker, unlike services such as Originality.ai, which can tell whether work is original.

- OpenAI’s tool also isn’t very good at spotting AI-generated content: it flags only about 26% of AI-written text. Originality.ai, by contrast, gets it right 79% of the time, making it much better at this job.

- Finally, while OpenAI tells you whether content looks AI-generated, it doesn’t explain how it reached that verdict.

Why OpenAI’s Text Classifier is Great

If you want to find AI-made content quickly and easily, OpenAI is a good choice. It’s simple to use and gives you clear results fast.

Another plus is that the tool comes from the makers of ChatGPT, so it’s likely to improve over time. And it’s free, unlike some other AI detectors.

Conclusion

Want a fast and simple way to check whether a text was written by AI? The OpenAI Text Classifier is a great pick. It uses a fine-tuned GPT model to estimate whether AI, especially ChatGPT, created the text.

But if you need something more dependable, try Originality.ai. Its pay-as-you-go plan keeps costs down, and it’s highly accurate, with extra features such as plagiarism checking that set it apart.