AI Content Detector & AI Bypasser

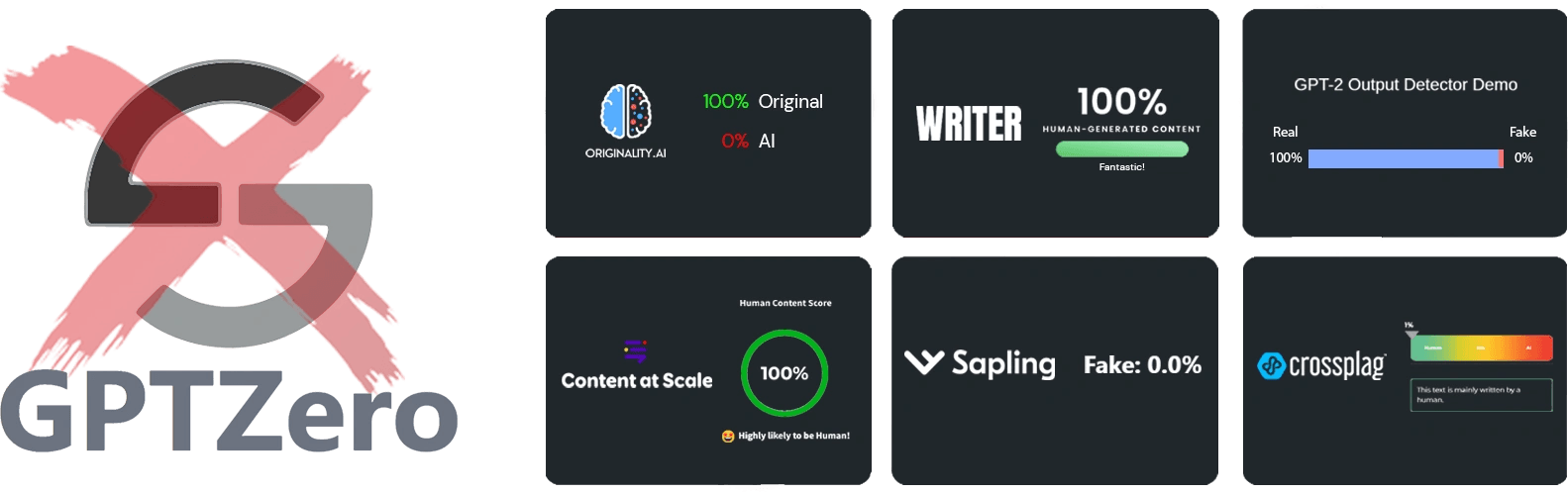

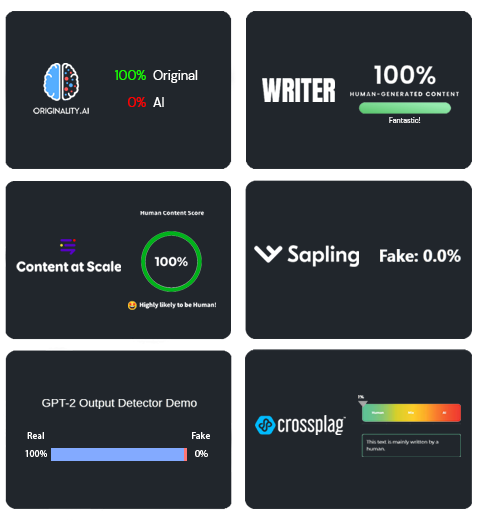

With over 99% accuracy and AI detection coverage that includes ChatGPT and Gemini, you can rest assured that you’re fully covered with the market’s most comprehensive AI detector.

No credit card needed

Avoid getting detected & banned

Google can crack down on AI-generated content. According to a statement from Google SearchLiaison, using automation or AI to produce content with the primary goal of manipulating search engine rankings is a direct violation of Google's spam policies.

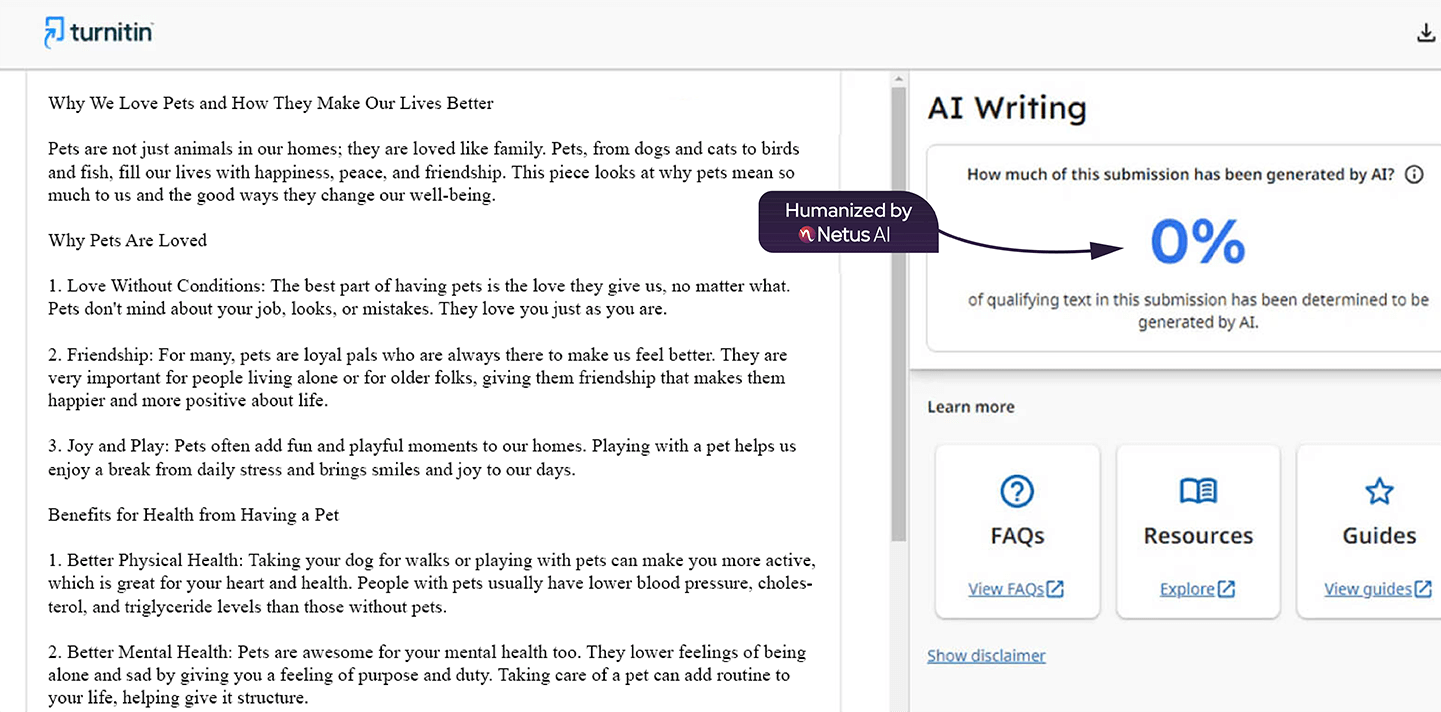

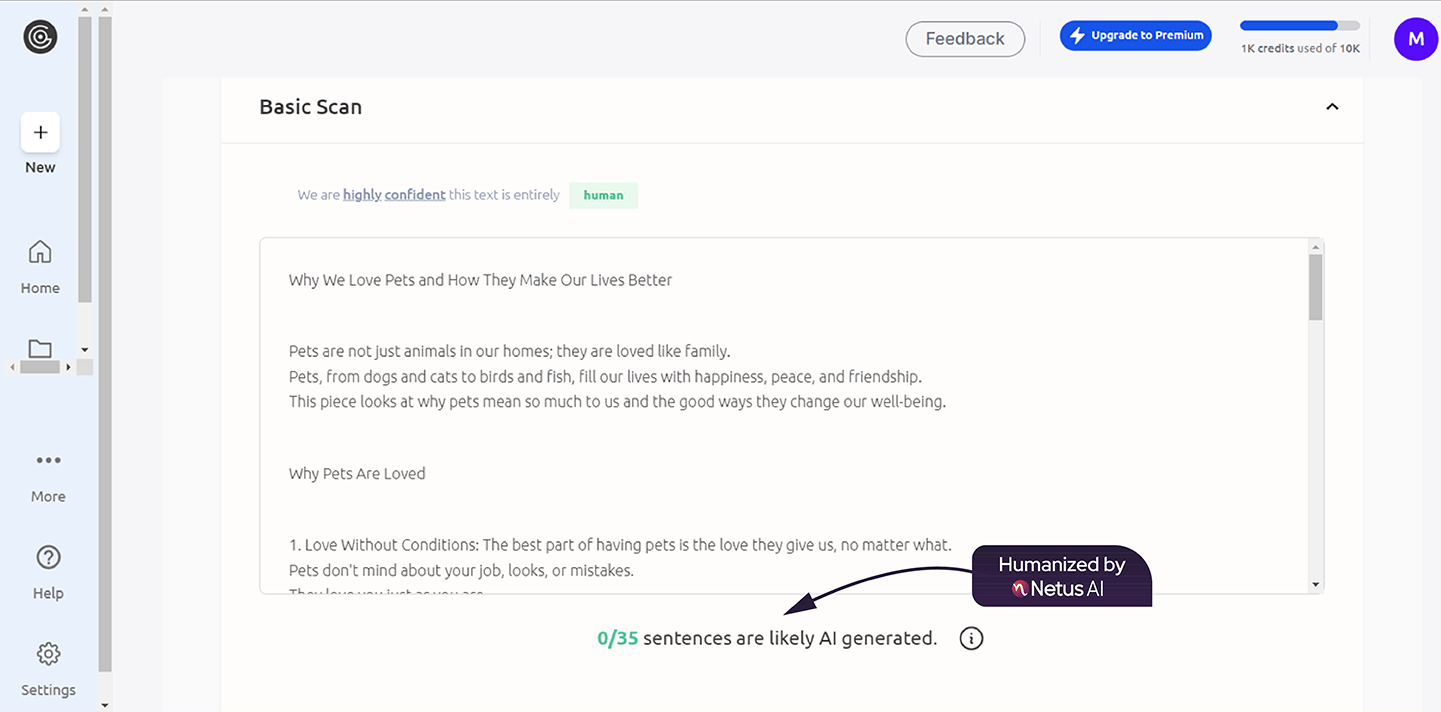

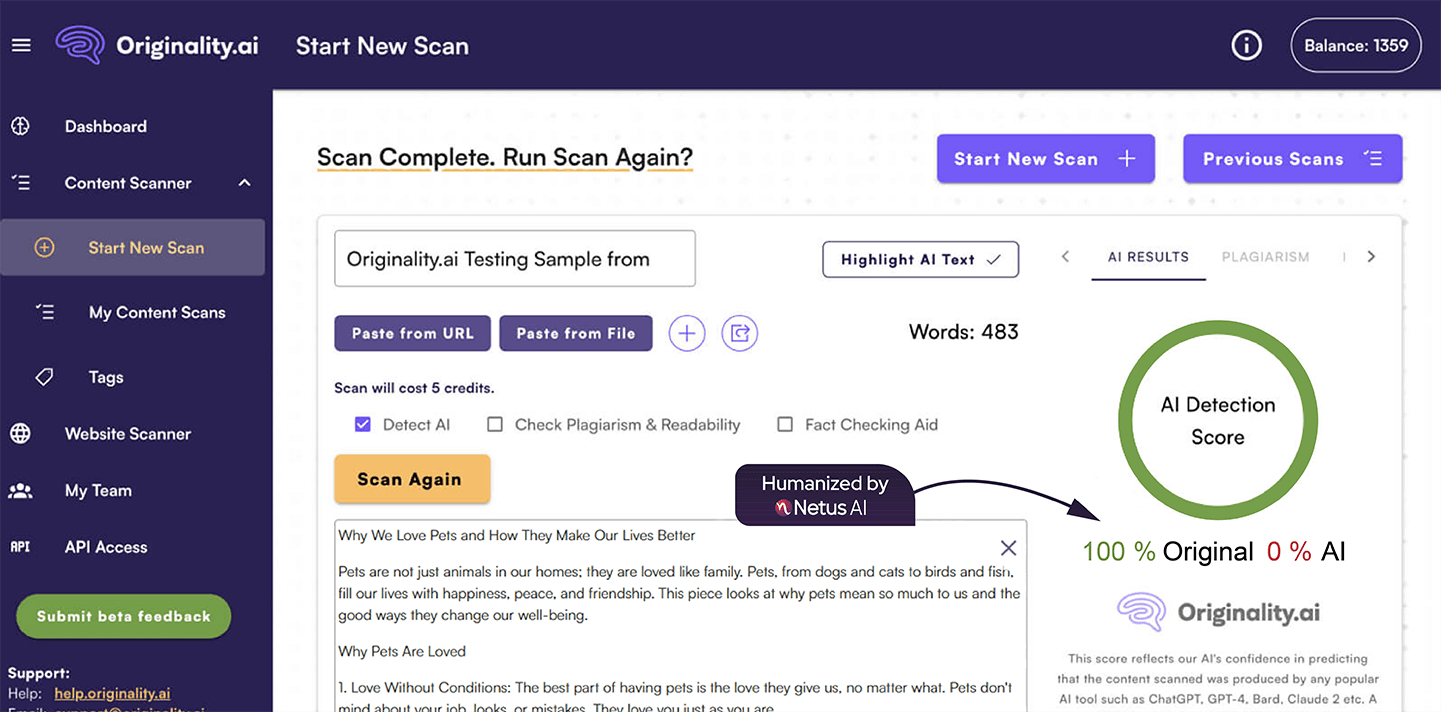

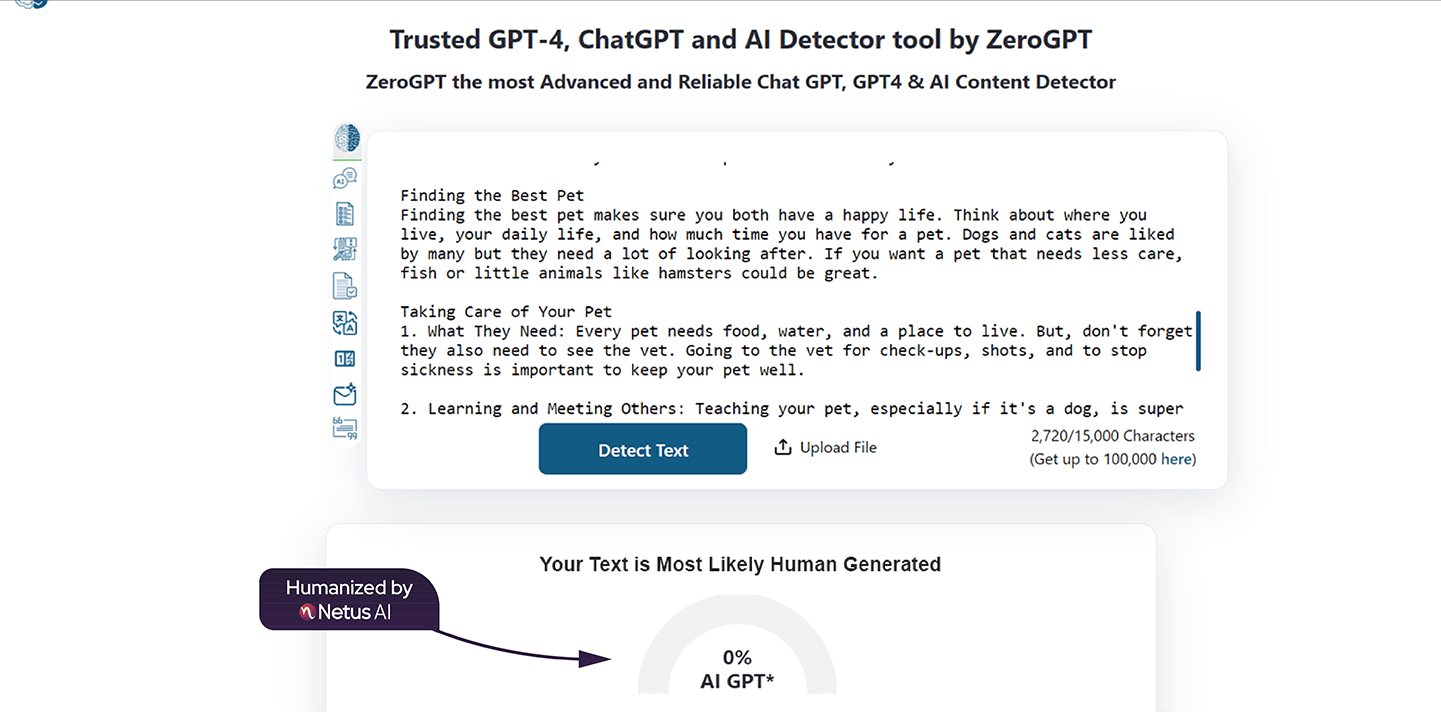

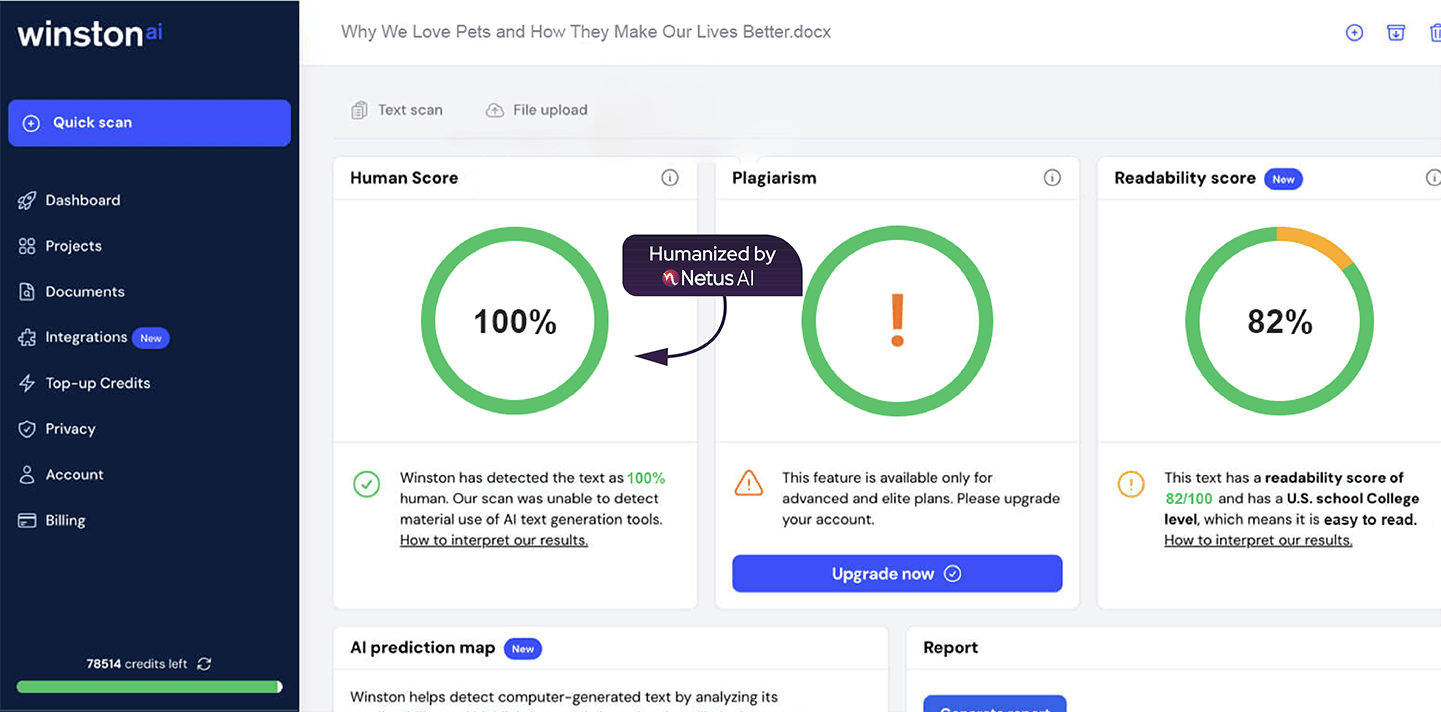

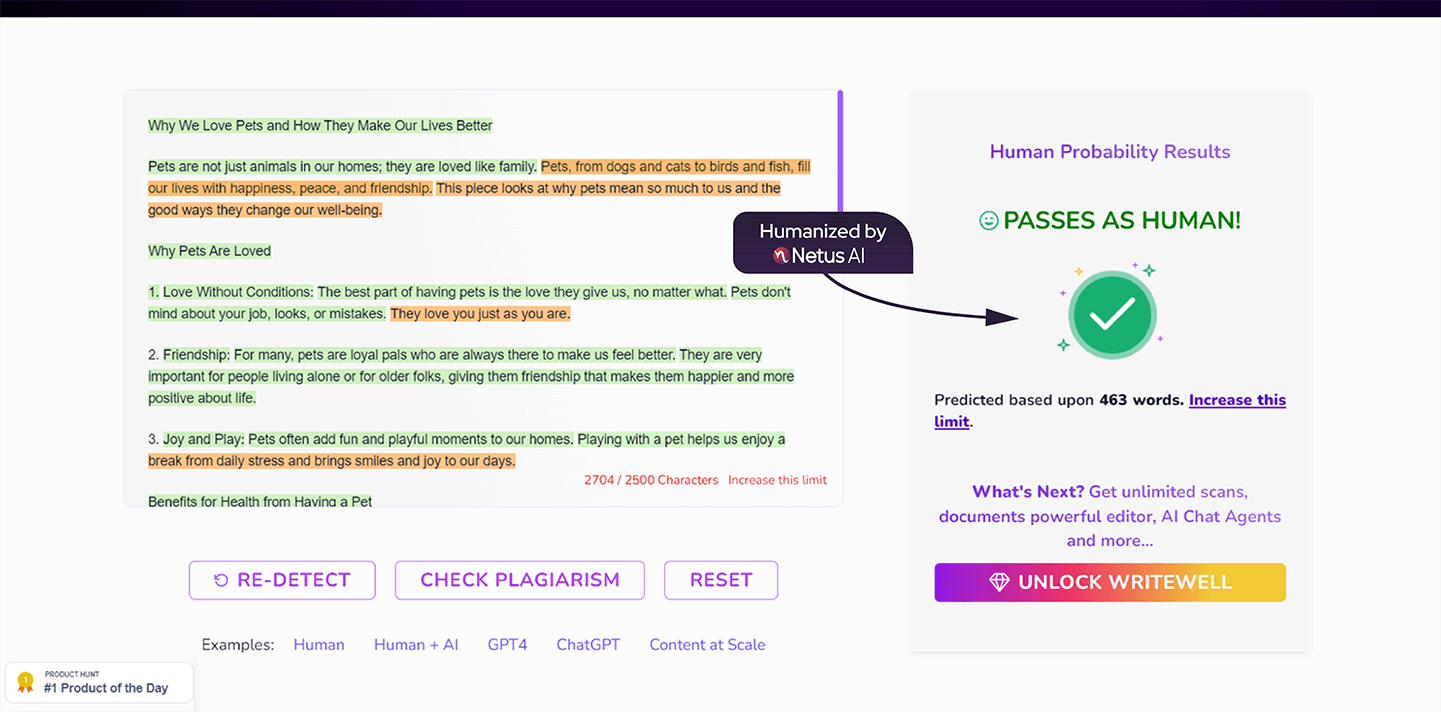

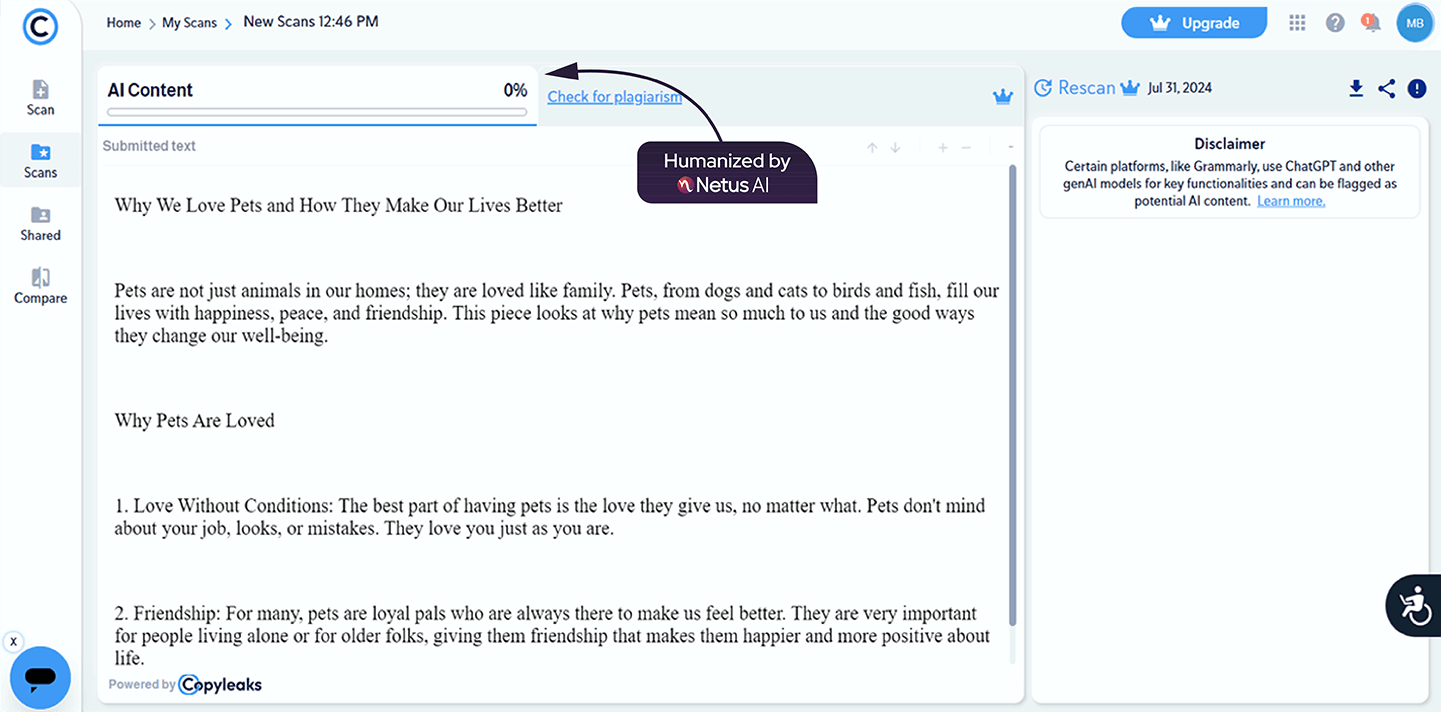

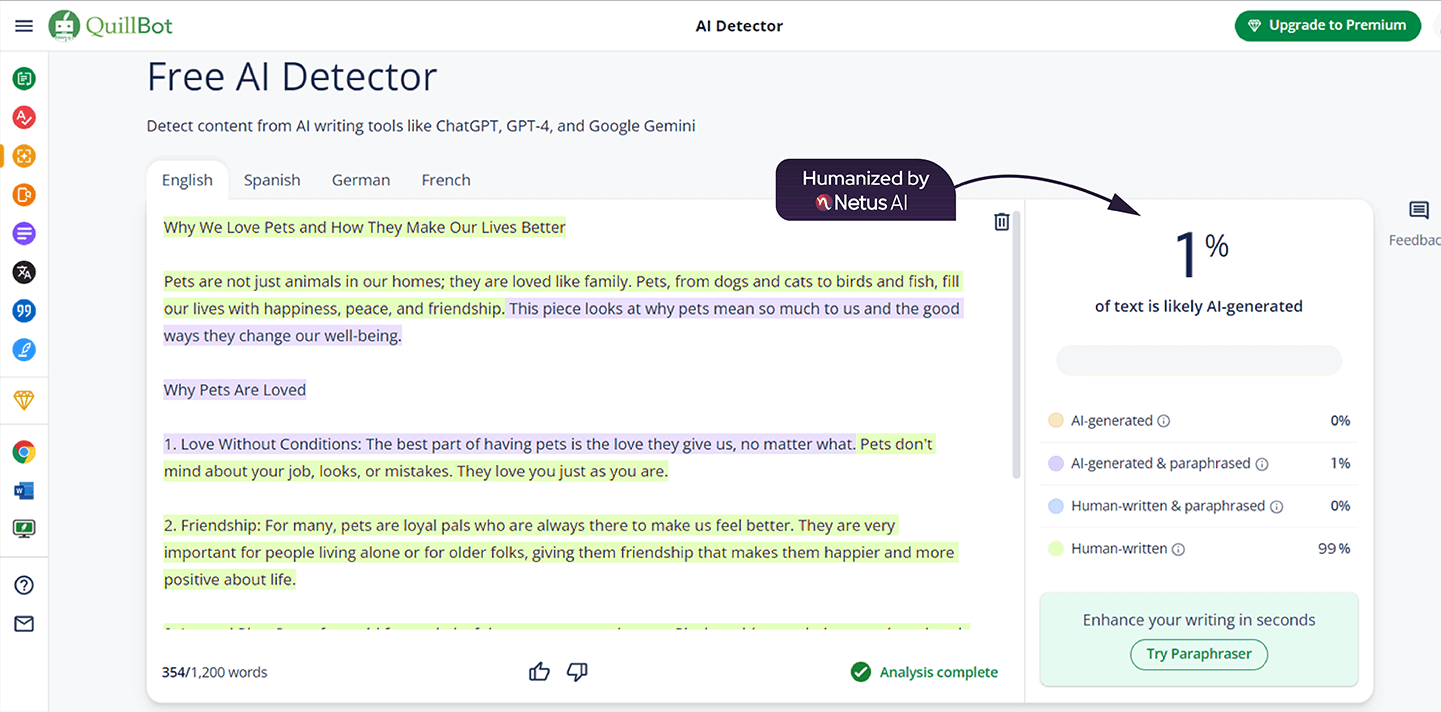

With Netus' AI Bypasser, you can easily bypass AI detectors and avoid getting banned. You can create undetectable AI content that looks and feels like it was written by a human.

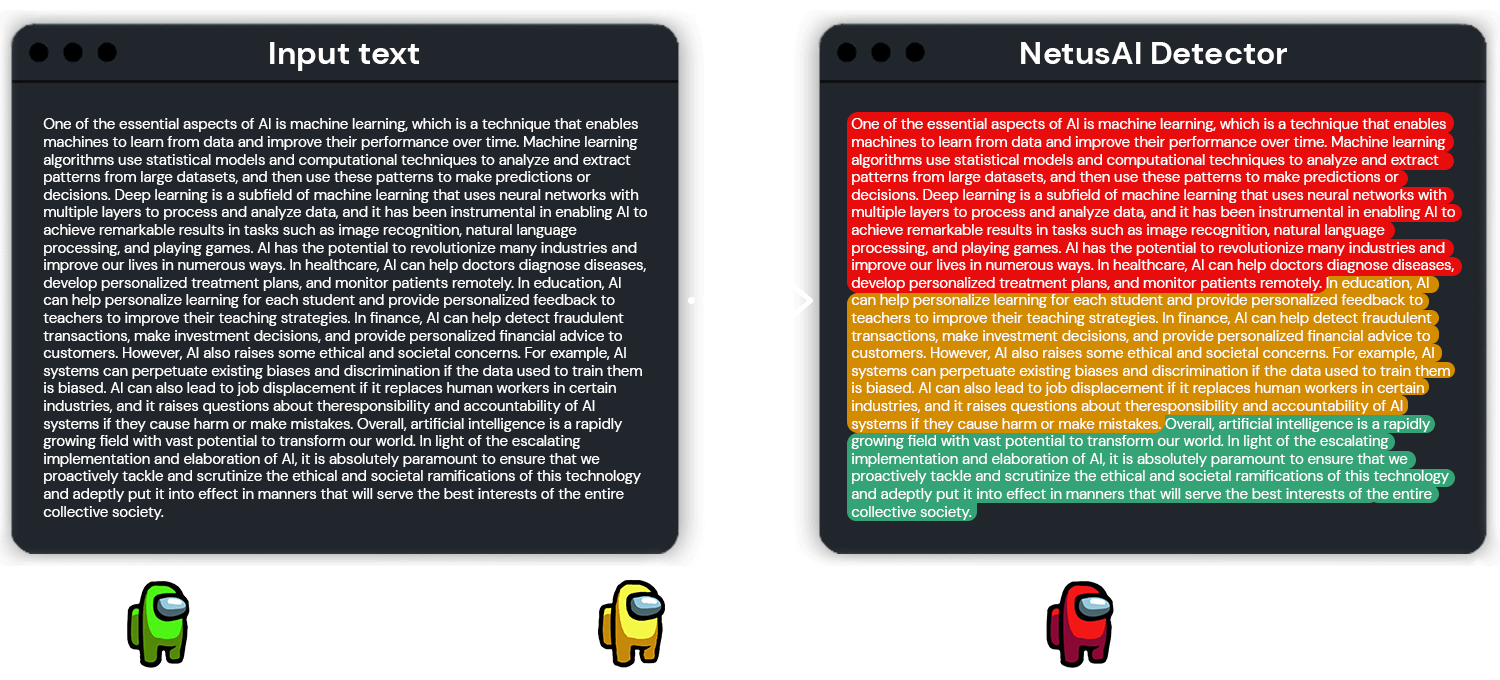

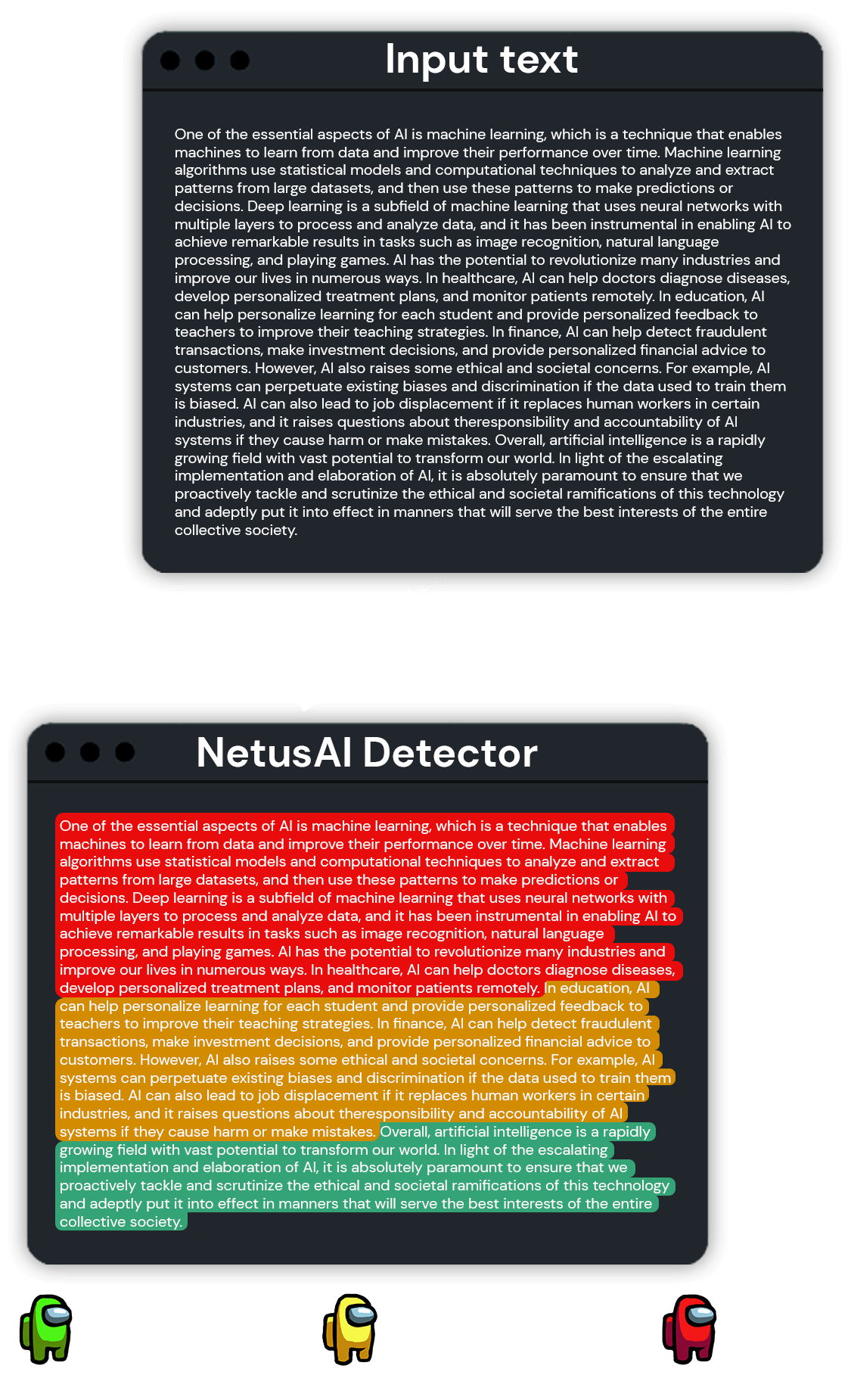

AI detector to differentiate AI and human text

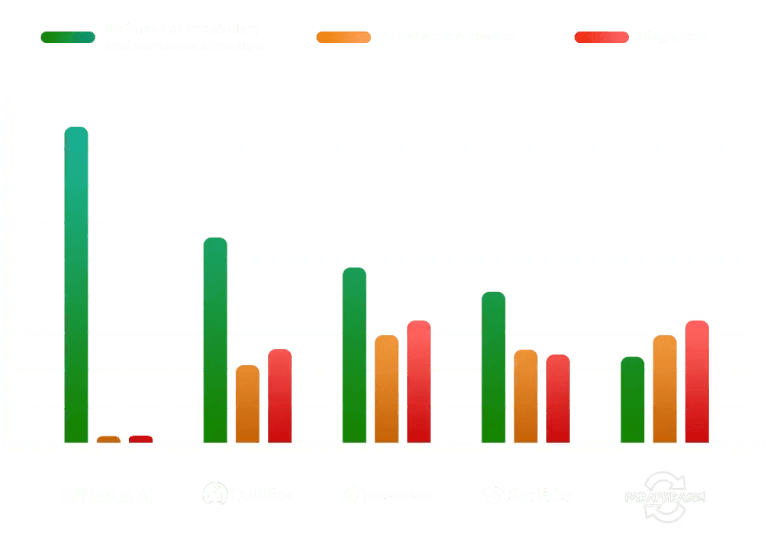

The Netus AI detector is designed to distinguish human-written from AI-generated content with 99% probability. We use the same advanced technology employed by Turnitin. It evaluates textual patterns, context, syntax, and semantics to identify the distinguishing features of AI-generated content. As AI content generation evolves, we continue to stay ahead of the curve.

NetusAI offers one of the best AI bypasser tools

Create amazing things

With Netus AI paraphrasing and summarization tools

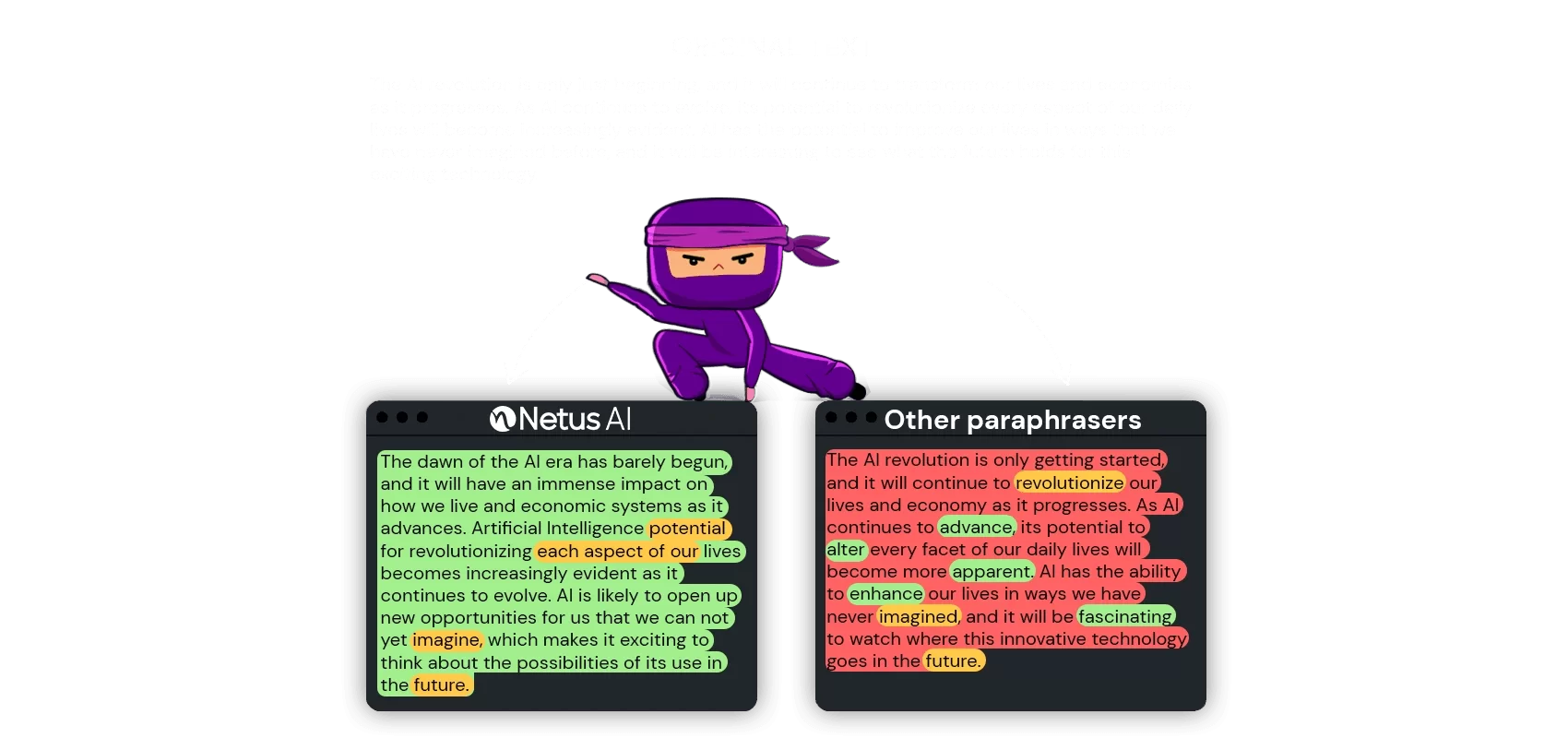

Paraphrasing tool

Netus AI paraphrase tool is designed to help you rephrase text while preserving its meaning. It can help you create unique, high-quality content in a matter of seconds. The model is trained on a vast amount of data, allowing it to understand the nuances of language and produce high-quality paraphrased text.

Summarization tool

Netus AI summarization tool is a powerful tool that can help you quickly and efficiently extract the most important information from a piece of text. It can help you condense long documents into shorter, more manageable summaries. This can be especially useful for tasks such as content curation, research, and information management.

Suitable for all kinds of creators

Our AI writing tools help many types of creators in the digital space.

Digital Marketers

Create an abundance of copy for your ad and landing page tests.

Content Marketers

Increase your content output substantially with our tools.

Founders

Acquire a personal AI writer. Produce material quicker.

SEO Specialists

Our suite of tools will help you create more persuasive meta content and blog topic ideas.

Copywriters

Generate more persuasive copy for your marketing material and products.

Bloggers

We've got you covered. Increase your blogging output with our web app.

100% plagiarism free paraphrasing tool

As we move further into 2024, the evolution of plagiarism detectors continues to make it increasingly challenging to use copied content without being caught. Popular anti-plagiarism tools like Turnitin, Quetext, and Copyscape use sophisticated algorithms to detect similarities between texts, even if they have been paraphrased. However, as detection algorithms advance, so too does the technology behind paraphrasing tools. At NetusAI, we stay ahead of these advancements by developing state-of-the-art technology that paraphrases text while maintaining the original meaning, tone, and style. With a success rate of 99.97%, NetusAI is a reliable solution for anyone who wants to produce high-quality content without the risk of plagiarism.

Fine-tune Netus AI paraphrasing tool with your own style of voice

Turnitin and similar plagiarism tools recently introduced “fingerprint” technology to distinguish a person’s unique writing style. By collecting writing samples, such tools can compare future submissions to this original style and detect discrepancies with up to 97% accuracy, even spotting parts of an essay not entirely written by a human. This is possible because of the predictable word frequency and distribution in machine-generated text, which differs from the more natural writing style of humans. At NetusAI, we understand the importance of staying ahead and are committed to helping you create original and authentic work fast. We offer a fine-tuning option that maintains the original meaning, tone, and style while producing well-written content.

Beat any AI detector with Netus AI Bypasser

Flexible pricing based on credits

| Feature | Free | Basic | Standard | Premium | Elite |
| --- | --- | --- | --- | --- | --- |
| Credits per month | 50 | 1,500 | 10,000 | 40,000 | 100,000 |
| AI Detector | Unlimited usage | Unlimited usage | Unlimited usage | Unlimited usage | Unlimited usage |
| AI Bypasser (words/month) | 500 | 15,000 | 100,000 | 400,000 | 1,000,000 |
| Paraphraser (words/month) | 500 | 15,000 | 100,000 | 400,000 | 1,000,000 |
| Summarizer (words/month) | 500 | 15,000 | 100,000 | 400,000 | 1,000,000 |
| Readability Checker (analyses/month) | 50 | 1,500 | 10,000 | 40,000 | 100,000 |
| SEO-optimized Article Generator | Not enough credits for full articles with 50 credits | Long and short articles | Long and short articles | Long and short articles | Long and short articles |
| Keywords Extractor (input words/month) | 250 | 7,500 | 50,000 | 200,000 | 500,000 |
| Title Generator | Included | Included | Included | Included | Included |
| Slogan Generator | Included | Included | Included | Included | Included |
| Email Writer | Included | Included | Included | Included | Included |
| 100% privacy | Yes | Yes | Yes | Yes | Yes |
| 36 languages support | Yes | Yes | Yes | Yes | Yes |

Credit rates (the same on every plan)

AI Bypasser, Paraphraser, Summarizer: 1 credit = 10 words

Readability Checker: 1 credit = 1 analysis

SEO-optimized Article Generator: 260 credits = 1 long article, 160 credits = 1 short article (the Free plan lists 260 credits = 1 article)

Keywords Extractor: 1 credit = 5 input words

Email Writer: 1 email = 25 credits, 1 email reply = 25 credits, email subject = unlimited, 1 newsletter = 70 credits, 1 cover letter = 70 credits
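
As a rough illustration of the credit arithmetic in the pricing above, the sketch below converts a word count into credits and picks the smallest plan whose monthly allowance covers it. The rates and plan sizes are copied from the pricing list; the function and dictionary names are hypothetical (this is not a Netus AI API), and rounding partial credits up is an assumption, since the page does not say how fractions of a credit are billed.

```python
import math

# Rates and plan sizes copied from the pricing list above.
# All names here are illustrative only, not part of any Netus AI API.
WORDS_PER_CREDIT = {
    "bypasser": 10,
    "paraphraser": 10,
    "summarizer": 10,
    "keywords_extractor": 5,
}
PLAN_CREDITS = {  # listed in ascending order of monthly credits
    "Free": 50,
    "Basic": 1_500,
    "Standard": 10_000,
    "Premium": 40_000,
    "Elite": 100_000,
}


def credits_for_words(tool: str, words: int) -> int:
    """Credits needed to process `words` words with `tool`.

    Assumption: a partially used credit is rounded up to a whole credit.
    """
    return math.ceil(words / WORDS_PER_CREDIT[tool])


def smallest_plan(credits: int):
    """Return the first (smallest) plan whose monthly credits cover `credits`,
    or None if even the largest plan is too small."""
    for name, monthly in PLAN_CREDITS.items():
        if credits <= monthly:
            return name
    return None


if __name__ == "__main__":
    needed = credits_for_words("paraphraser", 12_000)
    print(needed, smallest_plan(needed))
```

For example, 500 words through the Paraphraser works out to 50 credits, which matches the Free plan's "50 credits = 500 words" line.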

Case studies

Supp*******.com, a leading European e-commerce provider of fitness supplements, encountered a critical issue when a rival firm filed a DMCA (Digital Millennium Copyright Act) complaint alleging that the product descriptions on its store were plagiarized. Using the Netus AI tool, the Supp*******.com team rewrote all 800 product descriptions in two weeks. Publishing the rewritten descriptions on the website resulted in 38% growth in organic search traffic and a 45% increase in revenue within the first quarter.

Inte************.org was asked to republish research papers for a nonprofit blog. However, the papers contained specialized, highly technical language that was difficult for non-experts to understand. The team decided to use Netus AI to rewrite the research papers in a way that was more accessible to a wider audience. As a result, the institution was able to produce 267 blog posts in three weeks. The institution’s writers also received positive feedback from readers who appreciated the clarity and accessibility of the papers.

The Newy*********.com news agency wanted to update its readers in a timely and accurate manner. However, given the fast-paced nature of the news industry, it often found itself publishing articles similar to those of its competitors and, because of that, ranked lower than them. Partnering with the NetusAI portal resulted in a significant increase in website traffic (25%) and improved SEO positions for local news.

Upcoming features for Netus AI

Plagiarism checker

You will be able to make sure your content is original by seamlessly checking it with the integrated plagiarism checker.

Content writing module

Netus AI will have an AI content writing module to make the writing process much easier and faster.

Bypass AI detection for every detector

Our advanced AI detection bypassers were created to avoid AI detectors such as Turnitin, Originality.ai, GPTZero, and many others. Our models are trained on over 200 million data points to paraphrase AI-generated text into human-like content, bypassing AI detection tools.

Bypass AI Detectors with Netus AI

The Netus AI bypasser can paraphrase AI text to replicate human writing.

Copy AI-generated content into our bypasser.

Choose one of the bypasser versions and click submit.

Once it bypasses, you’re free to use the content anywhere.

by Eddie Simmons

I tested various AI content detection tools, and one of the top ones I encountered was Netus.ai. Now, with the capability to detect AI-generated paraphrased content, Netus.ai is significantly more powerful.

by Nathan Patterson

Top tier tool for every student. Thank you Netus Team!

by Max Russell

I use this tool since 3 months. It’s one of my fav paraphrasing tools, really helpful and useful.

by Brendan Burnett

I rewrote a 20-page paper in a couple of days, and after that, the rest of the fall vacation was just for me and my snowboard Willy.

by Brian Turner

I work in a marketing company and came across Netus some time ago. The site has helped me to write journalistic content faster and better.

by Gordon Foster

Well done NETUS! You have accomplished something which Google, an enormous powerhouse, has yet to achieve.

Multilingual paraphrasing

The Netus AI multilingual paraphrasing tool can rewrite text and sentences in many languages. It supports 36 languages: Arabic, Bengali, Bulgarian, Chinese, Croatian, Czech, Danish, Dutch, English, Estonian, Finnish, French, German, Greek, Hindi, Hungarian, Indonesian, Italian, Japanese, Korean, Latvian, Lithuanian, Norwegian, Polish, Portuguese, Romanian, Russian, Serbian, Slovak, Slovenian, Spanish, Swedish, Turkish, Thai, Ukrainian, Vietnamese.

Netus AI Rephraser - Undetectable AI Rewriter

In the ever-evolving digital landscape, ensuring the authenticity and integrity of AI-generated content has become paramount. That's where the Netus AI Rephraser steps in to make AI text undetectable. Specifically designed to make ChatGPT output undetectable, the Netus AI Rephraser uses sentence restructuring and word alterations while preserving the original meaning and making the output appear authentic and natural. Creating an undetectable AI rewriter is a significant challenge, but with the right tool it is possible to remove the traces of paraphrasing so that detection algorithms do not flag the content as modified. Effortlessly generate undetectable AI content and bypass AI detection algorithms. Make AI undetectable with the Netus AI paraphrasing tool.

Humanize AI text with Netus AI bypasser tool

In an age where AI-generated content is becoming the norm, the ability to add a human touch to AI-generated text, in other words humanizing AI, is becoming essential. Tools like ChatGPT can generate hundreds of articles in minutes, but their output is often easily detected by AI detectors. Detectability can also undermine the user experience and the authenticity of the content, or even lead to problems. Netus AI is essentially a ChatGPT humanizer. The Netus AI Text Humanizer algorithm modifies AI content to mimic human writing style, allowing users to quickly transform AI-generated content into text that's clear, engaging, and easy to understand. It's like having a personal AI text humanizer at your fingertips. Netus AI understands that turning AI-generated text into human-like writing isn't just about replacing words or rewriting sentences, but about understanding the depth of human language and replicating it as closely as possible. It's about making AI content not just sound human, but feel human! Sign up today, try the Netus AI-to-human text paraphrase tool, and humanize AI text with ease!

FAQs

What is an AI paraphraser?

A paraphraser is a tool designed to help you paraphrase or rewrite text while maintaining the original meaning. It helps users create new versions of a given text by using different vocabulary, sentence structures, synonyms, grammar, and syntax. Professional paraphrasing is often a valuable way to avoid plagiarism, improve readability, or present the same information in a different way. A paraphrasing tool can be especially useful for writers, researchers, students, SEO specialists, copywriters, or anyone who needs AI paraphrasing to present information in a unique and original way while keeping the main idea of the original text. NetusAI can paraphrase formal, informal, and all other types of writing.

How can I use a Netus AI text paraphraser tool?

Using the Netus tool is easy: choose a version, paste your text into the tool, and click Submit. It will generate a paraphrased version for you. Different versions are suitable for different topics. The Netus AI rewording tool works well for paraphrasing scientific texts, blog posts, or texts written by other humans, for doing literature reviews, and for a wide variety of topics.

How can I use a Netus AI bypasser tool?

The Netus AI Humanizing tool is suitable for humanizing output from ChatGPT and other LLMs. Different AI bypasser versions are suitable for different topics, so please experiment to find the most suitable version for your requirements. Simply paste in the text and press the Submit button. For the highest level of stealth, we suggest checking that your content passes both the v1 and v2 detectors.

Does your AI paraphraser support multiple languages?

Yes, our AI paraphraser supports 36 languages including English.

How accurate is the AI that rephrases text provided by Netus AI paraphraser?

Netus AI provides good accuracy and practically the same meaning as the original input. This is done through sophisticated systems that carefully preserve the intended meaning of the original text. This careful process ensures that the resulting outputs are not only accurate but coherent, consistent, and effectively convey the desired message to the reader.

Can Netus AI paraphrasing tool bypass AI detection and act as an AI detection remover or AI bypasser?

Our paraphrasing tool does not ensure that paraphrased content can bypass AI detection. The paraphrasing tool only rewrites the content. Instead, you can use the AI Bypasser tool, which paraphrases content so that it avoids being flagged as AI-generated.

Is there a paraphrasing AI app available for mobile devices?

Yes, we offer a paraphrasing AI app that is available for Android devices. You can download it from the Google Play Store to conveniently access our paraphrasing tool on your Android device. We are also actively working on developing an iOS version of the paraphrase app to cater to iOS device users in the near future. Stay tuned for updates on our progress!

Can your AI rephraser be used as a word changer or rewriter tool?

Yes, our AI rephrase tool can be used as a word changer or rewriter, providing you with alternative word choices and sentence structures. AI rephraser can be used on very short sentences or even phrases.

Can your AI work as a rewording tool to effectively enhance the clarity and readability of my text?

Yes, our AI can act as a rewording tool that can enhance the clarity and readability of your text by providing alternative word choices and sentence structures that improve overall comprehension.

How does your AI text paraphraser ensure the original meaning is preserved during paraphrasing?

Our AI text paraphraser employs sophisticated algorithms to ensure that the original meaning of the text is preserved while providing alternative wording and sentence structures.

Is there a Chrome extension available for your paraphrasing software?

Yes, we offer a convenient free Chrome extension for our paraphrasing tool, allowing you to access it directly from your browser. The extension provides a seamless integration, enabling you to paraphrase text with ease while browsing the web. You can find it here: https://chromewebstore.google.com/detail/netus-ai/lkgnajinfngghpapjkoifknbakmnbcod

Is there a Google Docs add-on available for your paraphrase bot?

Yes, we provide a free Google Docs Add-on for Netus rephrasing bot, allowing you to seamlessly integrate it into your Google Docs workflow. With the add-on, you can conveniently paraphrase text directly within your Google Docs documents, enhancing your writing process. You can find the add-on here on this site: https://workspace.google.com/marketplace/app/netus_ai_paraphrasing_tool/1074096923875

Does your AI rephrase tool include a humanizing text feature?

Yes, our AI Bypasser is designed to humanize content. It rephrases text so that it is not flagged as AI-generated.

Is paraphrasing considered as plagiarism?

Paraphrasing is a useful and legitimate technique for restating information in your own words while retaining the original meaning. By itself, it is not considered plagiarism, and AI that rewrites text is nothing new on the market. However, if paraphrasing is done without citing the sources used, it can become plagiarism.

Is using a paraphrasing tool legal?

Using a sentence restructuring tool or AI paraphraser itself is legal. However, the legality of the content generated by the tool depends on the use of proper source citations. Utilizing paraphrased content as original work may cause you legal or academic trouble.

Welcome to the cutting-edge realm of content creation with NetusAI, your go-to for an undetectable AI experience. With our flagship free undetectable AI tool, we offer a paraphrasing tool that’s second to none. Our Netus AI bypasser is crafted with precision to produce undetectable content. The AI Bypass functionality frees you from the clutches of AI detection. We’ve designed this platform to be a haven for writers seeking the best paraphrasing tool to avoid AI detection.

This tool ensures that your paraphrased content is not just undetectable by AI, but also enriched with the finesse of a human touch, thanks to the AI-to-human paraphrase functionality.

For those seeking a comprehensive AI paraphrasing tool to avoid AI detection, our undetectable AI paraphraser stands ready to serve. And for those wary of Turnitin’s gaze, our undetectable AI tool promises peace of mind. Choose NetusAI for a paraphrasing experience that’s truly undetectable, seamless, and above all, authentically human.