You followed every rule. You wrote it yourself. You double-checked it for clarity. But the AI detector still screamed: “Generated.” This happens more often than people think, especially with essays, blog posts or marketing copy that sound polished.

That’s because AI detectors aren’t asking who wrote it. They’re asking how predictable it is.

The fix isn’t to give up on tools; it’s to use the right ones. Most “bypass” tools only shuffle words. The smarter ones rework rhythm, tone and intent, aiming not just for passing scores but for trust.

What Makes AI Content Detectable?

AI detectors don’t “read” your content the way humans do; they scan it for patterns. Specifically, they look for mathematical footprints that scream: “This was machine-generated.”

Here’s what triggers the red flags:

Low Perplexity

AI tends to write in a clean, overly predictable flow. Detectors spot this by using a language model to measure how easy it is to guess each next word. The lower the text’s “perplexity,” the more AI-like it looks.
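Concretely, perplexity is the exponential of the average negative log-probability a language model assigns to each word that actually appears: PPL = exp(−(1/N) · Σ log p(wᵢ | previous words)). Here is a minimal Python sketch of just that arithmetic. It assumes you already have per-token probabilities from some language model (the numbers below are invented for illustration); real detectors use their own models and thresholds, which aren’t public.

```python
import math
from typing import Sequence


def perplexity(token_probs: Sequence[float]) -> float:
    """Perplexity = exp of the average negative log-probability per token.

    Assumption: token_probs holds the probability a language model gave to
    each token that actually appeared; no model is included in this sketch.
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    if any(p <= 0 or p > 1 for p in token_probs):
        raise ValueError("probabilities must be in (0, 1]")
    avg_neg_log_prob = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log_prob)


# Invented example values: a model that found every next word easy to guess...
predictable = [0.9, 0.8, 0.95, 0.85]
# ...versus one that was often surprised.
surprising = [0.2, 0.05, 0.3, 0.1]

print(f"predictable text: {perplexity(predictable):.2f}")
print(f"surprising text:  {perplexity(surprising):.2f}")
```

As a sanity check, a model that gives every token a probability of 0.5 yields a perplexity of exactly 2, roughly “two equally likely choices per word.” The predictable list lands close to 1; the surprising one scores several times higher.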

Flat Burstiness

Humans naturally vary sentence length and rhythm. AI often doesn’t. If your writing flows with too much symmetry or uniform structure, it can be flagged for lacking “burstiness.”
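There’s no single agreed formula for burstiness, and detectors don’t publish theirs. One simple proxy, assumed here purely for illustration, is the coefficient of variation of sentence length: the standard deviation of sentence lengths divided by their mean. A minimal Python sketch:

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Word counts per sentence, using a naive split after ., ! or ?."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (std / mean).

    0.0 means every sentence has the same length; higher means a more
    varied rhythm. Illustrative proxy only, not any detector's formula.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


flat = "The tool is fast. The tool is cheap. The tool is good."
varied = ("Stop. This sentence, by contrast, rambles on for quite a few more "
          "words than the first. Why?")

print(sentence_lengths(flat), round(burstiness(flat), 2))
print(sentence_lengths(varied), round(burstiness(varied), 2))
```

The flat sample scores 0.0 because every sentence is four words long; the varied one (1, 15 and 1 words) scores above 1. The sentence splitter is deliberately naive and will, for example, split after abbreviations like “e.g.”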

Stylometric Fingerprints

Stylometry analyzes writing style, from passive voice to punctuation use. AI output has certain quirks (like uniform formatting or repetitive phrasing) that can reveal its origins.
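Real stylometric models use many features, and commercial detectors don’t disclose theirs. The sketch below computes a few classic, easy-to-explain ones (vocabulary variety, word length, comma rate and how dominant the most common word is) just to show what “measuring style” means in practice. The feature set and the stylometric_profile function are illustrative assumptions, not any detector’s actual method.

```python
import re
from collections import Counter


def stylometric_profile(text: str) -> dict[str, float]:
    """A few classic, easy-to-compute style features (illustrative only)."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return {}
    counts = Counter(words)
    n = len(words)
    return {
        # Share of distinct words: low values suggest repetitive phrasing.
        "type_token_ratio": len(counts) / n,
        # Mean word length in characters.
        "avg_word_length": sum(len(w) for w in words) / n,
        # Punctuation habit, normalised per 100 words.
        "commas_per_100_words": 100 * text.count(",") / n,
        # How dominant the single most frequent word is.
        "top_word_share": counts.most_common(1)[0][1] / n,
    }


repetitive = "The tool is great and the tool is fast and the tool is cheap."
varied = "Rain hammered the old tin roof while we argued, laughing, about nothing."

for label, sample in [("repetitive", repetitive), ("varied", varied)]:
    profile = stylometric_profile(sample)
    print(label, {k: round(v, 2) for k, v in profile.items()})
```

The repetitive sample uses only seven distinct words out of fourteen, giving a type-token ratio of 0.5, while every word in the varied sample is distinct (ratio 1.0).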

Lack of Contextual Reasoning

AI tools often lack nuance and context, which produces generic, easily detected content. That’s also why basic paraphrasers often fail: they tweak words, not patterns.

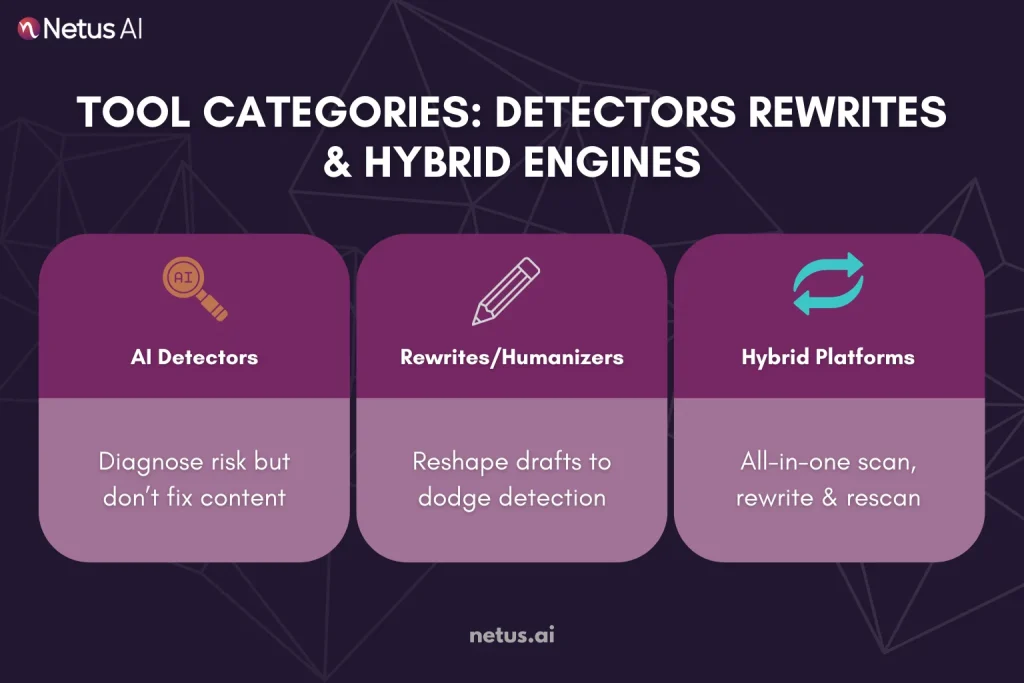

Tool Categories: Detectors, Rewriters & Hybrid Engines

Before exploring AI humanizers, it helps to understand the three main tool categories. Choosing the wrong one can waste time or even make things worse.

1. AI Detectors

These tools don’t change your content; they analyze it. AI content detectors such as OriginalityAI, ZeroGPT, and Turnitin look for patterns associated with AI-generated text, including low perplexity, uniform sentence length, and telltale stylistic habits.

- Purpose: Risk Assessment

- Use Case: Assess your blog, essay, or email drafts for AI detection risks.

- Reality Check: These tools are great for diagnostics, but they don’t help you fix anything.

2. Rewriters / Humanizers

These tools take an AI-generated draft and reshape it, not just reword it. Top AI content tools go beyond synonyms, adjusting rhythm, flow, and tone for human-like writing. Examples include StealthWriterAI, HumanizeAI and NetusAI.

- Purpose: Bypassing Detection

- Use Case: You’ve written with ChatGPT or Gemini, but it gets flagged. These tools rewrite the content to reduce its AI signals.

- Reality Check: Many free tools claim to humanize, but few truly understand detection algorithms. If it reads like a thesaurus remix, it likely won’t pass.

3. Hybrid Platforms

These are the powerhouses: tools that combine detection and rewriting in a single workflow. They let users scan, edit, rescan and refine, all from one interface.

This feedback loop is what makes tools like NetusAI bypasser (Bypasser V2 Engine) stand out.

- Purpose: Full Loop Humanization

- Use Case: Instead of bouncing between tools, you rewrite, check and tweak on the fly. This is especially helpful for high-volume writers or anyone working under deadline pressure.

- Reality Check: These platforms are built for strategic rewriting, not just cosmetic paraphrasing.

Best Tools to Make AI Content Undetectable

Below is a breakdown of the most talked-about tools, tested, compared and categorized by what they actually do.

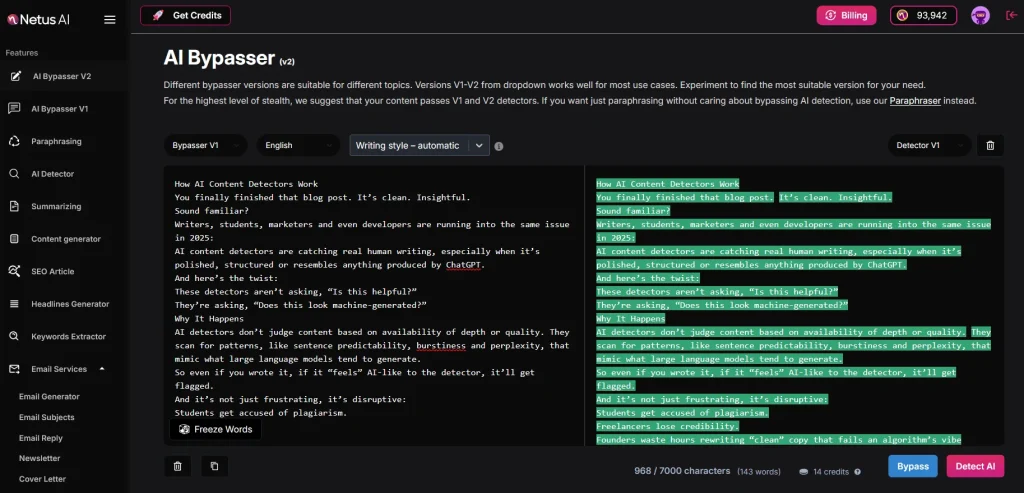

1. NetusAI

Type: Hybrid Engine (Detection + Rewriting)

Why It Stands Out:

NetusAI bypasser doesn’t just paraphrase; it restructures your content’s tone, cadence and semantic rhythm. Its Bypasser V2 Engine allows real-time testing inside the same interface, so you can keep tweaking until your copy reads as 100% human.

- Instant detection preview: 🟢 Human / 🟡 Unclear / 🔴 Detected

- Built-in AI Bypasser optimized for academic and SEO text

- Supports 36+ languages, long-form rewriting and original generation

- No aggressive upsells, full history tracking and credit transparency

Best For: Marketers, students and SEO writers aiming for detection-safe content with minimal editing.

2. HumanizeAI

Type: Rewriter

Key Features:

- Rewrites up to 3,000 characters per request

- Targets detection patterns like repetition and syntax flatness

- UI is clean and beginner-friendly

Limitations: Lacks built-in detection or a feedback loop, so you have to test the output manually in a separate detector.

3. StealthWriter

Type: Rewriter

Key Features:

- Offers tone adjustments (e.g., casual, academic, witty)

- Targets stylometry-based signals like passive voice and structure

- Allows batch processing

Limitations: Detection performance varies by input style; less transparent about rewrite mechanics.

4. Originality

Type: Detector

Key Features:

- Detection scores + readability + plagiarism check

- Team management features for agencies

- API access for bulk scans

Limitations: No rewriting support; not ideal for solo creators looking to fix content on the spot.

5. ZeroGPT

Type: Detector

Key Features:

- Simple text-based scanner

- Highlights suspicious areas

- Free access with word count limits

Limitations: Basic compared to Originality; detection accuracy isn’t always consistent.

How to Pick the Right AI Bypasser Tool for You

With so many AI rewriters and detectors out there, the best tool for you depends on what you’re actually trying to do. Here’s a breakdown based on common goals:

Students & Academic Writers

Your Priority: Avoid Turnitin flags without losing structure.

Best Fit:

- NetusAI (bypass engine + real-time detection feedback)

- Avoid tools that only rephrase a few words; academic detectors rely on stylometry.

SEO Writers & Marketers

Your Priority: Get ranked without being penalized for AI patterns.

Best Fit:

- NetusAI (optimized for long-form SEO and detection-safe rewrites)

- HumanizeAI (if you want light edits but still need manual detection testing)

Researchers & Fact Writers

Your Priority: Keep technical accuracy intact while rewriting.

Best Fit:

- StealthWriterAI (tone options + passive voice control)

- Always verify citations; most AI rewriters don’t fact-check.

Bloggers & Copywriters

Your Priority: Make content sound human, emotional and engaging.

Best Fit:

- NetusAI (tone shifting + burstiness editing)

- HumanizeAI (fast tweaks for social or newsletter content)

Agencies & Editors

Your Priority: Scale rewriting across teams or clients.

Best Fit:

- OriginalityAI (for team detection + reporting)

- Pair it with NetusAI or StealthWriter to actually humanize at scale

If you’re switching between separate detection and rewriting tools, you’re wasting time. Tools like NetusAI that unify the whole process save effort and help protect your credibility.

Final Thoughts

AI content detection is now built into publishing, search, and hiring, and it’s far from perfect. Even your own writing can get flagged just for sounding “too clean.”

That’s the frustrating part: detectors aren’t asking who wrote it; they’re scanning how it was written. So while it’s tempting to blame the tools, the smarter move is to adapt. Tools like NetusAI bypasser don’t just beat detectors; they help align your writing with human rhythm, intent and flow. That’s what gets seen, trusted and remembered.

FAQs

1. Does adding typos, emojis or slang help bypass AI detectors?

Usually not. AI detectors analyze deeper patterns like sentence structure and rhythm, not just spelling or grammar. Random noise makes writing messy but won’t fool models trained to spot algorithmic cadence.

2. Why did my original, human-written content get flagged?

AI detectors often flag overly clean or conventional writing, even when a human wrote it. Polished human content, like a well-edited blog post, can show the same low perplexity and limited burstiness that detectors associate with AI.

3. Can ChatGPT pass AI detection if I just tweak the prompt?

Usually not. Even advanced prompting tends to leave detectable AI patterns. Tools like NetusAI rewrite content to break these deeper signals.

4. Which tool rewrites AI content without ruining meaning?

NetusAI reworks sentence structure, burstiness, and tone to bypass AI detectors without changing your message. Other tools like HumanizeAI or StealthWriterAI offer similar features but may lack the same nuance.

5. What’s the best workflow to stay undetectable?

To make AI-generated content undetectable, first draft the content. Then scan it with an AI detector. Rewrite any sections flagged as AI-generated using a tool such as NetusAI, and repeat the scan-and-rewrite cycle until the detector rates the content as “Human.”
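That workflow is essentially a loop with a stopping rule. Here’s a minimal Python sketch of it. The detect and rewrite arguments are hypothetical callables standing in for whichever detector and rewriter you use (no real tool’s API is shown), and the 0.5 threshold and five-round cap are arbitrary assumptions. For simplicity it rewrites the whole text each round rather than only the flagged sections.

```python
from typing import Callable, Tuple


def scan_rewrite_loop(
    draft: str,
    detect: Callable[[str], float],
    rewrite: Callable[[str], str],
    threshold: float = 0.5,
    max_rounds: int = 5,
) -> Tuple[str, float, int]:
    """Scan, rewrite, rescan; stop once the score drops below threshold.

    detect and rewrite are hypothetical stand-ins. detect is assumed to
    return an "AI likelihood" in [0, 1]. max_rounds caps the loop so it
    cannot run forever if the score never improves.
    """
    text = draft
    score = detect(text)
    rounds = 0
    while score >= threshold and rounds < max_rounds:
        text = rewrite(text)
        score = detect(text)
        rounds += 1
    return text, score, rounds


# Toy stand-ins so the sketch runs end to end; they do not model a real detector.
def toy_detect(text: str) -> float:
    return 1.0 / (1 + text.count("!"))


def toy_rewrite(text: str) -> str:
    return text + "!"


final_text, final_score, rounds = scan_rewrite_loop("Draft", toy_detect, toy_rewrite)
print(final_text, round(final_score, 2), rounds)
```

The round cap matters in practice: if a rewrite never lowers the score, the loop stops instead of cycling forever, and that’s the point to step in and edit by hand.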

6. Can AI detectors identify hybrid content (AI + human edits)?

Sometimes. Rewriting AI content by hand can lower its detection score, but stylometric analysis may still flag it. Tools like NetusAI offer smarter rewrites that target detector logic directly.

7. Are paraphrasing tools enough to beat detectors?

Simple paraphrasers are insufficient because detectors analyze pacing, tone, and structural predictability, not just vocabulary. Modern bypass tools must alter writing style, not just content.

8. Will future AI detectors get harder to fool?

Probably. AI detection is evolving toward watermarking and provenance chains that work like digital fingerprints. Projects such as C2PA and OpenAI’s research suggest detection will become more traceable, making smart humanization more important.

9. Is it legal or ethical to bypass AI detection?

It depends on the context. For marketers, bloggers or entrepreneurs, humanizing AI writing is about clarity, trust and visibility. But for students or journalists, it gets murky. Platforms like Turnitin or Substack may treat detected content as misconduct. NetusAI encourages responsible use: fix tone and structure, not fabricate authorship.