If your content sounds too perfect, it might get penalized. That’s the uncomfortable truth of writing with AI.

The problem? AI detectors aren’t judging whether your content is actually helpful. They’re scanning for signs that it might be machine-generated. And if it trips that wire, it risks being flagged, pushed down in search results or even questioned in academic settings.

That’s where the idea of “humanized AI content” comes in. Writing should go beyond mere clarity; it should emulate the organic, unpredictable and emotionally intelligent style of human authors.

Clarity alone isn’t enough. You also have to convince the bots you’re not one of them.

Why ‘Sounding Human’ Became Table Stakes for AI Writers

Paraphrasing isn’t enough anymore. Swapping synonyms in AI-written text won’t bypass detectors, which analyze writing style, not just words.

Humanized content breaks away from the mechanical patterns detectors look for. It adds:

- Voice: personal tone, nuance, even humor.

- Intent: clear reasoning, not generic filler.

- Emotional flow: shifts in energy, rhythm and surprise.

- Unpredictability: no templated transitions or robotic structure.

Real humanization isn’t just about swapping words or tweaking sentences. It’s about adding those little quirks, personal touches and tiny flaws that make writing feel real, like a person wrote it, not a computer.

That’s where tools like NetusAI differ from traditional paraphrasers. NetusAI doesn’t just change words; it completely rewrites sentences to fool AI detectors such as Turnitin’s AI classifier.

It’s like having a rewrite assistant trained to dodge detectors and sound human.

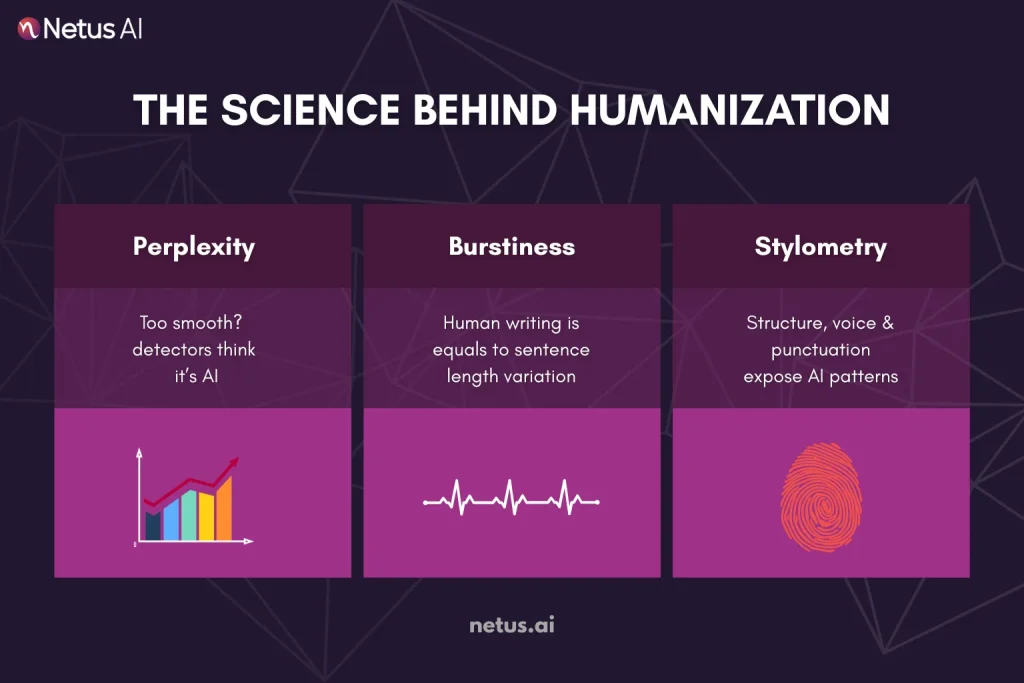

The Science Behind Humanization

If your content reads too “perfect,” that’s a problem. AI detectors aren’t reading your work like a human editor. They scan patterns using metrics like:

Perplexity

Perplexity measures how predictable a text is to a language model. Low perplexity indicates predictable, machine-like text, which is common in AI-generated content. However, human-written content can also score low, which leads to detector errors.
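Formally, perplexity is the exponential of the average negative log-probability a model assigns to each token: PPL = exp(-(1/N) Σ log p_i). The minimal sketch below computes it from a list of per-token probabilities. It assumes you already have those probabilities from some language model; the values here are invented for illustration, and real detectors may score and threshold perplexity differently.

```python
import math


def perplexity(token_probs: list[float]) -> float:
    """Perplexity = exp(average negative log-probability per token)."""
    if not token_probs or any(not 0 < p <= 1 for p in token_probs):
        raise ValueError("need one or more probabilities in (0, 1]")
    avg_neg_log_prob = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log_prob)


# Hypothetical probabilities a language model assigned to each token.
predictable = [0.9, 0.8, 0.95, 0.85]  # the model was rarely surprised
surprising = [0.2, 0.05, 0.4, 0.1]    # the model was often surprised

print(round(perplexity(predictable), 2))  # low perplexity
print(round(perplexity(surprising), 2))   # high perplexity
```

The first list scores about 1.15 and the second about 7.07: the lower the value, the easier the text was for the model to predict.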

Burstiness

Burstiness measures how much sentence length and complexity vary across a text. Human writers tend to vary them more than AI models do, so little variation is a red flag.
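There is no single standard formula for burstiness. One simple proxy, assumed here for illustration, is the coefficient of variation of sentence length (standard deviation divided by mean). The sentence splitter is a naive regex, and real detectors may instead measure variation in per-sentence perplexity.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Split after ., ! or ? followed by whitespace; count words per sentence."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (std dev / mean).

    0.0 means every sentence has the same length; higher means more variation.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


uniform = "The tool is fast. The tool is cheap. The tool is good."
varied = ("It worked. After three drafts and a long night of edits, "
          "the essay finally read like me. Barely.")

print(round(burstiness(uniform), 2))
print(round(burstiness(varied), 2))
```

The repetitive sample scores 0.0 because every sentence has four words, while the varied sample (2, 15 and 1 words) scores above 1.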

Stylometry

Stylometry analyzes writing for an author’s stylistic fingerprints, such as sentence rhythm, use of passive voice, punctuation and structure. Some AI detectors use these signals to identify AI-generated content.
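Here is a minimal sketch of a stylometric profile, assuming three hand-picked features: average sentence length, commas per sentence and the share of sentences containing a passive-looking phrase. The passive-voice regex is a crude heuristic (it misses some passives and catches some false positives), and production stylometry systems use far richer feature sets.

```python
import re
from dataclasses import dataclass

# Crude heuristic: a form of "to be" followed by a word ending in -ed or -en.
PASSIVE = re.compile(r"\b(?:is|are|was|were|be|been|being)\s+\w+(?:ed|en)\b",
                     re.IGNORECASE)


@dataclass
class StyleProfile:
    avg_sentence_words: float
    commas_per_sentence: float
    passive_ratio: float  # share of sentences with a passive-looking phrase


def style_profile(text: str) -> StyleProfile:
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    n = len(sentences)
    if n == 0:
        return StyleProfile(0.0, 0.0, 0.0)
    words = sum(len(s.split()) for s in sentences)
    commas = text.count(",")
    passive = sum(1 for s in sentences if PASSIVE.search(s))
    return StyleProfile(words / n, commas / n, passive / n)


sample = "The report was written by the team. We checked it twice, then sent it."
print(style_profile(sample))
```

Comparing such profiles across many documents is what lets a stylometric model tell one writing style from another.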

This is where the NetusAI bypasser steps in. Rather than simply rewording, it targets the signals detectors measure and rewrites your text to be varied and clear again.

You’re not just paraphrasing anymore. You’re actively breaking the AI pattern.

Why AI Content Risks More Than Just Detection

It’s not enough to pass detection; your content also has to earn trust.

Search Engine Rankings

Google’s ranking systems keep evolving. Since March 2024, the Helpful Content System has been folded into Google’s core ranking systems, which aim to reward content that demonstrates experience, expertise, authoritativeness and trustworthiness (E-E-A-T).

Even great AI writing can miss the personal touches Google loves, like real stories or a genuine voice.

Reader Trust Signals

Many readers say they can sense when something is AI-written, even without a detector. That slight robotic tone makes content feel impersonal. Humanized writing, on the other hand, builds emotional resonance.

Academic & Platform Enforcement

Platforms like Turnitin have already flagged students for using AI, even in hybrid drafts. Although Turnitin says its AI flags shouldn’t be the sole basis for suspecting misconduct, students have still faced consequences.

With the NetusAI bypass tool, you’re not just bypassing AI detection; you’re restoring authenticity. That means keeping your rankings, reputation and reach intact.

Workflow Blueprint: Detect → Rewrite → Retest with NetusAI

Humanizing AI content isn’t a one-click solution. It’s a loop, and NetusAI was built for it.

Step 1: Detect

Drop your text into NetusAI’s real-time AI Detector. It scans your draft and instantly flags areas likely to trigger ZeroGPT-style tools or originality checkers. Each section gets a verdict:

🟢 Human, 🟡 Unclear, 🔴 Detected.

This helps you identify which parts of your writing sound “too AI,” instead of guessing blindly.

Step 2: Rewrite (Smart, Not Just Synonyms)

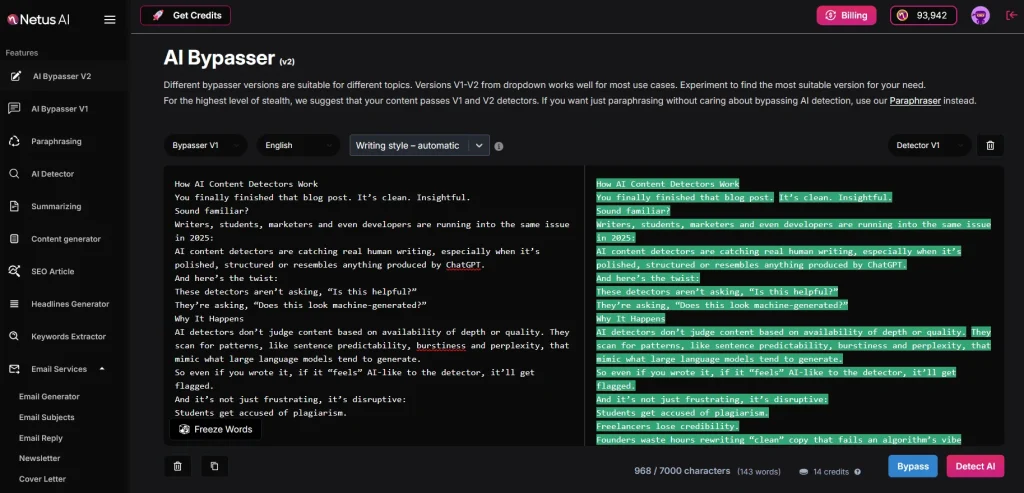

NetusAI’s Bypass Engines (V1 & V2) go beyond word swaps.

They reshape tone, structure, pacing and rhythm: the deeper signals many detectors rely on.

Choose from two rewriting engines:

- for fixing sensitive paragraphs

- for polishing full sections

Unlike with other tools, you’re not left wondering what changed: every output is crafted for variation without losing your meaning.

Step 3: Retest Instantly

Run your rewritten draft right back through the detector.

If it still gets flagged? Tweak, test again, all without leaving the page. It’s built for trial → feedback → approval, not guesswork.

Why This Loop Wins

You don’t have to wonder whether your rewrite “worked”: you watch it turn from 🔴 to 🟢.

“It’s the only tool where I can see real-time progress,” says one NetusAI bypasser user. Whether you’re a student, marketer or founder, this loop gives you control.

Future Trends for AI Content

As detection tech evolves, the question is no longer just what sounds like AI; it’s what can prove it.

Watermarking Is No Longer Just a Concept

Although OpenAI chose in 2024 not to release the text-watermarking tool it had developed, AI-generated material can still be marked with small, concealed statistical patterns. Future detectors may not need to guess; they may simply know.
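One published scheme, the “green list” watermark described by Kirchenbauer et al. (2023), shows how such a pattern can be checked. At each step the generator nudges token choices toward a pseudo-randomly chosen “green” fraction gamma of the vocabulary; a detector that knows the seeding counts the green tokens and computes a z-score. The sketch assumes the green-token count is already known and is not how any particular vendor’s watermark works.

```python
import math


def watermark_z_score(green_tokens: int, total_tokens: int,
                      gamma: float = 0.25) -> float:
    """z-score for a green-list watermark test.

    Unwatermarked text should hit the green list about gamma of the time,
    so a large positive z suggests the text was watermarked.
    """
    if total_tokens <= 0 or not 0 < gamma < 1:
        raise ValueError("need total_tokens > 0 and 0 < gamma < 1")
    expected = gamma * total_tokens
    std_dev = math.sqrt(total_tokens * gamma * (1 - gamma))
    return (green_tokens - expected) / std_dev


print(round(watermark_z_score(52, 200), 2))   # close to chance
print(round(watermark_z_score(120, 200), 2))  # far above chance
```

With gamma = 0.25, a 200-token passage is expected to contain about 50 green tokens by chance, so 52 gives a z-score near 0.3 while 120 gives one above 11. The paper’s experiments use a detection threshold of z = 4.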

Content Provenance Is Becoming a Standard

The Content Authenticity Initiative and the C2PA (Coalition for Content Provenance and Authenticity) have pushed hard for metadata layers that trace the origin of digital content. Think of it as a signed, tamper-evident record attached to a file, showing whether it was AI-generated, edited or created by a human from scratch.
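To make “tamper-evident” concrete, here is a simplified sketch of the underlying idea: provenance claims are bound to a hash of the content and signed, so any later change to the content or the claims breaks verification. This is not the C2PA manifest format. Real Content Credentials use certificate-based digital signatures; this sketch substitutes a shared-key HMAC and a hypothetical demo key purely to stay self-contained.

```python
import hashlib
import hmac
import json

# Hypothetical demo key; C2PA uses certificate-based signatures, not a shared key.
SECRET_KEY = b"demo-key"


def _sign(body: dict) -> str:
    payload = json.dumps(body, sort_keys=True).encode()
    return hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()


def make_manifest(content: bytes, claims: dict) -> dict:
    """Bind provenance claims to a hash of the content and sign the result."""
    body = {"content_sha256": hashlib.sha256(content).hexdigest(), "claims": claims}
    return {"body": body, "signature": _sign(body)}


def verify(content: bytes, manifest: dict) -> bool:
    """True only if the manifest is unmodified and still matches this content."""
    if not hmac.compare_digest(_sign(manifest["body"]), manifest["signature"]):
        return False
    return manifest["body"]["content_sha256"] == hashlib.sha256(content).hexdigest()


post = b"Draft written with an AI assistant, then edited by hand."
manifest = make_manifest(post, {"generator": "ai-assisted", "actions": ["created", "edited"]})
print(verify(post, manifest))
print(verify(post + b" One more line.", manifest))
```

The first check prints True; appending a line changes the content hash, so the second prints False.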

Governments Are Stepping In

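The EU AI Act entered into force in August 2024, and its first obligations began to apply in February 2025. It treats some AI uses, including certain ones in education and in influencing elections, as high-risk, and it requires disclosure of deepfakes and of AI-generated text published to inform the public on matters of public interest. In the U.S., some universities and workplaces have started enforcing stricter detection policies, requiring signed attestations or “authorship proof.”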

Why Flexible Humanization Matters

As watermarking and policy enforcement increase, bypassing AI detection is about more than hiding; it’s about transforming. NetusAI restructures tone, rhythm and flow, helping your work hold up as algorithms and regulations change.

Final Thoughts

The line between “AI-written” and “humanized” isn’t just technical; it’s emotional, contextual and strategic. To build investor trust, avoid academic penalties and achieve strong SEO, founders, students and content creators alike must prioritize authentic, human-sounding communication.

But doing it manually? That’s inefficient, inconsistent and exhausting.

Now, before you publish your next blog post, essay or brand copy, run it through the NetusAI bypasser’s detection–rewrite–retest loop.

It’s not about hiding from AI detectors.

It’s about sounding like you and getting credit for it.

FAQs

1. What does “humanized AI content” actually mean?

Humanized AI content is AI-generated or rewritten text that emulates natural human writing, incorporating varied tone, emotional flow, sentence structure and unpredictability. Its goal is to bypass AI detection and resonate with human readers.

2. Can AI detectors really flag human-written content?

Yes. AI detectors flag writing that is structured, clean or predictable, so polished human drafts can trigger false positives. NetusAI detects these risky patterns before rewriting, even in hybrid or polished drafts.

3. Does paraphrasing content make it undetectable?

Not reliably. AI detectors can still identify the output of simple paraphrasers. NetusAI humanizes content more effectively by altering tone, pacing and sentence structure.

4. Why is humanizing AI content important for SEO?

Google’s ranking systems aim to reward content that demonstrates experience, expertise, authoritativeness and trustworthiness (E-E-A-T). Overly optimized or AI-detected text can hurt rankings and reader trust. NetusAI helps humanize SEO content to reduce the risk of algorithmic penalties.

5. How does NetusAI compare to tools like QuillBot or HumanizeAI?

NetusAI differs from QuillBot and HumanizeAI by providing a full workflow: detecting risky content, rewriting for variation and verifying humanization.

6. Can NetusAI help students avoid false flags in Turnitin or ZeroGPT?

NetusAI helps students avoid false positives in academic scans by rewriting original work to reflect human writing styles.

7. Will watermarking or provenance tech make rewriting tools useless?

While watermarking and content-provenance technologies are evolving, many current systems are designed around unedited, raw AI output. NetusAI remains valuable for credibility and compliance because it transforms tone, pacing and structure, especially in multilingual or rewritten drafts.