You’ve probably used AI tools to speed up content creation. Who hasn’t? But here’s the catch: just because your blog ranks today doesn’t mean it’ll stay there tomorrow.

Search engines are getting smarter at picking up “machine-written” patterns. Google isn’t against AI, but it emphasizes that content must be helpful, original, and human-centric. That’s where AI content detectors quietly shape your SEO outcomes.

What Are AI Detectors? (And What Are They Looking For)

AI detectors (e.g., ZeroGPT, UndetectableAI, AIUndetect) scan text for signs that it was written by a large language model such as ChatGPT, Claude, or Gemini.

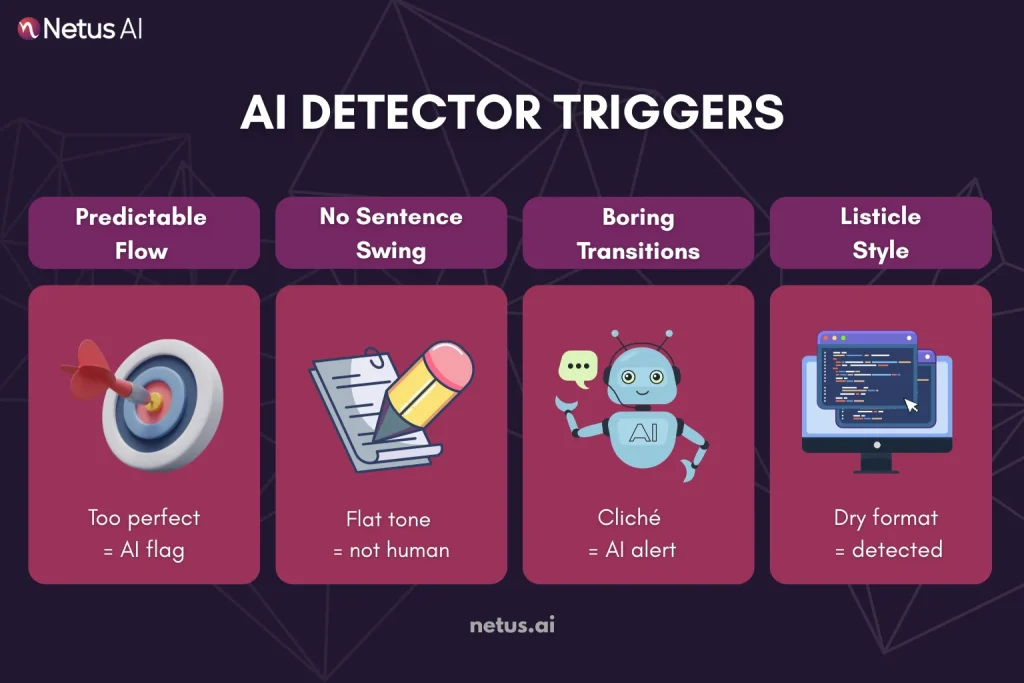

Here’s what they’re looking for:

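- Predictability: AI-generated text tends to be more uniform and statistically “likely” in word choice and sentence flow. Detectors flag this predictability.

- Burstiness and Perplexity: Perplexity measures how surprising a text’s word choices are to a language model; burstiness measures how much that surprise, along with sentence length and structure, varies across the text. Human writing tends to vary a lot, while AI writing is flatter.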

- Overuse of generic phrases: AI frequently uses generic transitions such as “in conclusion” or “on the other hand.”

- Formatting patterns: Headings, listicles and bullet points without unique tone or style are easy giveaways.
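To make “burstiness” less abstract, here is a minimal sketch of one crude proxy for it: the coefficient of variation of sentence length (standard deviation divided by mean). This is an illustrative assumption, not how ZeroGPT or any other named detector actually scores text; real tools rely on language-model statistics such as perplexity, which this snippet does not compute. The helper names `sentence_lengths` and `burstiness` are hypothetical.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Split on ., !, or ? followed by whitespace and count words per sentence."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Hypothetical proxy: coefficient of variation of sentence length.

    0.0 means every sentence has the same length (very "flat" writing).
    Real detectors use language-model statistics, not this formula.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


flat = "The tool is fast. The tool is cheap. The tool is safe."
varied = ("It's fast. Honestly, I was surprised how cheap it turned out "
          "to be for a team our size. Safe too.")

print(round(burstiness(flat), 2))    # 0.0  (every sentence has four words)
print(round(burstiness(varied), 2))  # 0.99 (sentence lengths 2, 16, 2)
```

The flat sample scores zero because all three sentences are identical in length, while the varied sample mixes short and long sentences the way human writers often do.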

Does AI Detection Actually Impact SEO Rankings?

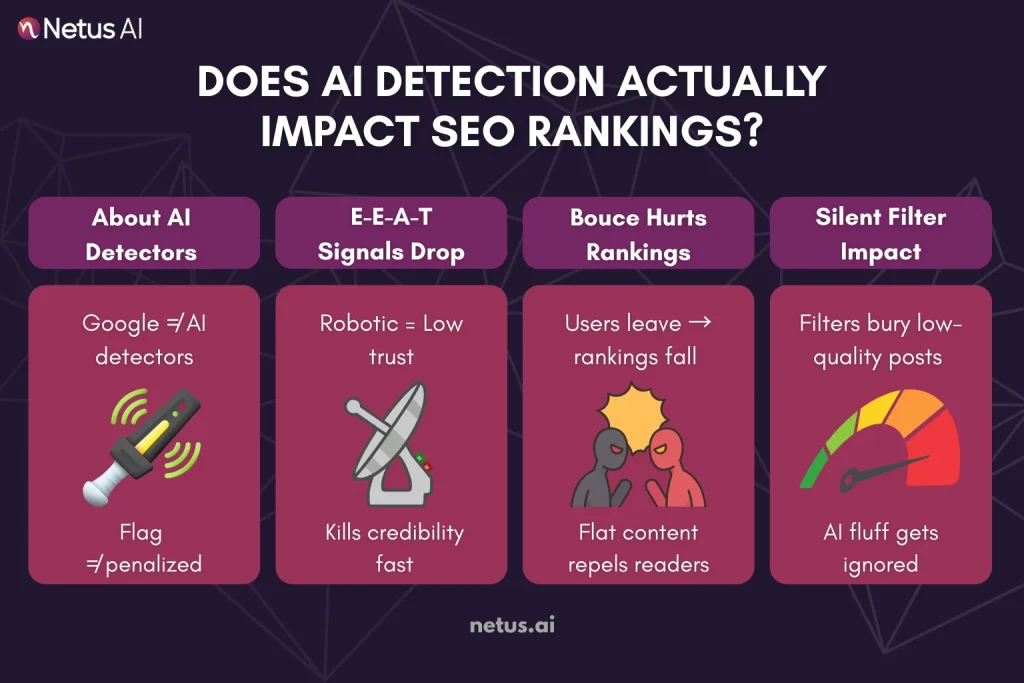

Google doesn’t directly penalize content flagged by AI detectors like ZeroGPT, HumanizeAI, or BypassAI. These tools are third-party solutions, not built or used by Google to determine your rankings.

Here’s how poorly rewritten or over-AI’d content ends up silently tanking your rankings:

1. Weak E-E-A-T Signals

Google treats E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) as a core marker of high-quality content. If your content sounds robotic, has no personal insight, and offers nothing original, it naturally lacks these elements.

2. High Bounce Rates and Low Engagement

Ever leave an article quickly because it was flat, repetitive, or clearly AI-written?

That’s bounce rate in action, and it can signal to Google that users aren’t satisfied.

3. Algorithmic Devaluation via Quality Filters

Google’s Helpful Content Update targets content created primarily to rank in search rather than to help users.

If your content feels thin, lacks real-world depth or reads like a machine wrote it, it could get swept up in these algorithmic filters.

4. Reduced Distribution on Publishing Platforms

It’s not just Google. Content flagged as AI-heavy often struggles on distribution platforms like:

- Medium

- LinkedIn Articles

- Publisher syndication networks

- Third-party blog networks

These platforms are increasingly cautious about AI-generated material, especially when it sounds generic or lacks personality.

How Does AI Detection Affect Different Types of SEO Content?

AI detection doesn’t just impact blogs; it can quietly sabotage your SEO across multiple content types. Let’s break it down:

Blog Posts

If your blog post is flagged as AI-written, it may:

- Lower engagement (readers bounce faster when tone feels “off”)

- Trigger Google’s quality filters (especially under the Helpful Content System)

- Perform poorly in featured snippets or People Also Ask boxes

Product Descriptions

Ecommerce sites using AI to scale product copy are especially at risk. AI-detected descriptions often:

- Sound generic

- Miss emotional or persuasive phrasing

- Fail to rank due to thin or repetitive content

Educational or YMYL Content

Google scrutinizes Your Money or Your Life (YMYL) content heavily. If detection tools flag your content as AI-written:

- It may lose trust signals

- Rankings in sensitive niches like finance, health or legal could drop dramatically

Long-form Guides and SEO Pillars

These need depth, originality and a clear voice. When they sound too automated:

- Bounce rate increases

- Internal links lose strength

- Topical authority suffers, weakening your entire domain’s SEO

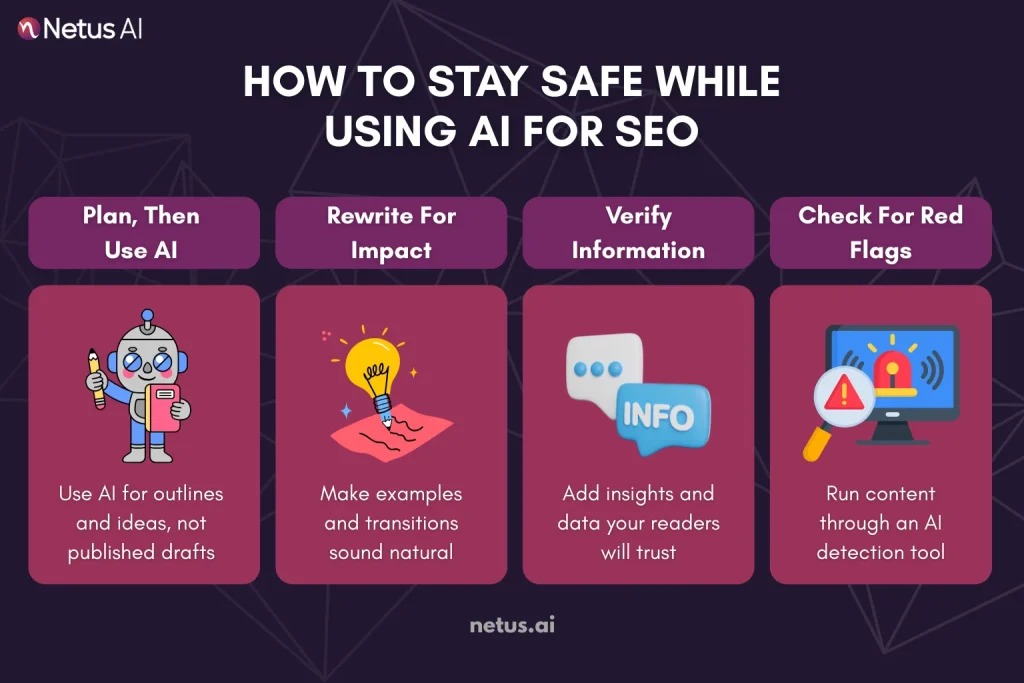

How to Stay Safe While Using AI for SEO

AI can absolutely boost your content workflow, if used strategically. But without thoughtful execution, AI-generated content can raise flags that hurt your rankings. Here’s how to stay on the safe side:

Use AI for Structure, Not Final Copy

Think of AI as a brainstorming partner, great for outlines, research or even drafting sections. But raw AI text should never go live. It’s your job to add flow, personality and intent.

Rewrite for Humans, Not Just Algorithms

AI often defaults to neutral tone and repetitive patterns. Fix that. Break monotony with real examples, punchy transitions and sentence variety.

Verify, Validate and Add Value

Even the smartest model doesn’t know your audience. Add personal stories, brand-specific data or product insights to move beyond surface-level fluff. Google values experience-based content and readers do too.

Use Smart AI Detection and Rewrite Tools

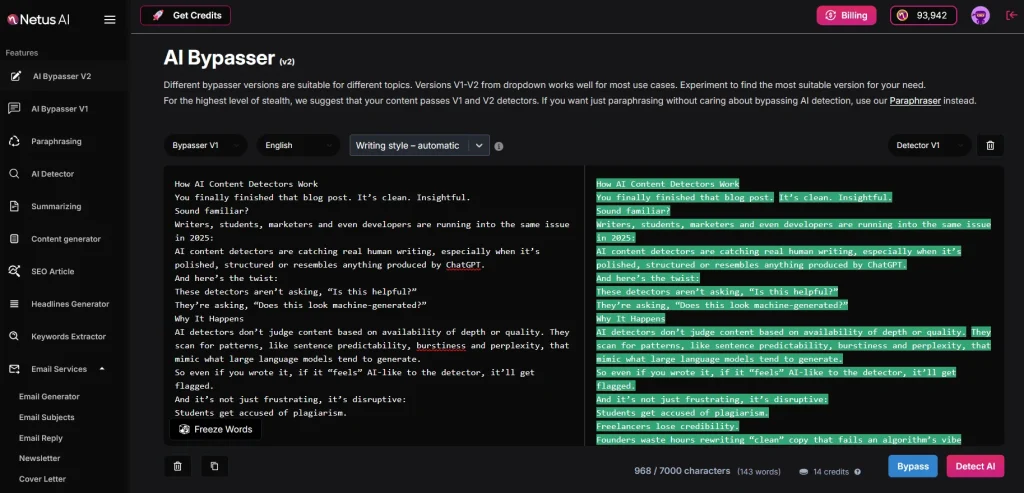

Before hitting publish, run your draft through a quality scanner. NetusAI, for example, scores your draft, highlights risky sections with real-time suggestions, and lets you rewrite them instantly. No copy-paste mess, no guesswork.

The Role of Humanizing Tools in Avoiding SEO Penalties

AI isn’t the problem; detection is. Getting flagged by AI detectors can quietly sabotage your visibility. That’s where humanizing tools step in.

Here’s how they actively protect your rankings:

Real-Time AI Detection & Feedback

The worst kind of editing? Blind editing.

Tools like NetusAI eliminate the guesswork by offering instant, color-coded feedback.

This real-time verdict allows you to pinpoint risky sections on the fly. Instead of rewriting your whole article blindly, you can focus on problem areas, saving time, credits and SEO value.

Structure & Tone Adjustment

Rewriting isn’t just about swapping words. It’s about restoring human rhythm, sentence variation, natural transitions and non-AI-sounding phrasing.

NetusAI’s humanizing models rework tone, syntax, and cadence to mimic real human speech and writing.

Rewrite Without Losing Meaning

Too many AI bypass tools ruin your message in the name of “detection safety.” NetusAI’s AI Bypasser is built differently: it reworks the phrasing detectors flag while keeping your original meaning intact.

It’s the best of both worlds: protection without compromise.

Trial, Tweak, Retest: The Winning Loop

The most effective strategy isn’t to rewrite once and publish; it’s to rewrite → scan → tweak → rescan.

That’s the workflow NetusAI enables:

- You rewrite one paragraph at a time

- Test it immediately

- Tweak only where needed

- Confirm “Human” status

- Move on
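The paragraph-by-paragraph loop above can be sketched in a few lines. This is a minimal illustration under stated assumptions: `detect` and `rewrite` are hypothetical callables you would wire to whatever scanner and editor you use (they are not a real NetusAI API), the detector is assumed to return an AI-likelihood score between 0 and 1, and the `threshold` and `max_rounds` values are arbitrary placeholders.

```python
from typing import Callable

# Hypothetical signatures, not a real NetusAI API:
# a detector returns an AI-likelihood score in [0, 1],
# a rewriter returns a revised version of one paragraph.
Detector = Callable[[str], float]
Rewriter = Callable[[str], str]


def polish(paragraphs: list[str], detect: Detector, rewrite: Rewriter,
           threshold: float = 0.5, max_rounds: int = 3) -> list[str]:
    """Rewrite -> scan -> tweak -> rescan, one paragraph at a time."""
    result = []
    for para in paragraphs:
        rounds = 0
        # Only paragraphs scoring at or above the threshold get rewritten;
        # paragraphs already below it pass through untouched.
        while detect(para) >= threshold and rounds < max_rounds:
            para = rewrite(para)
            rounds += 1
        # Move on; after max_rounds a still-flagged paragraph is kept as-is
        # so a human can review it by hand.
        result.append(para)
    return result


# Toy stand-ins for illustration only.
def toy_detect(text: str) -> float:
    return 1.0 if "in conclusion" in text.lower() else 0.0


def toy_rewrite(text: str) -> str:
    return text.replace("In conclusion, ", "So, ")


draft = ["In conclusion, AI helps.", "We tested it on our own posts."]
print(polish(draft, toy_detect, toy_rewrite))
# ['So, AI helps.', 'We tested it on our own posts.']
```

Capping the rounds keeps the loop from spinning forever on a paragraph the rewriter can’t fix, which is exactly the point where manual editing should take over.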

This loop keeps every section compliant and controlled while preserving its SEO integrity.

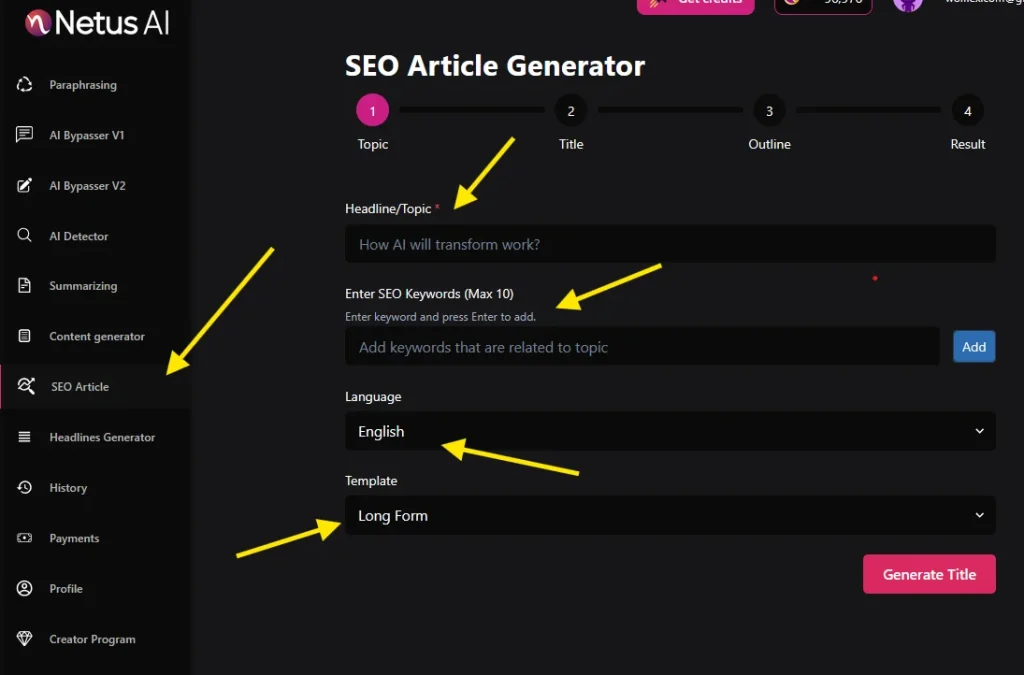

The NetusAI SEO Article Generator helps create optimized, full-length blog posts. Unlike generic tools, it goes beyond simple drafting. It:

- Lets you input a headline and targeted SEO keywords

- Supports long-form templates for full blogs

- Auto-generates a structure with Title → Outline → Content

- Works in multiple languages for global teams

Because it’s integrated with the NetusAI Bypasser + Detector system, the output is designed to read naturally and avoid detection.

You can generate, review and rewrite all in one interface without needing third-party tools to patch the gaps. It’s built for marketers, freelancers and bloggers who want their AI content to actually pass as human-written.

Final Thoughts

AI detectors flag the same quality issues (predictable, thin, cookie-cutter content) that Google’s ranking algorithms already identify. If a scan flashes red, treat it as a gift: you have time to humanize before the SERP does the damage for you.

Use AI for drafts and outlines, then personalize with unique insights, varied style, factual depth, and conciseness.

Streamline your polish loop: detect, rewrite, and retest content using tools like NetusAI. This protects rankings and creates engaging content, aligning quality and human signals for better SEO.

FAQs

1. Will Google penalize me if an AI detector flags my article?

Not directly. Third-party detector scores aren’t a ranking factor, but Google’s Helpful Content and spam algorithms target “low-value” content with the same traits detectors flag: predictable language, lack of depth, and recycled transitions.

2. Why does clean, grammatically perfect writing sometimes fail AI checks?

Because detectors look for patterns, not typos. Ultra-polished text often has uniform sentence length, a neutral tone, and safe transitional phrases, all hallmarks of model-generated prose.

3. Does using Grammarly or other polishers increase my risk?

Only if they iron out every bit of personality. Heavy reliance on automated editors can flatten cadence and remove the small imperfections that signal authenticity.

4. How can I safely leverage ChatGPT or Gemini without hurting SEO?

Use AI for drafts: outlines, idea lists, and quick summaries. Then, humanize the content by adding proprietary data, brand voice, and fact-checking. Finally, use a detector-rewriter (e.g., NetusAI) to refine machine-like sections.

5. Which content formats are most vulnerable to AI flags?

Generic product descriptions, templated long-form guides, and YMYL articles (finance, health, legal) are the formats most often flagged. To protect them, add unique perspectives, testimonials, case studies, and current data.

6. Is paraphrasing enough to bypass detectors?

Rarely. Simple synonym swaps leave the underlying sentence structure untouched, and structure is what detection algorithms score.
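A small sketch shows why. It swaps words one-for-one using a hypothetical synonym table, then compares the words-per-sentence profile before and after. Sentence length is only a crude stand-in for the structural signals real detectors score (an assumption for illustration), but it makes the point: the rhythm of the text doesn’t change at all.

```python
import re

# Hypothetical synonym table; real paraphrasers are more elaborate.
SYNONYMS = {"use": "utilize", "help": "assist", "big": "large", "show": "demonstrate"}


def synonym_swap(text: str) -> str:
    """Replace whole words one-for-one, leaving sentence structure intact."""
    return re.sub(r"\b\w+\b", lambda m: SYNONYMS.get(m.group(0), m.group(0)), text)


def length_profile(text: str) -> list[int]:
    """Words per sentence: a crude stand-in for the structure detectors score."""
    return [len(s.split()) for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


original = ("Teams use AI to help with big drafts. "
            "The drafts show clear gains. Editors use them daily.")
swapped = synonym_swap(original)

print(swapped)
# Teams utilize AI to assist with large drafts. The drafts demonstrate clear gains. Editors utilize them daily.
print(length_profile(original), length_profile(swapped))
# [8, 5, 4] [8, 5, 4]
```

The vocabulary changed, but every sentence kept its exact length and shape, which is why real rewriting means restructuring sentences, not just swapping words.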