Can AI Detectors Really Tell If You Used ChatGPT?

Picture this: your company’s content team spends a week polishing a white-paper draft. No ChatGPT involvement, just caffeine, style guides and a lot of Ctrl-S. Minutes before publication, someone runs it through an AI-detection checker “just to be safe.” The verdict? “Highly likely AI-generated.” Cue the panic, edits and awkward Slack threads.

Scenarios like this aren’t edge cases anymore. Detectors powered by buzz-metrics like perplexity and burstiness claim they can sniff out a ChatGPT-style cadence in milliseconds. Yet polished human prose, especially copy refined by Grammarly, SEO tools or a meticulous editor, often trips the same wires.

So, what’s really happening under the hood?

- Do detectors hold a secret list of GPT sentence fingerprints?

- Or are they gambling on statistical hunches that occasionally burn real writers?

In the next sections, we’ll unpack how these tools score text, where their confidence falters and what you can do to keep genuine writing from getting mislabeled, without sabotaging clarity or style.

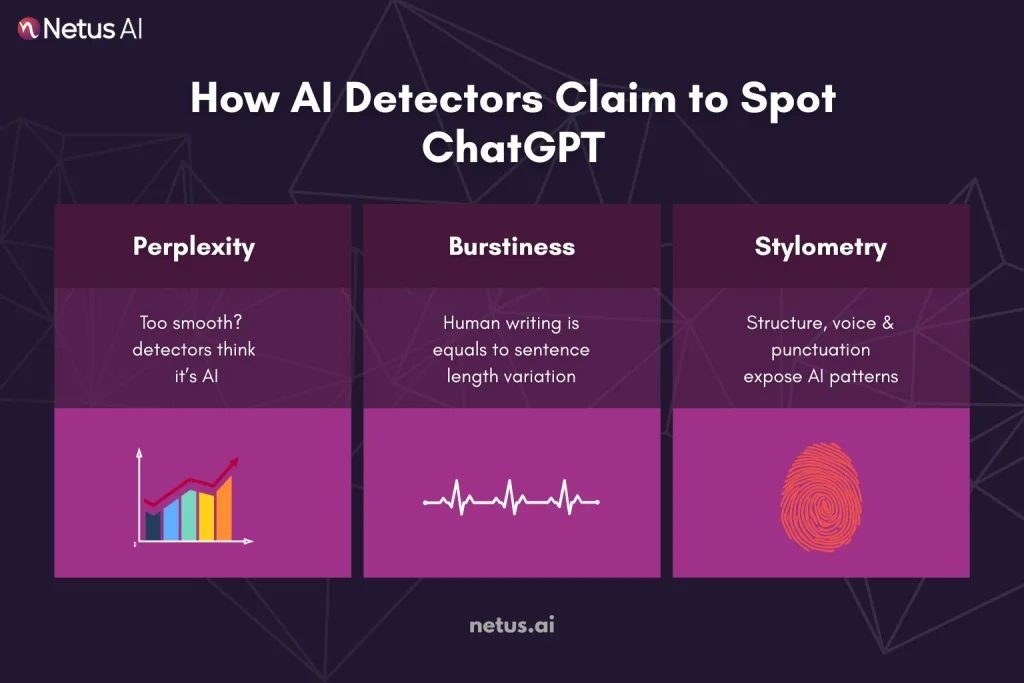

How AI Detectors Claim to Spot ChatGPT

Most AI-detection tools sell a simple promise: paste your text, get an instant verdict on whether it’s machine-generated. Under the hood, the algorithms are crunching three main signals, none of which involve peeking at your ChatGPT login or Google Doc history.

Perplexity: Measuring Predictability

At its core, perplexity is a measurement of how surprising each word in your sentence is, based on what came before it. If a language model expects the next word with high confidence, perplexity is low. If it’s genuinely surprised by the next word, perplexity is high.

Why it matters:

- AI-generated text tends to be predictable. LLMs are trained to write smoothly, so their output usually scores low on perplexity.

- Human writers often introduce randomness, idioms or imperfect phrasing, raising perplexity scores.

That means your clean, well-outlined blog post?

It might be too perfect, which can backfire.
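To make the perplexity idea concrete, here is a minimal sketch that uses the standard definition: the exponential of the average negative log-probability per token. The probability lists are made-up values standing in for what a scoring model might assign; no real detector's model or thresholds are implied.

```python
import math

def perplexity(token_probs):
    """Perplexity = exp(average negative log-probability per token)."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)

# Hypothetical per-token probabilities (assumed values, for illustration only).
predictable = [0.9, 0.8, 0.85, 0.9]  # the model saw each word coming
surprising = [0.2, 0.05, 0.1, 0.3]   # the model was often surprised

# Smooth, expected wording yields the lower score.
print(perplexity(predictable) < perplexity(surprising))
```

A text where every word was a coin flip between two options (probability 0.5 each) comes out at a perplexity of about 2, which is a handy way to read the number: roughly how many choices the model felt it was picking between.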

Burstiness: Sentence Variety

Burstiness measures variation in sentence length and structure.

- Humans write with natural rhythm. We ramble. We pause. We mix short and long sentences.

- AI, by default, tends to write with a steady, uniform rhythm unless prompted otherwise.

Detectors like ZeroGPT and HumanizeAI use burstiness scores to judge how much your sentence flow mimics human behavior.

Too symmetrical = suspicious.

Varied = probably human.
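Detectors don't publish their exact burstiness formula, so treat the following as one plausible stand-in rather than the real thing: the coefficient of variation of sentence length (standard deviation divided by mean). The sentence splitter is deliberately naive.

```python
import re
import statistics

def sentence_lengths(text):
    """Split on ., ! or ? and return the word count of each sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]

def burstiness(text):
    """Coefficient of variation of sentence length (an assumed proxy metric)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)

uniform = "The cat sat down. The dog ran off. The bird flew away."
varied = "Stop. The dog ran across the wide muddy field before anyone could react. Why?"

print(burstiness(uniform))                       # every sentence is four words long
print(burstiness(varied) > burstiness(uniform))  # mixed lengths score higher
```

The uniform sample scores zero because all three sentences have the same length, which is exactly the symmetry described above.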

Stylometric Patterns: Your Writing’s DNA

Beyond raw scores, detectors also tap into stylometry, the analysis of your writing “style” based on:

- Word frequency

- Punctuation habits

- Average sentence length

- Passive voice usage

- Syntax trees

These create a statistical fingerprint, but its reliability is contested. Weber-Wulff et al. (2023) tested a range of detection tools and concluded that they were neither accurate nor reliable, and that performance dropped further once text had been paraphrased or otherwise obfuscated. Light edits such as synonym swaps may still leave much of the underlying pattern intact.
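A few of the surface features in the list above are easy to compute. The sketch below is illustrative only: the feature names and the regex-based tokenizing are assumptions, and real stylometric models use far richer inputs (syntax trees, for instance, need a parser).

```python
import re
from collections import Counter

def stylometric_features(text):
    """Return a small, assumed feature set: sentence length, punctuation, word frequency."""
    words = re.findall(r"[A-Za-z']+", text.lower())
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    punctuation = re.findall(r"[,;:!?.]", text)
    return {
        "avg_sentence_length": len(words) / max(len(sentences), 1),
        "punctuation_per_word": len(punctuation) / max(len(words), 1),
        "top_words": Counter(words).most_common(3),
    }

sample = "The cat sat. The cat ran, fast."
features = stylometric_features(sample)
print(features["avg_sentence_length"])  # 7 words across 2 sentences
print(features["top_words"][0])
```

A detector would compare a vector like this against vectors from known human and known LLM text; the comparison step is left out here because it depends entirely on the training data.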

Where ChatGPT Leaves Digital Footprints

Even when you ask ChatGPT to “write like a human,” it tends to leave behind subtle tell-tale clues, digital crumbs that detectors scoop up. Here are the most common giveaways:

Safety-First Vocabulary

LLMs are trained on huge, public datasets and aim for broad readability. That means they default to mid-level, non-controversial word choices, rarely slang, rarely jargon. Over an article, that steady “neutral register” becomes a detectable pattern.

Balanced Cadence in Lists

Ask ChatGPT for “10 tips,” and you’ll often get perfectly parallel sentences:

Tip 1: Do X.

Tip 2: Do Y.

Tip 3: Do Z.

Humans usually slip, adding an anecdote in one bullet, shortening another. Those imperfections boost burstiness; ChatGPT’s symmetry flattens it.

Filler Transitions

Phrases like “In today’s fast-paced world,” “It is important to note that,” and “One possible reason is” appear disproportionately in LLM output because they’re safe openers. Sprinkle a few too many and stylometry engines raise an eyebrow.
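As a rough illustration of how "a few too many" could be measured, the sketch below counts the three openers quoted above per 100 words. The phrase list and the per-100-words scale are assumptions for the example, not a known detector rule.

```python
# Stock openers taken from the paragraph above; a real list would be much longer.
FILLER_PHRASES = [
    "in today's fast-paced world",
    "it is important to note that",
    "one possible reason is",
]

def filler_rate(text, phrases=FILLER_PHRASES):
    """Stock-phrase hits per 100 words (a hypothetical metric)."""
    normalized = text.lower().replace("\u2019", "'")  # treat curly apostrophes as straight
    word_count = len(normalized.split())
    if word_count == 0:
        return 0.0
    hits = sum(normalized.count(phrase) for phrase in phrases)
    return 100 * hits / word_count

draft = "It is important to note that cats sleep. One possible reason is boredom."
print(round(filler_rate(draft), 1))  # 2 hits in 13 words
```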

Temperature-Balanced Sentences

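Most users never touch the sampling temperature (the randomness setting, which is exposed through the API rather than the ChatGPT chat window), so output is generated at a default that yields a polished but predictable rhythm. Unless you deliberately change the temperature or regenerate multiple times, that steady predictability remains intact across paragraphs.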
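For readers who want to see what temperature actually does, here is a small sketch of temperature-scaled softmax, the standard way a model's raw scores are turned into word probabilities. The three scores are invented for the example.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate next words
low = softmax_with_temperature(logits, 0.2)
high = softmax_with_temperature(logits, 1.5)

print(low[0] > high[0])  # the top word dominates more at low temperature
```

At low temperature the top candidate takes almost all of the probability, so the same "safe" word wins nearly every time. At higher temperature the alternatives get a real chance, which is where the extra variety comes from.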

JSON-Like Structure in Explanations

When ChatGPT explains code, data or step-by-step processes, it often formats answers like mini JSON blocks or bullet hierarchies, clean, indented, consistent. Handy for readability; also easy for detectors to spot.

Key takeaway: These footprints aren’t glaring to the human eye, but they are to algorithms trained to look for them. The sections below look at why detectors still misfire when chasing these clues, even on text a human wrote.

Why It Happens

AI detectors don’t judge content on its depth or quality. They scan for patterns, such as low perplexity and low burstiness, that resemble what large language models tend to generate.

So even if you wrote it, if it “feels” AI-like to the detector, it’ll get flagged.

And it’s not just frustrating, it’s disruptive:

- Students get accused of plagiarism.

- Freelancers lose credibility.

- Founders waste hours rewriting “clean” copy that fails an algorithm’s vibe check.

It’s Not About Writing Better, It’s About Beating AI Detection

In this blog, we’ll break down how AI detectors actually work, what makes your content look “AI,” and how smart AI bypasser tools, like the detection + rewrite loop inside NetusAI, can help you create content that doesn’t just sound human but gets treated as human too.

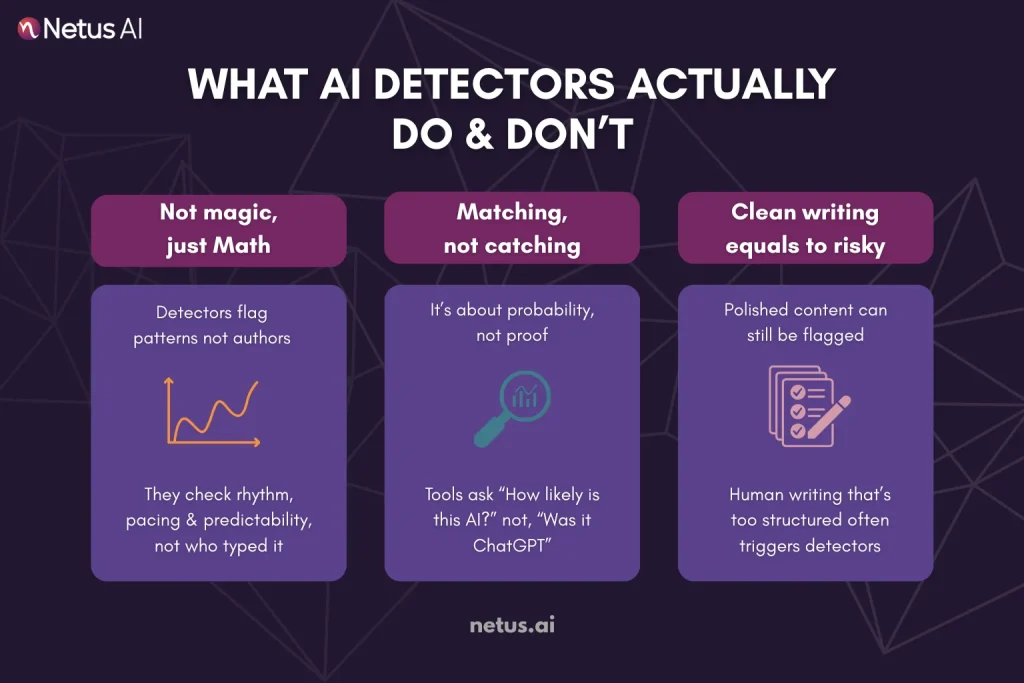

What Are AI Content Detectors?

By now, you’ve probably seen the warning labels: “This content may have been AI-generated.”

But how do detectors actually know?

Contrary to what many believe, AI detectors don’t run some magical truth scan.

They don’t catch ChatGPT red-handed. They simply analyze patterns and flag anything that matches what language models tend to produce.

The Core Mechanism

Most AI detectors, from ZeroGPT to HumanizeAI and Turnitin’s AI Writing Indicator, operate on the same basic logic:

AI-generated content has certain statistical fingerprints.

These tools look for traits like:

- Low burstiness (little sentence variation)

- Low perplexity (high predictability)

- Unusual syntactic uniformity

- “Machine” pacing and rhythm

Each sentence (or paragraph) is scored. If the scores fall within the typical range of LLMs like GPT‑4, the text is flagged, even if it was human-written.
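The scoring-and-flagging step can be pictured as a simple range check. In this sketch the cut-off values are invented (detectors don't publish theirs), and the two-signal rule is an assumption standing in for whatever model a given tool really uses.

```python
from dataclasses import dataclass

@dataclass
class Scores:
    perplexity: float
    burstiness: float

# Hypothetical cut-offs for the "typical LLM range"; not taken from any real tool.
LLM_MAX_PERPLEXITY = 20.0
LLM_MAX_BURSTINESS = 0.3

def looks_llm_like(scores):
    """True when both scores fall inside the assumed LLM range."""
    return (scores.perplexity <= LLM_MAX_PERPLEXITY
            and scores.burstiness <= LLM_MAX_BURSTINESS)

def flagged_share(paragraphs):
    """Fraction of paragraphs whose scores look LLM-like."""
    if not paragraphs:
        return 0.0
    return sum(looks_llm_like(p) for p in paragraphs) / len(paragraphs)

draft = [Scores(perplexity=12.0, burstiness=0.1),   # smooth, even paragraph
         Scores(perplexity=55.0, burstiness=0.8)]   # quirky, uneven paragraph
print(flagged_share(draft))
```

Notice that nothing in the input says who wrote the text. A tidy human paragraph with the first set of scores gets the same treatment as a machine-written one.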

It’s Not About “Catching AI”, It’s About Matching Probability

Here’s what AI detection isn’t:

- It’s not forensic text analysis

- It doesn’t detect if ChatGPT or Claude wrote your piece

- It doesn’t judge your intent

Instead, it answers:

“How likely is it that this was generated by a bot?”

If your writing feels too clean, too consistent, too symmetrical, it starts to resemble AI output. And that’s where even human-written essays and blogs can fail.

Detectors Don’t Think, They Measure

To be clear: no detector is “intelligent.” They don’t understand context, story or nuance. They don’t see authorship, just pattern probability. This matters because human writing can easily fall within AI-like patterns, especially when it:

- Is well structured

- Follows a logical outline

- Uses consistent tone and pacing

Ironically, the more professional your writing looks, the more it risks getting flagged.

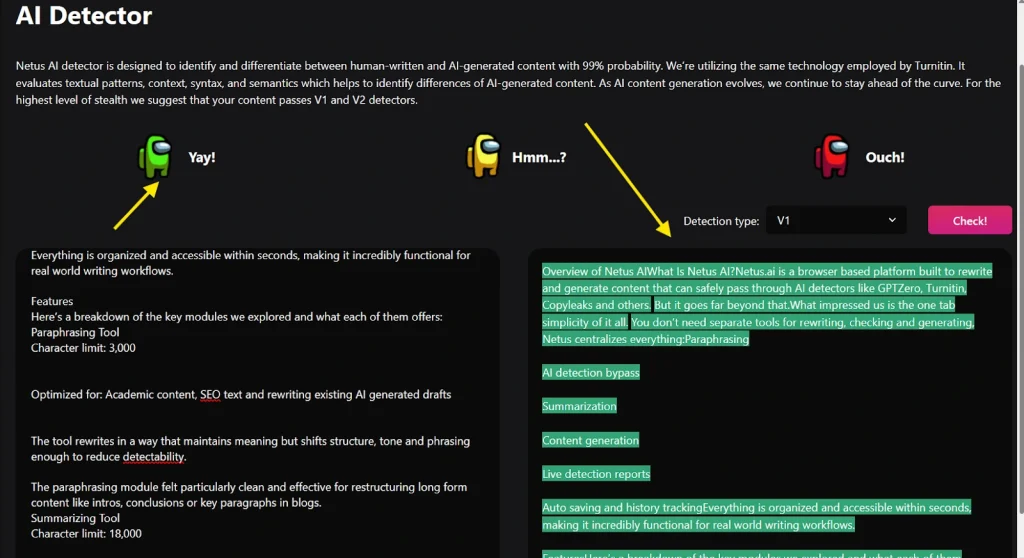

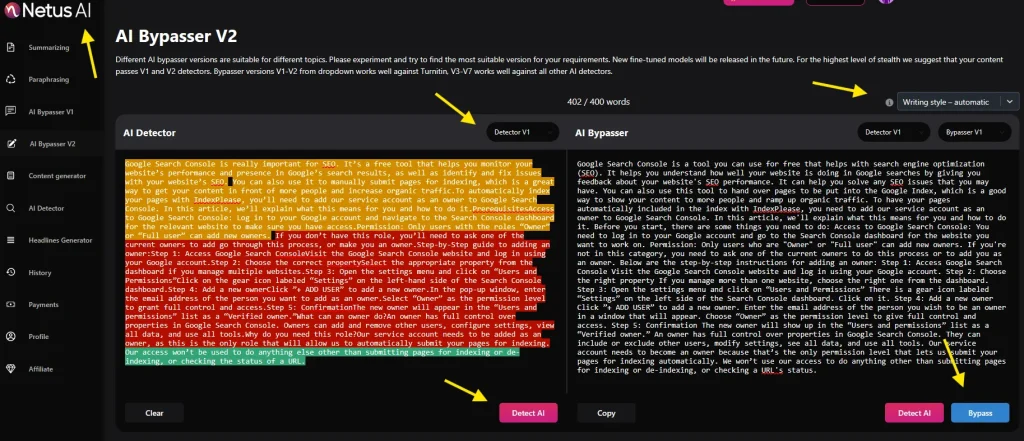

Where NetusAI Comes In

NetusAI was built specifically around these needs.

- You can paste any text, even content not generated with NetusAI.

- The detector runs a real-time analysis and flags the output as:

🟢 Human, 🟡 Unclear or 🔴 Detected.

It’s ideal for freelancers reviewing client drafts, SEO teams checking old blogs and students checking their essays before submission.

Why Rephrasing Isn’t Enough

Here’s the trap most “AI bypass” tools fall into: They just swap words. Or restructure a sentence. But if the underlying sentence predictability and flow rhythm remain the same?

Detectors will still flag it.

To bypass effectively, you need:

- Sentence-level structural variation

- Unpredictable word choices

- Real-time feedback to see if it worked

Why Good Writing Still Gets Flagged

It’s one of the most frustrating parts of AI detection in 2025: You write a clear, thoughtful, well-researched article and it still gets flagged as “AI-generated.”

This happens not because your work lacks human touch but because AI detectors aren’t measuring creativity. They’re measuring patterns.

The False Positive Problem

AI detectors aren’t perfect. And while their false-positive rates have improved, they still flag plenty of human-written content, especially when it’s:

- Highly structured

- Too consistent

- Clean and grammar-optimized

- Written by non-native English speakers

According to HumanizeAI, even their latest model can misclassify up to 1 in 100 human-written texts. That might sound low, until you consider the volume of essays, blogs and emails scanned daily.
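To see why 1 in 100 adds up, here is a quick back-of-the-envelope sketch. The volumes are made-up, and the second function assumes each scan is an independent event, which is a simplification.

```python
def expected_false_flags(human_texts, one_in=100):
    """Expected number of human-written texts wrongly flagged at a 1-in-N rate."""
    return human_texts / one_in

def chance_of_any_false_flag(texts_per_writer, one_in=100):
    """Probability that at least one of a writer's texts is flagged (independence assumed)."""
    return 1 - (1 - 1 / one_in) ** texts_per_writer

# Hypothetical volume: 50,000 human-written essays scanned.
print(expected_false_flags(50_000))

# Hypothetical student submitting 30 essays over a course.
print(round(chance_of_any_false_flag(30), 2))
```

Under these assumptions, a single writer who submits a few dozen pieces faces roughly a one-in-four chance of at least one wrongful flag, even though each individual scan looks very safe.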

Turnitin has acknowledged this as well, stating:

“AI writing indicators should not be used alone to make accusations of misconduct.”

Non-Native Writers Get Hit Hardest

One of the most common sources of false flags?

Non-native English writers.

Because many LLMs are trained on standardized, “neutral” English, their sentence construction closely mirrors what non-native speakers often emulate for clarity.

If you write with:

- Perfect grammar

- Predictable pacing

- Limited idioms or slang

You might unintentionally sound “AI-like” to a detector. This has sparked complaints across Reddit, Quora and even academic appeals forums, where students and freelancers face consequences for content they genuinely wrote.

The Hybrid Content Dilemma

Modern writing is often blended:

- You brainstorm in ChatGPT

- Add your own examples

- Rephrase and expand it by hand

The result? Hybrid content, partially AI-assisted, partially human.

But here’s the problem: Detectors don’t always separate the parts. If just 20–30% of your draft has LLM-style phrasing, the entire piece may be flagged. QuillBot, for example, offers sentence-level breakdowns, but tools like Turnitin report only an overall probability, leaving writers with little clarity.

When “Good” = “Too Clean”

Ironically, the more professional your writing sounds, the more likely it is to be flagged.

Why?

- AI tools are optimized for coherence, logic and rhythm

- Editors and tools (like Grammarly) reinforce this

- Detectors see that polish and assume it’s machine-made

So you’re left in a weird place:

Write well and risk getting flagged. Write messy and look unprofessional.

The Solution: Write → Test → Rewrite

This is where smart creators now work with a feedback system.

With tools like NetusAI, you can:

- Scan your content in real-time

- See which parts are triggering red flags

- Rewrite just those blocks using AI bypass engines

- Rescan until you hit “Human”

Choose from two rewriting engines:

- for fixing sensitive paragraphs

- for polishing full sections

Instead of guessing, you’re testing, which cuts the risk of a false positive before anyone else sees the draft.

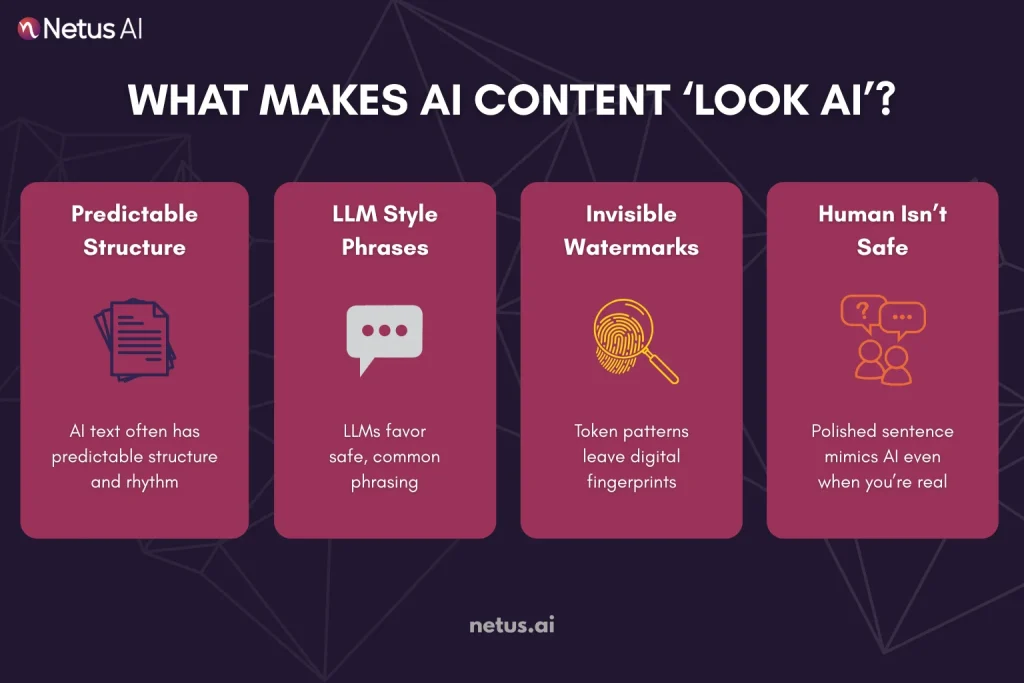

What Makes AI Content “Look AI”?

Most AI detection tools aren’t scanning your content for plagiarism, topic depth or even truthfulness. They’re scanning for one thing:

Patterns that feel like a machine wrote it.

And unfortunately for writers, good structure and clear thinking often match those patterns.

Predictable Structure

Large language models (LLMs) are trained to be consistent. They don’t ramble. They don’t jump around. They’re incredibly symmetrical. That means their writing often follows a tight loop:

- Every paragraph is 3–4 lines.

- Sentences average the same length.

- Transitions are polished and templated.

- Tone is neutral, informative and safe.

Sound familiar? That’s because even human writers, especially professionals, write this way too. But to a detector, this symmetry is a red flag. If your writing lacks natural variation or “messiness,” it can look AI-generated.

The LLM Style Trap

AI-generated text tends to overuse:

- “In conclusion,” “It’s important to note,” “One possible reason is”

- Passive voice

- Vague hedging phrases (“some experts believe,” “this could be interpreted”)

- Over-explaining or restating ideas

These statistical tics are easy to spot at scale and they’re exactly what detectors flag. Even if you write this way naturally, it can make your content appear machine-written.

Watermarking & Traceable Tokens

Some detection tools also look for invisible cues:

- Watermarking: Patterns subtly inserted during LLM generation (like token frequency or positioning).

- Token burst patterns: Repetition in how GPT models format punctuation or syntax.

OpenAI previously explored embedding watermarks in GPT output, a kind of digital fingerprint, though this has not been widely deployed yet. Still, detectors like Smodin and HIX may analyze token spacing or other low-level signals, especially in long-form content.
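For a feel of how a token-level watermark could be checked, here is a toy sketch of the "green list" idea from the academic literature (Kirchenbauer et al., 2023). It is not confirmed to be what OpenAI explored or what any named detector uses. The hash-based `is_green` rule, the 50% split and the tiny vocabulary are all assumptions made for the example.

```python
import hashlib
import math

GREEN_FRACTION = 0.5  # assumed share of candidate tokens marked "green" at each step

def is_green(prev_token, token):
    """Pseudo-randomly assign `token` to the green list, keyed on the previous token."""
    digest = hashlib.sha256(f"{prev_token}|{token}".encode("utf-8")).digest()
    return digest[0] < 256 * GREEN_FRACTION

def watermark_z_score(tokens):
    """How far the green-token count sits above what unmarked text would show."""
    n = len(tokens) - 1  # number of (previous, current) pairs
    if n < 1:
        raise ValueError("need at least two tokens")
    green = sum(is_green(a, b) for a, b in zip(tokens, tokens[1:]))
    expected = GREEN_FRACTION * n
    std = math.sqrt(n * GREEN_FRACTION * (1 - GREEN_FRACTION))
    return (green - expected) / std

# Toy "watermarked" generator: always pick a green token from a made-up vocabulary.
vocab = [f"w{i}" for i in range(50)]
marked = ["w0"]
for _ in range(40):
    marked.append(next(t for t in vocab if is_green(marked[-1], t)))

print(watermark_z_score(marked) > 4)  # every transition is green, so the score is high
```

Ordinary text should land near a z-score of zero, because about half of its tokens fall on the green list by chance. A generator that quietly favors green tokens pushes the score far above that, without changing anything a reader would notice.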

When Human = AI-Like

Here’s the paradox:

The more you polish your draft (with Grammarly, templates, SEO best practices), the more your content risks matching AI behavior.

That’s why even high-performing blog writers, academic researchers and email marketers sometimes get hit with false flags. You’re not writing like a bot; the bot was trained to write like you.

Final Thoughts

By now, one thing’s clear: AI detectors don’t care how long you spend writing. They don’t know if you used ChatGPT, Grammarly or just years of good writing habits. They scan for patterns (perplexity, burstiness and stylometric fingerprints), and if your content falls into the wrong statistical zone, you’ll get flagged.

Whether you’re a student, marketer, founder or freelance writer, fighting false flags isn’t about writing less clearly. It’s about testing, adjusting and rewriting with intent.

That’s exactly where tools like NetusAI become your safety net. Instead of crossing your fingers before submitting or publishing, NetusAI’s real-time detect → rewrite → retest loop gives you control over how your content scores.

You can see your AI risk before your professor, editor or Google’s algorithms do.

If you’re serious about keeping your work human-safe and detection-proof, without gutting your tone or wasting hours, try scanning your next draft through NetusAI’s detector and bypass engine.

- Less guessing

- More greenlights

- Zero last-minute panic

FAQs

No. AI detectors don’t track your ChatGPT history or check your OpenAI account. They analyze patterns like perplexity, burstiness, and stylometry in the text itself to predict if it’s AI-generated.

Many human writers (especially those using tools like Grammarly or writing for SEO) unintentionally create text with low burstiness and high predictability. Detectors flag this as AI-like because it mirrors LLM writing patterns.

No. These tools don’t have access to ChatGPT’s training data or your chat history. They rely on probability models and writing-pattern analysis—not content-matching.

Not always. Small edits or synonym swaps won’t break deep AI patterns. To reduce detection risk, you’ll need structural rewriting—changing sentence rhythm, tone, and flow. Tools like NetusAI specialize in this kind of advanced rewriting.

Possibly. Over-editing with grammar tools can polish your content into a uniform, overly clean tone—one of the exact patterns AI detectors look for. Keeping natural quirks in your writing helps.

Lowering or raising ChatGPT’s temperature can alter randomness in output, but it’s not a guaranteed fix. Even high-temperature outputs can carry detectable stylometric patterns.

A good practice is:

- Draft (AI or human)

- Run through a detector like NetusAI

- Rewrite flagged sections

- Retest

This detect-rewrite-retest loop greatly reduces your risk of false positives.

Yes, and this is becoming more common. Even if only 20-30% of your draft is AI-generated, it may still push your detection score into the red.

No tool can promise total invisibility forever, but tools like NetusAI’s Bypass Engine V2 come close by targeting both detection patterns and structural AI footprints—making your content statistically safer than basic paraphrasing tools.

Watermarking research is ongoing, but as of now (mid-2025), OpenAI has not deployed widespread token-level watermarking in ChatGPT output. Still, future detectors may adopt more advanced fingerprinting methods, so staying proactive with humanization tools is smart.