Why AI Detectors Flag Human Writing

You spend a Saturday night polishing an essay, double-checking every citation and running one last grammar pass, only to see the detector flash red: “Likely Written by AI.” The same thing happens to marketers tweaking web copy, bloggers crafting listicles and even novelists posting excerpts online.

What’s going on? AI detectors like ZeroGPT aren’t mind readers; they don’t care who wrote the text. Instead, they judge how it’s written. If your sentences look too predictable, too uniform or too “machine-clean,” the algorithm assumes a language model must be behind the keyboard, no matter how many coffee-fueled hours you actually spent.

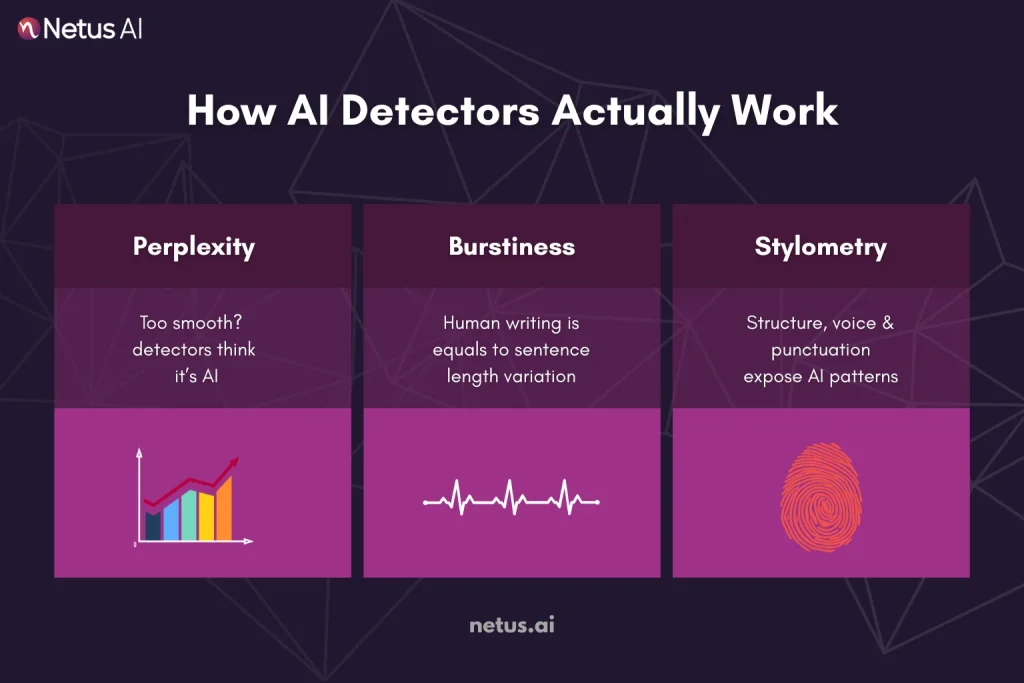

How AI Detectors Actually Work

Think of detectors as forensic linguists, not watermark scanners. They statistically profile your writing against LLM fingerprints. Three metrics dominate the assessment:

Perplexity

Think of perplexity as a “surprise meter.” It measures how hard a language model finds it to predict each next word: the more predictable the text, the lower the perplexity. LLMs tend to produce smooth, highly predictable prose, which often yields low perplexity scores, so detectors treat low perplexity as a hallmark of AI’s predictable flow. Human writing usually carries more linguistic texture, and you can add more on purpose: weave in colloquialisms (“slam dunk”), rhetorical questions (“See the issue?”) or abrupt tonal pivots to inject unpredictability.
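
To make the “surprise meter” concrete, here is a minimal sketch in Python. Perplexity is the exponential of the average negative log-probability a model assigns to each token. Real detectors score text with large neural language models. This toy instead uses a bigram model with add-one (Laplace) smoothing, trained on a tiny made-up corpus, so the numbers only show the direction of the effect: phrasing the model has already “seen” scores lower perplexity than an unexpected turn of phrase. The function names and the corpus are hypothetical.

```python
import math
from collections import Counter


def train_bigram(corpus: list[str]) -> tuple[Counter, Counter, set[str]]:
    """Count bigrams and their left-hand contexts in a tiny, whitespace-tokenized corpus."""
    contexts: Counter = Counter()
    bigrams: Counter = Counter()
    vocab: set[str] = {"</s>"}
    for sentence in corpus:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        vocab.update(tokens[1:])
        contexts.update(tokens[:-1])  # every token except the last acts as a context
        bigrams.update(zip(tokens[:-1], tokens[1:]))
    return contexts, bigrams, vocab


def perplexity(sentence: str, contexts: Counter, bigrams: Counter, vocab: set[str]) -> float:
    """exp(average negative log-probability per token) under an add-one smoothed bigram model."""
    tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
    v = len(vocab) + 1  # +1 lumps all unseen words into a single "unknown" type
    total_log_prob = 0.0
    for prev, word in zip(tokens[:-1], tokens[1:]):
        # Laplace smoothing: unseen bigrams still get a small, non-zero probability.
        p = (bigrams[(prev, word)] + 1) / (contexts[prev] + v)
        total_log_prob += math.log(p)
    return math.exp(-total_log_prob / (len(tokens) - 1))


# Hypothetical training corpus full of stock phrasing.
corpus = [
    "it is important to note that the results are clear",
    "it is important to note that the data are clear",
    "it is important to note that the method is simple",
]
model = train_bigram(corpus)

formulaic = "it is important to note that the results are clear"
surprising = "honestly the results knocked my socks off"
print(f"formulaic:  {perplexity(formulaic, *model):.1f}")
print(f"surprising: {perplexity(surprising, *model):.1f}")
```

The formulaic sentence reuses bigrams the model has counted, so each word is easy to guess. The colloquial one is built almost entirely from unseen pairs and scores noticeably higher.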

Burstiness

Humans naturally mix short, punchy sentences with long, winding ones. That variation, called burstiness, gives text a rhythm that detectors associate with real authors. AI-generated drafts, especially “straight out of the box,” tend to keep sentence lengths uniform. Detectors flag that flat rhythm as another machine-made clue.
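
Detectors don’t publish their exact formulas, so the sketch below assumes one simple proxy for burstiness: the coefficient of variation of sentence lengths, which is the standard deviation divided by the mean, with length measured in words. Some descriptions of burstiness use a different measure, such as variation in perplexity across sentences. The naive sentence splitter is also an assumption.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Word counts per sentence, using a naive split after ., ! or ? plus whitespace."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length; 0.0 means every sentence is the same length."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # not enough sentences to measure variation
    return statistics.pstdev(lengths) / statistics.mean(lengths)


flat = "The tool is useful for writers. The tool is easy for students. The tool is good for teams."
varied = ("Stop. Before you paste that draft anywhere, read it aloud and listen "
          "for where the rhythm goes flat and sleepy. See?")
print(f"flat:   {burstiness(flat):.2f}")
print(f"varied: {burstiness(varied):.2f}")
```

Here the repetitive sample scores 0.00 because every sentence has six words. The varied sample (1, 19 and 1 words) scores about 1.21.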

Stylometry

Stylometry analyzes writing like a fingerprint, tracking your passive-voice usage, punctuation rhythms, average clause length and even sentence-starter preferences. Large language models (LLMs) cluster stylistically within a narrow “neutral” band, optimized for predictability. If your own voice inadvertently falls into this zone, perhaps because you expand every contraction or avoid colloquialisms, detectors may falsely flag you as AI. The cruel irony? Traditional writing virtues (clarity, formality) can now invite algorithmic suspicion.
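
A stylometric profile boils down to a handful of numbers. The sketch below computes four illustrative ones: average sentence length, commas per sentence, a crude count of passive constructions, and how often the most common opening word starts a sentence. The feature set and the passive-voice regex are assumptions chosen for illustration. Real stylometry uses far richer features, and the regex misses irregular participles (“was written”) and misfires on some adjectives (“is tired”).

```python
import re
from collections import Counter

# Crude, assumed heuristic: a form of "to be" followed by a word ending in -ed.
PASSIVE = re.compile(r"\b(?:am|is|are|was|were|be|been|being)\s+\w+ed\b", re.IGNORECASE)


def stylometric_profile(text: str) -> dict[str, float]:
    """A handful of illustrative style features; real stylometry uses many more."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    if not sentences:
        raise ValueError("text contains no sentences")
    n = len(sentences)
    starters = Counter(s.split()[0].lower().strip(",;:\"'") for s in sentences)
    return {
        "avg_sentence_len": len(text.split()) / n,
        "commas_per_sentence": text.count(",") / n,
        "passive_per_sentence": len(PASSIVE.findall(text)) / n,
        # Share of sentences that open with the single most common first word.
        "top_starter_share": starters.most_common(1)[0][1] / n,
    }


sample = ("The report was reviewed by the team. The results were verified twice. "
          "The findings are clear, concise, and useful.")
for feature, value in stylometric_profile(sample).items():
    print(f"{feature}: {value:.2f}")
```

Every sentence in the sample opens with “The”, and two of the three are passive. That is exactly the kind of narrow, uniform profile the paragraph above describes.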

Key takeaway: detectors don’t know or care who’s typing. They only see numbers that say “This text behaves like ChatGPT.” In the next section, we’ll look at the most common real-life reasons your human writing slides into those danger zones.

Top Reasons Human Writing Gets Flagged

AI detectors don’t single out plagiarists; they flag patterns. Unfortunately, many perfectly legitimate writing habits overlap with those patterns. Here’s where real authors accidentally look like bots.

1. Over-Polished Grammar

Years of Grammarly suggestions can sand away every rough edge: comma splices vanish, clauses align and transitions read like textbook examples. That’s great for clarity, but all that mechanical perfection can drag perplexity down and scream “machine-made” to an algorithm.

2. Rigid Essay or Blog Structures

Intro, three tidy body paragraphs, cookie-cutter transitions (“Firstly, Secondly, Lastly”), then a neat conclusion. Detectors love this kind of symmetry because large language models default to it. Real humans typically wander a bit, even in formal writing.

3. Sentence-Length Uniformity

If most of your sentences clock in around 18–20 words, you’ve flattened burstiness. Humans throw in a fragment. Then a winding 30-word tangent. Variety signals life; uniformity raises suspicion.
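
To find where your rhythm flattens, you can scan for stretches of consecutive sentences whose lengths barely change. The sketch below is a hypothetical helper, not a feature of any detector. It flags runs of at least `min_run` sentences that stay within `tolerance` words of the run’s first sentence. The default `tolerance=2` roughly matches an 18–20-word band.

```python
import re


def flat_runs(text: str, tolerance: int = 2, min_run: int = 3) -> list[tuple[int, int]]:
    """Find stretches of at least `min_run` consecutive sentences whose word counts stay
    within `tolerance` words of the stretch's first sentence. Returns (start, end)
    sentence indices with `end` exclusive."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    lengths = [len(s.split()) for s in sentences]
    runs: list[tuple[int, int]] = []
    start = 0
    for i in range(1, len(lengths) + 1):
        # A stretch ends at the end of the text or when a sentence leaves the length band.
        if i == len(lengths) or abs(lengths[i] - lengths[start]) > tolerance:
            if i - start >= min_run:
                runs.append((start, i))
            start = i
    return runs


draft = ("The tool is useful for writers. The tool is easy for students. "
         "The tool is good for teams. Stop. Before you paste that draft anywhere, "
         "read it aloud and listen for where the rhythm goes flat and sleepy.")
print(flat_runs(draft))  # [(0, 3)]: the first three six-word sentences
```

The result points you at the first three sentences as the place to add a fragment or a longer tangent. The one-word “Stop.” and the 19-word sentence after it already break the pattern.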

4. Template-Driven SEO and Copy Tools

Outline generators, AI meta-description helpers and “perfect H1-H2 ladder” plug-ins make drafts look tidy, but they also inject repetitive phrasing and predictable keyword placement. Detectors spot those patterns fast.

5. Non-Native Writers Playing It Safe

Many authors writing in English as a second language (ESL) adopt “safe” grammar and vocabulary to avoid mistakes. Ironically, that restraint can mimic AI’s controlled output. Mixing in idioms or a more personal voice helps rebalance the signal.

6. Heavy Reuse of Stock Phrases

Phrases like “In today’s fast-paced world” or “It is important to note that” are everywhere in LLM training data, so models reproduce them constantly. Sprinkle them too liberally and detectors are more likely to assume a bot wrote the text.
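
A quick self-check is simply to count your pet phrases before a detector does. The watch-list below is hypothetical: start with the two phrases above and add your own. The per-1,000-words figure is an assumed normalization that lets you compare drafts of different lengths. No detector is known to use this exact threshold or list.

```python
# Hypothetical watch-list; extend it with the stock phrases you catch yourself overusing.
STOCK_PHRASES = [
    "in today's fast-paced world",
    "it is important to note that",
]


def stock_phrase_hits(text: str) -> dict[str, int]:
    """Count case-insensitive, non-overlapping occurrences of each watch-listed phrase."""
    lowered = text.lower().replace("\u2019", "'")  # treat curly apostrophes as straight ones
    counts = {phrase: lowered.count(phrase) for phrase in STOCK_PHRASES}
    return {phrase: n for phrase, n in counts.items() if n > 0}


def hits_per_1000_words(text: str) -> float:
    """Total stock-phrase hits scaled to a 1,000-word draft, so long and short texts compare fairly."""
    words = len(text.split())
    return 1000 * sum(stock_phrase_hits(text).values()) / words if words else 0.0


draft = ("In today\u2019s fast-paced world, it is important to note that drafts vary. "
         "It is important to note that tone matters.")
print(stock_phrase_hits(draft))
print(f"{hits_per_1000_words(draft):.0f} hits per 1,000 words")
```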

Key takeaway: the better your writing matches textbook standards, the more you risk looking algorithmic. Next up, we’ll look at how often false positives truly happen and why the numbers keep climbing.

False Positives: How Often Does Human Writing Get Tagged as AI?

False positives aren’t rare edge cases anymore; they’re a growing pain point for schools, publishers and even corporate communications teams.

Turnitin’s Own Numbers

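When Turnitin rolled out its AI-writing indicator in 2023, the company publicly acknowledged a sentence-level false-positive rate of roughly 4%: about 4 out of every 100 genuinely human sentences can be highlighted as “AI-generated.” Its stated document-level rate was lower, under 1% for documents with 20% or more AI writing detected. Those figures sound small until you multiply them across thousands of student submissions: even 1% of 10,000 honest papers means 100 students wrongly flagged, and you’ve got a grading nightmare.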
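
The scaling argument is plain multiplication: expected false flags = false-positive rate × number of human-written items checked. Items can be sentences or whole papers, depending on which rate you plug in. This minimal sketch assumes every item faces the same fixed rate, which no detector guarantees. The rates and volumes in the loop are illustrative, not measurements from any vendor.

```python
def expected_false_flags(false_positive_rate: float, human_items: int) -> float:
    """Expected number of genuinely human items flagged as AI at a fixed per-item rate."""
    return false_positive_rate * human_items


# Illustrative rates and volumes only, not measurements from any vendor.
for rate in (0.01, 0.04):
    for items in (1_000, 10_000):
        flagged = expected_false_flags(rate, items)
        print(f"rate {rate:.0%} x {items:,} human items -> about {flagged:,.0f} false flags")
```

At 1% the sketch gives 10 and 100 false flags. At 4% it gives 40 and 400, so the absolute number of wrongly accused writers grows with volume even when the rate stays fixed.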

Reddit & Student Forum Stories

Online communities are flooded with examples:

- A graduate student’s meticulously cited thesis flagged at 92% “AI.”

- A freelance blogger’s SEO article rejected by a client because ZeroGPT called it bot-generated.

- Multiple ESL writers shared that their “safest” English gets flagged more often than their casual drafts.

Why the Trend Is Rising

- Detectors are tightening. As LLM-generated text floods the web, detector vendors raise their sensitivity to keep up.

- Writing tools push uniformity. Grammarly, SEO optimizers and outline generators herd writers toward the same “perfect” structures.

- Stylometric drift. Over time, human writing may itself start to resemble AI output, simply because we read so much AI-generated content online.

How to Avoid Getting Flagged

Detectors judge patterns, not intentions, so the antidote is to introduce healthy unpredictability without sacrificing clarity. Below are practical tweaks that raise the “human” signal while keeping your ideas front and center.

1. Draft in Layers, Not in One Pass

Start with a skeletal outline → expand into raw paragraphs → revise for voice and rhythm. This layered method forces you to recompose phrasing and break predictable patterns, avoiding the robotic first-draft trap.

2. Vary Sentence Rhythm on Purpose

Follow a long, winding sentence with a crisp, five-word punch. Mix simple statements with compound sentences and the occasional rhetorical question. This up-and-down cadence boosts burstiness, the variation detectors expect from real people.

3. Swap Template Transitions for Real Voice

Replace “In conclusion,” with something authentic to your style: “Big picture?” or “Bottom line?” Even a small switch disrupts the template patterns baked into large language models.

4. Sprinkle in Low-Stakes Imperfections

A contraction here, a short parenthetical aside (yep, like this one), a dash of informal phrasing: tiny human quirks like these can raise perplexity just enough to tip the algorithm toward “human-written.”

5. Check Tone Alignment

If you’re writing an academic paper, keep formal diction but loosen the structure; if it’s a blog, let conversational energy in. The combination to avoid is formal word choice locked into a rigid structure, which often mirrors AI output.

6. Run a Pre-Submission Scan, Then Targeted Rewrite

Paste your draft into a detector before you hand it off. If certain sections light up in red, rewrite only those paragraphs. Tools with a built-in detect → rewrite → retest loop (e.g., NetusAI) streamline this process: you tweak, rescan and watch whether the score flips from “Detected” to “Human” in real time.
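
The targeted detect → rewrite → retest loop can be sketched in a few lines. In this sketch, `score_fn` is a hypothetical placeholder for whichever detector you actually use, with higher meaning more “AI-like”. No real detector’s API, including NetusAI’s, is assumed. The toy scorer and the 0.5 threshold are stand-ins for illustration only.

```python
from typing import Callable


def flag_paragraphs(text: str, score_fn: Callable[[str], float], threshold: float = 0.5) -> list[int]:
    """Return the indices of blank-line-separated paragraphs whose score reaches `threshold`.

    `score_fn` is a placeholder for whatever detector you actually use
    (higher = more "AI-like"); no real detector API is assumed here."""
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    return [i for i, p in enumerate(paragraphs) if score_fn(p) >= threshold]


def toy_score(paragraph: str) -> float:
    # Hypothetical stand-in scorer: 1.0 if a stock phrase appears, otherwise 0.0.
    return 1.0 if "it is important to note" in paragraph.lower() else 0.0


draft = (
    "I almost didn't write this.\n\n"
    "It is important to note that the results are clear.\n\n"
    "Big picture? Test early, test often."
)
print(flag_paragraphs(draft, toy_score))  # [1]: rewrite only that paragraph

revised = draft.replace("It is important to note that the results", "Honestly, the results")
print(flag_paragraphs(revised, toy_score))  # []: retest after the targeted rewrite
```

Passing the scorer in as a function keeps the loop independent of any one tool. You could swap in a wrapper around your detector of choice without touching the paragraph logic.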

7. Keep Your Own “Voice Bank”

Maintain a personal list of favorite phrases, metaphors or storytelling habits. Sprinkling them into drafts instantly sets your writing apart from generic AI cadence.

Final Thoughts

Algorithms are only growing sharper, but they’re still just math: counting sentence lengths, punctuation quirks and word-prediction scores. That means honest writers can keep getting caught in the net unless they add a dash of unmistakable human texture.

The goal isn’t sloppier prose; it’s authentic cadence. When clarity meets personality, detectors tend to back off and real readers lean in. Write that way and your work stays yours, recognized as human by both people and the algorithms watching in the background.

FAQs

Why does my human writing get flagged as AI?

Because detectors check patterns, not authorship. If your sentences are uniformly structured, vocabulary is predictable and transitions are textbook-clean, the algorithm sees “machine-like” rhythm and flags it, even if you wrote every word yourself.

Does using a grammar checker make my writing look AI-generated?

A moderate grammar pass is fine. But over-polishing every line can erase natural quirks (varying sentence length, occasional informal phrasing) and lower perplexity, precisely the metric detectors watch for.

Will adding deliberate typos help me pass a detector?

Not really. Modern detectors focus on deeper statistics: sentence rhythm, passive-voice frequency and stylometry. Typos may reduce professionalism without raising your “human” score.

How can non-native writers avoid false AI-detection flags?

By deliberately breaking predictable patterns. Mix short, punchy sentences with longer, complex ones to boost burstiness.

Will swapping in synonyms fool a detector?

Simple synonym swaps rarely work. Detectors read through surface-level vocabulary changes. What helps is structural rewriting: shifting clause order, varying rhythm and injecting voice.

Do all AI detectors use the same criteria?

No. Turnitin, Originality.ai, GPTZero and enterprise models weigh signals differently. A draft might pass one tool and fail another. That’s why pre-submission testing across multiple detectors is wise for high-stakes writing.

Does passive voice affect AI detection?

Heavy passive-voice usage is common in AI outputs and can reduce variety. Mixing active and passive constructions, while staying clear, adds balance and reads more human.

Can prompt engineering make AI output read as human?

Prompt tweaks and generation settings (role instructions, temperature changes) help a bit, but the underlying model still favors predictable structures. Manual or tool-assisted humanization after generation is usually required.

What’s a quick way to improve my draft’s burstiness?

Read your draft aloud. Wherever the cadence feels flat, insert a short question, a one-word echo or a longer illustrative sentence. This natural pacing variance lifts burstiness scores.

Is it ethical to use an AI humanizer?

Using AI humanizers isn’t inherently unethical; like grammar checkers, they’re tools for refining tone and rhythm. The ethical line is crossed only when you conceal AI involvement in contexts that demand human originality.